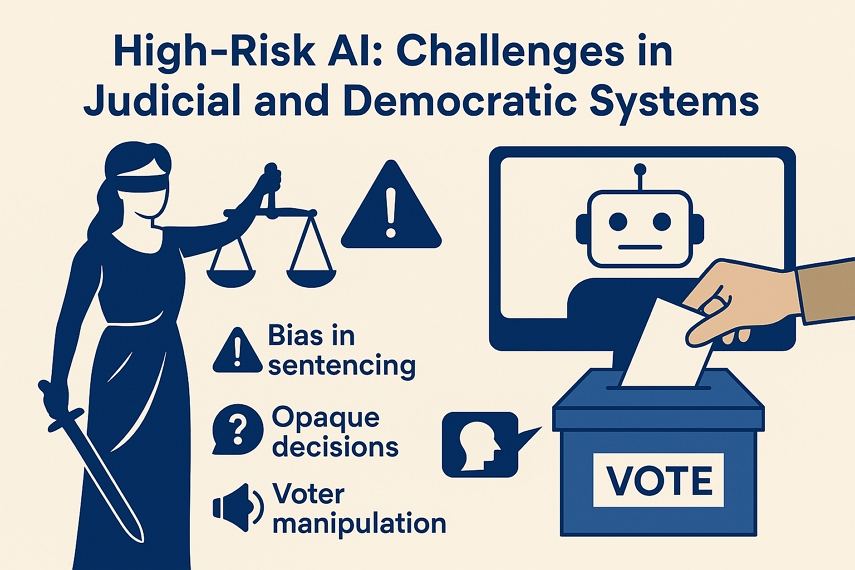

AI in Justice & Democracy: High-Risk Pitfalls Under the EU AI Act

When ProPublica's 2016 investigation found that the COMPAS risk-assessment tool wrongly labeled Black defendants as likely future reoffenders at almost twice the rate of white defendants, the world saw AI's dark potential in sensitive domains. The EU AI Act classifies many AI systems used in the administration of justice and in democratic processes as "high-risk" precisely because errors there threaten fundamental rights and social trust.

In such sensitive fields, how do we harness the power of AI without sacrificing fairness, transparency, or human rights? This article dives into current applications, critical risks, and responsible strategies for navigating AI in high-risk areas.

Part 1. Current AI Applications in High-Risk Domains

AI is increasingly being integrated into decision-making systems across sectors. In judicial and democratic settings, it promises speed and efficiency, but at a price.

1. In the Justice System

- Legal Document Analysis: AI streamlines case prep by processing thousands of legal files.

- Predictive Policing: Algorithms such as PredPol map predicted crime hotspots.

- Risk Assessment Tools: The U.S.-based COMPAS algorithm estimates recidivism risk to guide bail and sentencing decisions.

- Automated Evidence Handling: AI can help flag relevant items in surveillance footage or digital data.

- Automated Legal Assistance: Tools like DoNotPay, an AI chatbot marketed as a "robot lawyer," help users contest parking tickets and prepare small-claims filings in a conversational format.

2. In Democratic Processes

- Voter Behavior Prediction: Campaigns use AI to track voter trends and opinions.

- Targeted Political Ads: Microtargeting enables hyper-personalized political messaging.

- Disinformation Bots: AI-generated content spreads misleading narratives automatically.

- Content Moderation: Platforms deploy AI for auto-flagging or removing sensitive political speech.

- Election Forecasting: Predictive models influence campaign strategy and voter expectations.

Part 2. Critical Risks: Why the EU Flags These as "High-Risk"

The EU AI Act's high-risk classification is rooted in AI's potential to harm fundamental rights, erode public trust, and impact personal freedoms. In justice and democracy, the stakes are especially high.

1. Justice-Specific Threats

- Amplification of Bias: AI trained on historical legal data can reinforce existing inequalities (e.g., racial bias in sentencing).

- Lack of Transparency: "Black-box" models can undermine due process when their outputs cannot be explained or contested.

- Accountability Gaps: When errors occur, it's unclear whether blame lies with developers, institutions, or the algorithm itself.

2. Democracy-Specific Threats

- Manipulation of Public Opinion: Algorithmically generated and targeted propaganda can shift public narratives.

- Erosion of Institutional Trust: Disinformation and AI-fueled chaos degrade faith in elections and democratic systems.

- Digital Exclusion: Biased algorithms may marginalize certain communities or suppress minority opinions.

3. Cross-Domain Dangers

- Data Misuse: Sensitive biometric or behavioral data may be used without consent.

- Security Threats: AI systems in public infrastructure are vulnerable to cyberattacks.

- Overdependence: Over-reliance on AI can weaken critical human reasoning and oversight.

Part 3. Key Risks of Using AI in These Fields

AI's efficiency comes with trade-offs, particularly in fields where fairness, justice, and democracy are at stake.

1. Risks in the Judicial System

- Sentencing Bias: AI trained on flawed data can perpetuate or worsen systemic discrimination.

- Opacity in Decision-Making: AI-generated recommendations often lack explainability, undermining legal transparency.

- Erosion of Human Judgment: Judges and lawyers might over-rely on algorithmic recommendations, reducing contextual nuance.

2. Risks in the Democratic System

- Manipulation via AI-Generated Content: Fake videos, doctored images, and misleading news can sway voters.

- Loss of Electoral Trust: People may distrust results if AI's role in shaping narratives is hidden.

- Polarization via Microtargeting: AI tailors content to echo voter biases, exacerbating societal divisions.

Part 4. Mitigation Strategies: Complying with the EU AI Act

The EU AI Act mandates a set of safeguards for high-risk AI uses. Compliance isn't just a legal requirement; it's a moral one.

1. Mandatory Safeguards

- Human Oversight: AI should never make final decisions without human validation.

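- Bias Testing & Transparency Logs: Providers must examine their data for possible biases, keep automatic event logs, and give deployers clear information about how the system works and where its limits lie.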

- Data Governance: All data usage must align with GDPR standards and ethical consent practices.
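To make "bias testing" concrete, the sketch below compares false positive rates across groups, the metric at the heart of ProPublica's COMPAS analysis: among people who did not reoffend, how often was each group flagged high-risk? This is a minimal illustration under stated assumptions, not a compliance procedure. The EU AI Act does not prescribe a specific fairness metric, and the `Case` record, the toy data, and the `MAX_RATIO` tolerance are all hypothetical.

```python
from dataclasses import dataclass

@dataclass
class Case:
    group: str               # protected-attribute label, used only for auditing
    flagged_high_risk: bool  # the tool's output
    reoffended: bool         # outcome observed during the follow-up period

def false_positive_rates(cases: list[Case]) -> dict[str, float]:
    """Per group: share of people who did NOT reoffend but were flagged high-risk."""
    negatives: dict[str, int] = {}
    false_positives: dict[str, int] = {}
    for case in cases:
        if case.reoffended:
            continue  # only non-reoffenders can be false positives
        negatives[case.group] = negatives.get(case.group, 0) + 1
        if case.flagged_high_risk:
            false_positives[case.group] = false_positives.get(case.group, 0) + 1
    return {g: false_positives.get(g, 0) / n for g, n in negatives.items()}

def disparity_ratio(rates: dict[str, float]) -> float:
    """Highest group rate divided by lowest (1.0 means parity)."""
    lowest, highest = min(rates.values()), max(rates.values())
    if highest == 0:
        return 1.0  # no false positives in any group
    return float("inf") if lowest == 0 else highest / lowest

# Toy audit sample, invented for illustration only.
cases = [
    Case("A", True, False), Case("A", True, False), Case("A", False, False),
    Case("A", False, False), Case("A", True, True),
    Case("B", True, False), Case("B", False, False), Case("B", False, False),
    Case("B", False, False), Case("B", True, True),
]
MAX_RATIO = 1.25  # hypothetical tolerance chosen by the auditing body

rates = false_positive_rates(cases)
ratio = disparity_ratio(rates)
print(rates, ratio)
if ratio > MAX_RATIO:
    print("Audit flag: false positive rates differ across groups; escalate for review.")
```

In this toy sample, half of group A's non-reoffenders are flagged versus a quarter of group B's, so the ratio is 2.0 and the audit raises a flag. A real audit would also look at other metrics (such as calibration), since several common fairness criteria cannot all be satisfied at once.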

2. Proactive Best Practices

For Governments:

- Independent Algorithm Audits: Third-party evaluations ensure neutrality and accuracy.

- Public AI Registries: Citizens should know which high-risk systems are deployed and where.

For Legal Professionals:

- Use Explainable AI (XAI): Tools should provide clear, interpretable justifications for outputs.

- Monitor Bias Continuously: Even approved systems need ongoing scrutiny to avoid regression.
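One simple way to get the "clear, interpretable justifications" that XAI calls for is an inherently interpretable model, such as a points-based scorecard where every feature's contribution to the final score can be read off directly. The sketch below assumes such a scorecard; the feature names and point values are hypothetical and do not describe COMPAS or any other real tool. Complex black-box models would instead need post-hoc attribution methods, which only approximate the model's reasoning.

```python
# Hypothetical points-based scorecard: each value is the number of risk points
# added per unit of that feature. Illustrative only; not any real tool's model.
POINTS_PER_UNIT = {
    "prior_convictions": 2,
    "failed_court_appearances": 3,
    "age_under_25": 1,
}

def score_with_explanation(features: dict[str, int]) -> tuple[int, list[tuple[str, int]]]:
    """Return the total score plus each feature's contribution, largest first."""
    contributions = [(name, POINTS_PER_UNIT[name] * value) for name, value in features.items()]
    total = sum(points for _, points in contributions)
    # Sort so a reviewing judge or lawyer sees the biggest drivers of the score first.
    contributions.sort(key=lambda item: abs(item[1]), reverse=True)
    return total, contributions

defendant = {"prior_convictions": 3, "failed_court_appearances": 0, "age_under_25": 1}
total, reasons = score_with_explanation(defendant)
print(f"Total score: {total}")
for name, points in reasons:
    print(f"  {name}: {points:+d} points")
```

Because the explanation is the model itself, a defendant can contest a specific line item (for example, an incorrect count of prior convictions), which is exactly what a black-box score makes difficult.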

Conclusion

Deploying AI in justice and democracy demands more than compliance; it requires ethical vigilance. Tools must enhance human judgment, not replace it. Article 1 of the EU AI Act frames the regulation's purpose as promoting human-centric and trustworthy AI while ensuring a high level of protection of fundamental rights, including democracy and the rule of law. Institutions adopting AI must prioritize independent bias and transparency audits and multi-stakeholder governance. Only then can we prevent injustices like those at issue in Loomis v. Wisconsin, where a defendant challenged a sentence informed by a proprietary COMPAS score, and safeguard democracy from algorithmic subversion.


Daniel Walker

Editor-in-Chief

This post was written by Editor Daniel Walker, whose passion lies in bridging the gap between cutting-edge technology and everyday creativity. His content inspires readers to embrace digital tools confidently.
