AI Voice Imitation in Podcasts: Legal Boundaries and a Safe-Use Guide

AI voice synthesis is transforming podcasting, making it easier than ever to create lifelike narration, mimic iconic voices, or even build entire shows around artificially generated speech. But with great creative power comes growing legal complexity. Can you legally mimic a celebrity's voice using AI? Is it fair use, parody, or a violation of someone's publicity rights?

This article examines the legal limits on using AI-generated voices in podcasting, particularly when imitating public figures. Through real-world cases and current regulations, we'll break down what's allowed, what's not, and how podcasters can avoid legal trouble while enhancing their shows with AI voice tools.

Part 1: Cases Where Voice Imitation Crossed the Legal Line

In early 2024, an AI-generated podcast episode went viral for all the wrong reasons. Titled "The Joe Rogan Experience-but Fully AI," the episode featured an entirely synthetic version of Joe Rogan's voice, interviewing an AI-generated voice mimicking Elon Musk. While the content was technically labeled as parody, the episode's ultra-realistic sound and professional production blurred the lines between satire and deception.

Listeners were confused. Some believed it was a leaked episode. Others accused the creators of impersonation. Spotify and YouTube removed the content within days following public backlash and likely pressure from the individuals' legal teams.

This wasn't an isolated incident. Several deepfake voice clips mimicking politicians, celebrities, and influencers have surfaced, often used in political misinformation campaigns or fake endorsements. The issue lies not just in mimicking the voice, but in how it's used, especially if it's done without permission and in a commercial or misleading context.

Such cases demonstrate that AI voice imitation can cross into legally risky territory, particularly when:

- The voice sounds convincingly real,

- It's presented without clear disclosure,

- Or it's used to profit off someone's identity or reputation.

These scenarios raise urgent questions about whether a person's voice is protected under copyright, personality rights, or publicity law and, if so, where the boundaries of "creative imitation" truly lie.

Part 2: What Are the Copyright and Personality Rights for AI Voices?

The human voice, while not protected by copyright itself, is increasingly being recognized in law as part of a person's identity, particularly in cases involving celebrities, politicians, or public figures. When AI is used to replicate someone's voice without consent, especially in a way that confuses, misleads, or exploits, it may cross into legal infringement.

Copyright Law: Voices, unlike written scripts or music, are generally not covered by copyright. This means you can't copyright a raw vocal tone, but a specific recorded vocal performance can be protected as intellectual property.

Right of Publicity (U.S.): This legal doctrine protects an individual's name, likeness, and sometimes voice from unauthorized commercial use. States like California and New York specifically recognize the voice as part of this protection, meaning AI-mimicked voices could trigger lawsuits if used in advertising, monetized media, or branded podcasts.

Passing Off (UK): Under UK law, using someone's voice to suggest false endorsement can lead to civil claims, even if the voice is synthetic, especially if it harms their reputation or brand.

EU Personality Rights: European countries like Germany and France place strong emphasis on personal dignity and image rights. AI-generated impersonations used without consent, even for non-commercial purposes, may still violate these rights if they damage a person's public standing.

Factors That Increase Legal Risk

AI voice usage is more likely to be illegal if:

- It's used for commercial gain (e.g. ads, brand placements, monetized podcasts)

- There is no clear disclosure that the voice is synthetic

- It mimics a living person and implies their approval or participation

- It's used in deceptive or defamatory contexts
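The checklist above can be read as a simple set of yes/no questions about an episode. The following is a minimal sketch of that idea, not a legal test: the `VoiceUse` class, its field names, and the `risk_flags` function are hypothetical names introduced here for illustration only.

```python
from dataclasses import dataclass


@dataclass
class VoiceUse:
    """Hypothetical description of how a synthetic voice is used in an episode."""
    commercial: bool               # ads, brand placements, monetized podcast
    disclosed: bool                # audience is clearly told the voice is synthetic
    implies_real_person: bool      # mimics a living person and implies approval
    deceptive_or_defamatory: bool  # used in a deceptive or defamatory context


def risk_flags(use: VoiceUse) -> list[str]:
    """Return the risk factors from the checklist that apply to this use."""
    flags = []
    if use.commercial:
        flags.append("commercial gain")
    if not use.disclosed:
        flags.append("no clear disclosure")
    if use.implies_real_person:
        flags.append("implies a living person's approval")
    if use.deceptive_or_defamatory:
        flags.append("deceptive or defamatory context")
    return flags


# Illustrative input: a monetized episode with no disclosure.
episode = VoiceUse(
    commercial=True,
    disclosed=False,
    implies_real_person=False,
    deceptive_or_defamatory=False,
)
print(risk_flags(episode))
```

An empty list does not mean a use is lawful; it only means none of the four factors listed here were flagged.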

In summary, while laws are still catching up to AI's rapid progress, many jurisdictions already have existing frameworks that can be applied to unauthorized AI voice cloning. The safest path for content creators: always disclose when a voice is synthetic, never use another's voice for commercial gain without permission, and avoid misleading your audience.

Part 3: Best Practices for Podcasters to Stay Creative and Legal

To help podcasters stay on the right side of the law, here are key guidelines for using AI-generated voices safely and creatively:

1. Always Disclose When a Voice Is AI-Generated

Inform your audience whenever a voice is AI-generated. This can be done at the start of the episode or in the show notes. Transparency builds trust and supports compliance with emerging laws like the EU AI Act.

Example: "This episode features voice content created using artificial intelligence for parody and entertainment purposes only."
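For podcasters who assemble show notes with a script, the disclosure can be added automatically. This is a minimal sketch under that assumption: the `with_disclosure` helper and the `DISCLOSURE` constant are hypothetical names, and the wording is the example sentence above.

```python
DISCLOSURE = (
    "This episode features voice content created using artificial "
    "intelligence for parody and entertainment purposes only."
)


def with_disclosure(show_notes: str, disclosure: str = DISCLOSURE) -> str:
    """Put the disclosure at the top of the show notes unless it is already there."""
    if disclosure in show_notes:
        return show_notes
    return f"{disclosure}\n\n{show_notes.strip()}"


notes = with_disclosure("In this episode we talk about synthetic narration.")
print(notes)
```

Placing the notice first keeps it visible in podcast apps that truncate long descriptions, and the membership check avoids adding it twice.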

2. Avoid Imitating Real People

Using AI to mimic a public figure's voice without consent can violate their right of publicity. Even non-commercial content may cause confusion or legal issues if the imitation is too realistic.

3. Keep Commercial Use Separate

Avoid using AI-generated voices that resemble real people in ads, sponsored segments, or monetized shows unless you have permission. Commercial exploitation significantly increases legal risk.

4. Use Tools Designed for Ethical Creation

Choose platforms like HitPaw VoicePea, which offer creative voice modification without encouraging impersonation. Tools with ethical guidelines help reduce misuse and protect creators.

5. Stay Updated on Laws and Policies

Laws around AI content are evolving fast. Follow regional regulations and podcast platform rules, and consult legal experts if your content involves recognizable voices or sponsorships.

Part 4: How to Safely Use HitPaw AI for Voice Changing

AI voice tools like HitPaw VoicePea offer powerful ways to elevate podcast production, whether for narrating stories, modifying vocal tones, or creating stylized characters. But as we've seen, using these tools without clear ethical and legal guardrails can quickly violate publicity rights or mislead audiences.

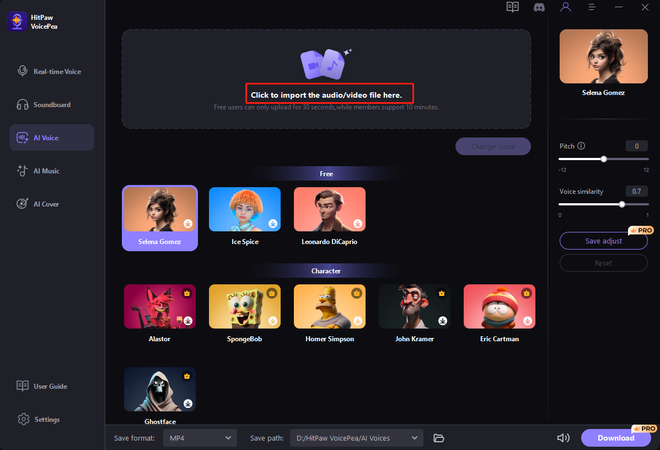

Step 1: Import Audio or Video

Click "AI Voice" and import your audio or video files. HitPaw VoicePea supports many formats, including MP4, MP3, WAV, MKV, 3GP, FLAC, WMA, and MOV.

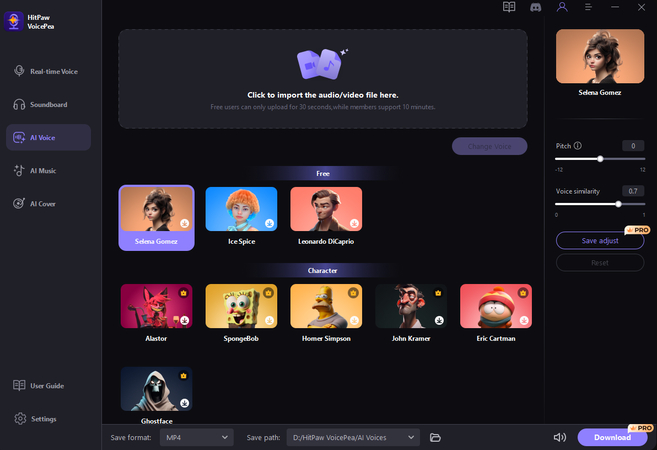

Step 2: Choose AI Voice and Sound Effects

Browse through the available effects, choose any character or celebrity you want, and click to apply.

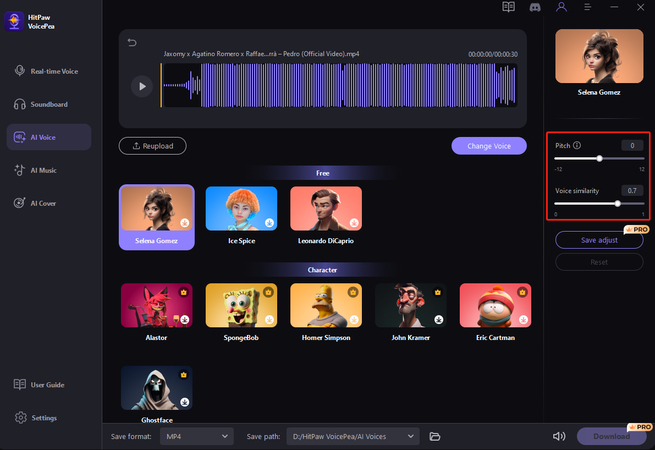

Step 3: Adjust AI Voice Settings

After selecting your preferred voice effect, you can adjust the pitch and voice similarity. After each parameter adjustment, click "Change Voice" for the changes to take effect.

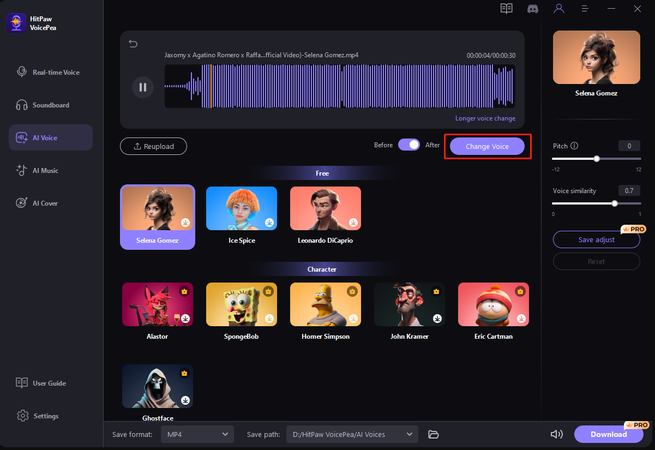

Step 4: Change Podcasts Voice and Export

After adjusting the voice settings, click "Change Voice" to start the AI conversion.

(*Final Reminder: This guide is for informational purposes only and does not constitute legal advice. Before using the relevant features, please make sure to:

① Consult a qualified legal professional in your jurisdiction to evaluate any legal risks that may apply;

② Carefully read and fully understand the HitPaw Terms of Service and HitPaw Privacy Policy;

③ Ensure that all generated content complies with applicable local, state, and federal laws and regulations, particularly those related to synthetic media and deepfake technologies.)

Bonus: Global Regulation and Legal Gaps in AI-Generated Audio

While individual countries have begun to address AI-generated content through their own legal systems, there is still no consistent global standard for regulating synthetic voices, especially those that imitate real individuals. This fragmented approach leaves significant legal gray areas for creators, platforms, and regulators alike.

1. United States: A Patchwork of State-Level Protections

In the U.S., there is no federal law that directly governs AI-generated voice cloning. Instead, protections fall under:

- State publicity laws, such as California Civil Code § 3344, which prohibits unauthorized commercial use of a person's "name, voice, signature, photograph, or likeness."

- Recent legislative proposals like the NO FAKES Act, which aims to federally restrict unauthorized AI-generated likenesses, including voices, used in audiovisual or sound recordings.

However, enforcement is inconsistent across states, and not all provide strong protections against non-commercial uses.

2. European Union: Moving Toward Transparency

The EU's Artificial Intelligence Act, passed in 2024, includes strict transparency requirements for "deepfakes" and synthetic content. Creators must clearly disclose when AI is used to generate audio or video content, especially if it imitates a real person.

In addition, EU personality rights, grounded in dignity and data protection, mean that synthetic voice use, even if non-commercial, could trigger legal action if it misrepresents or harms someone's public image.

3. China and Asia-Pacific: Tighter Controls Emerging

China recently issued guidelines under its Regulation on Deep Synthesis Internet Information Services, mandating clear labeling of AI-generated voices and holding platforms accountable for misuse. Japan and South Korea are also evaluating updates to copyright and personal rights laws to cover voice replication.

4. Legal Gaps and Enforcement Challenges

Despite these advances, challenges remain:

- Lack of global consistency: What's legal in one country may be actionable in another.

- Jurisdictional issues: A podcaster in one country may be sued in another if the synthetic voice violates rights internationally.

- Technological enforcement: Platforms still struggle to automatically detect and label AI-generated voices.

This legal patchwork makes it risky for podcasters to use voice cloning without thorough research. As AI tools become more accessible, so too must the regulatory frameworks designed to ensure ethical, transparent use, especially in media and public-facing content.

FAQ: AI Voice Synthesis in Podcasting

Q1. Will I get sued for mimicking a celebrity's voice with AI?

A1. Possibly, yes. If you use a celebrity's voice without permission, especially for commercial gain, it could violate their right of publicity or personality rights. Even parody may not protect you if it's too realistic or misleading.

Q2. Is it legal to use AI voices if I state it's synthetic?

A2. Disclosure helps reduce legal risk and is often required by law or platform policies. However, it doesn't automatically make the use legal, especially if the voice closely resembles a real person and is used commercially.

Q3. Can I monetize podcasts with AI-generated voices?

A3. You can monetize original or fictional AI voices, but using ones that mimic real people without consent is risky. Monetized content faces closer legal scrutiny than non-commercial content.

Q4. Is HitPaw VoicePea legal and safe to use?

A4. Yes, HitPaw VoicePea is a legal and ethical tool when used correctly. It allows users to modify or create voices without encouraging impersonation. Always use it with transparency and avoid cloning real individuals.

Conclusion: Respecting Identity in the Age of Synthetic Sound

AI voice technology is transforming podcasting, offering creators new ways to produce compelling, high-quality content. However, the ability to replicate real voices, especially those of public figures, comes with serious ethical and legal responsibilities. As laws evolve, podcasters must stay vigilant about how synthetic voices are used, particularly in commercial or potentially misleading contexts.

By disclosing AI usage, avoiding unauthorized imitations, and choosing ethical tools like HitPaw VoicePea, creators can explore AI's full potential without violating rights or trust.

Daniel Walker

Editor-in-Chief

This post was written by Editor Daniel Walker whose passion lies in bridging the gap between cutting-edge technology and everyday creativity. The content he created inspires the audience to embrace digital tools confidently.
