Unlocking Transparency with Explainable AI: Your Complete XAI Guide

As artificial intelligence continues to drive innovation across industries, a critical challenge has emerged: understanding how complex models reach their conclusions. Explainable AI (XAI) addresses this transparency gap by providing clear, human‑readable insights into machine learning decision processes. By demystifying "black box" algorithms, XAI fosters trust among users, speeds up debugging and model improvement, and supports regulatory compliance. In this article, we'll explore what XAI is, why it matters, the core techniques behind it, and practical steps for implementing explainable systems.

Part 1. What Is Explainable AI (XAI)?

Explainable AI, often abbreviated as XAI, refers to methods and tools designed to make the inner workings of machine learning and AI systems understandable to humans. Unlike opaque "black box" models, XAI provides transparent insights into how inputs are transformed into outputs. This transparency helps stakeholders such as developers, end users, and regulators trust, audit, and improve AI systems more effectively.

Benefits of Explainable AI

- Builds trust. Users gain confidence when they understand how an AI system arrives at its decisions.

- Improves model quality. Transparency helps developers identify flaws, biases, or inefficiencies in model behavior.

- Helps detect adversarial attacks. Unusual decision rationales can reveal inputs that were manipulated to fool the model.

- Safeguards against bias. Clear explanations make it easier to spot and correct biased training data or model design choices.

Limitations of Explainable AI

- Oversimplification. Explanations can misrepresent how a complex model actually behaves, hiding key nuances.

- Lower model performance. Restricting a system to inherently interpretable models can cost some predictive accuracy compared with black box alternatives.

- Training issues. Designing models that explain themselves often requires more advanced engineering and longer development cycles.

- Privacy risks. Revealing internal decision logic may expose sensitive or confidential data.

- Limited persuasiveness. Even transparent explanations may not fully convince skeptical users to rely on AI recommendations.

Use Cases for Explainable AI

Healthcare

In medical imaging, XAI helps radiologists see why an AI flagged a possible fracture or tumor, enabling clinicians to verify and integrate AI insights into patient care.

Financial

Banks and lenders use XAI to justify credit decisions, ensuring regulators and customers understand factors behind loan approvals or rejections.
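A counterfactual explanation is one way to make such a justification concrete. It tells a rejected applicant what would have had to change for the application to be approved.

The sketch below assumes a hypothetical linear credit score. The feature names, weights, and threshold are invented for illustration, and real lenders' models and reason-code practices will differ. The search nudges a single feature in fixed steps until the decision flips.

```python
# Hypothetical linear credit score: weights and threshold are illustrative, not from any real lender.
WEIGHTS = {"income_k": 0.04, "debt_ratio": -3.0, "years_employed": 0.2}
BIAS = -1.0
THRESHOLD = 0.0


def score(applicant: dict) -> float:
    """Weighted sum of the applicant's features plus a bias term."""
    return BIAS + sum(WEIGHTS[name] * applicant[name] for name in WEIGHTS)


def approved(applicant: dict) -> bool:
    """Approve when the score is strictly above the threshold."""
    return score(applicant) > THRESHOLD


def counterfactual(applicant: dict, feature: str, step: float, max_steps: int = 1000):
    """Move one feature by `step` until the decision flips.

    Returns the modified copy of the applicant, or None if no flip occurs within max_steps.
    """
    original_decision = approved(applicant)
    candidate = dict(applicant)  # never mutate the caller's record
    for _ in range(max_steps):
        candidate[feature] += step
        if approved(candidate) != original_decision:
            return candidate
    return None


applicant = {"income_k": 40, "debt_ratio": 0.5, "years_employed": 2}
print(approved(applicant))  # False
print(counterfactual(applicant, "income_k", 1))
# {'income_k': 53, 'debt_ratio': 0.5, 'years_employed': 2}
```

This applicant scores about -0.5 and is declined. Raising `income_k` one unit at a time first flips the decision at 53, which yields a statement of the form "approval would have required an income of 53k".

Practical counterfactual methods usually search over several features at once and penalize large or unrealistic changes. The single-feature loop here only illustrates the idea.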

Military

Defense organizations, such as DARPA, invest in XAI to ensure mission‑critical AI systems provide clear rationale for tactical recommendations, boosting operator confidence.

Part 2. Why Do We Need Explainable AI (XAI)?

As AI models become more sophisticated and integral to decision‑making, the need for transparency grows. Stakeholders, from policymakers to end users, demand understandable explanations to ensure fairness, safety, and accountability. Explainable AI bridges the gap between advanced algorithms and human oversight, enabling responsible adoption of AI technologies across critical domains.

- Regulatory compliance in sensitive industries

- Enhanced user adoption and trust

- Faster debugging and model improvement

- Clear accountability for automated decisions

- Ethical alignment and bias detection

- Increased resilience against adversarial attacks

Part 3. What Are Explainable AI (XAI) Techniques?

A variety of techniques exist to shed light on AI decision processes. Some approaches are model‑specific, tailored to certain algorithms, while others are model‑agnostic and can be applied broadly. By selecting the right combination of methods, organizations can achieve the desired level of transparency without unduly sacrificing performance.

- Decision trees. Represent decisions as a tree of if‑then rules that trace how inputs lead to outputs.

- Feature importance. Quantify which input variables most strongly influence the model's predictions.

- Counterfactual explanations. Illustrate "what‑if" scenarios to show how small input changes alter outcomes.

- Shapley additive explanations (SHAP). Attribute each feature's contribution to a prediction based on cooperative game theory.

- Local interpretable model‑agnostic explanations (LIME). Perturb inputs locally and observe output changes to build a simple local surrogate model.

- Partial dependence plots. Depict the average relationship between a feature and the predicted outcome by varying that feature while averaging the model's predictions over the observed values of the other variables.

- Visualization tools. Use charts, heatmaps, and dashboards to convey model reasoning in an intuitive way.
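SHAP is built on Shapley values. The sketch below computes them exactly by enumerating every coalition of features, which is only practical for a handful of features. Libraries such as shap rely on faster approximations or model-specific algorithms instead.

Two choices here are assumptions made for illustration:

- The toy model is hypothetical.
- "Absent" features are replaced by a single baseline vector. This is one common choice of value function, but not the only one.

```python
from itertools import combinations
from math import factorial


def model(x):
    """Hypothetical toy model: two linear terms plus an interaction between x[0] and x[2]."""
    return 3.0 * x[0] + 2.0 * x[1] + x[0] * x[2]


def shapley_values(f, x, baseline):
    """Exact Shapley values of f at x; features outside a coalition take their baseline value."""
    n = len(x)

    def value(coalition):
        # Evaluate f with coalition members at x and every other feature at the baseline.
        z = [x[i] if i in coalition else baseline[i] for i in range(n)]
        return f(z)

    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):  # coalition sizes 0 .. n-1 that exclude feature i
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                # Marginal contribution of feature i when it joins coalition s.
                phi[i] += weight * (value(s | {i}) - value(s))
    return phi


x, baseline = [1.0, 2.0, 3.0], [0.0, 0.0, 0.0]
phi = shapley_values(model, x, baseline)
print([round(v, 6) for v in phi])  # [4.5, 4.0, 1.5]
print(round(sum(phi), 6), model(x) - model(baseline))  # 10.0 10.0
```

Each linear term is credited entirely to its own feature. The interaction `x[0] * x[2]` is split evenly between features 0 and 2. The contributions add up to `model(x) - model(baseline)`, which is the additivity property SHAP relies on.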

Part 4. How Does Explainable AI (XAI) Work?

At its core, XAI works by capturing, interpreting, and communicating the logic of AI models. Techniques range from inherently interpretable algorithms to post‑hoc analysis tools that explain complex models. Beyond these methods, embedding explainability into AI governance, data practices, and model design ensures transparency from start to finish.
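Partial dependence is a good example of a post-hoc, model-agnostic tool: it needs nothing beyond the ability to call the model. The sketch below assumes a hypothetical black box function and a tiny made-up dataset.

For each grid value, it overwrites one feature in every row and averages the resulting predictions. The other features are not held at a single fixed value.

```python
def black_box_model(x):
    """Hypothetical opaque model: the effect of x[0] depends on x[1]."""
    return x[0] * x[1] + 2.0 * x[1]


def partial_dependence(f, data, feature, grid):
    """Average prediction when `feature` is forced to each grid value across all rows of `data`."""
    curve = []
    for value in grid:
        total = 0.0
        for row in data:
            z = list(row)       # copy so the original dataset is untouched
            z[feature] = value  # intervene on one feature only
            total += f(z)
        curve.append(total / len(data))
    return curve


DATA = [[0.0, 1.0], [1.0, 2.0], [2.0, 3.0]]  # made-up rows for illustration
print(partial_dependence(black_box_model, DATA, feature=0, grid=[0.0, 1.0, 2.0]))
# [4.0, 6.0, 8.0]
```

The mean of `x[1]` over the three rows is 2, so the curve rises by 2 for each unit of `x[0]`. The averaging also hides the fact that the slope differs from row to row. This is why per-row curves are sometimes plotted alongside the average.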

Techniques in Action

- Decision trees. Map the complete decision pathway as a branching structure, showing each test and outcome.

- Feature importance. Rank variables to highlight which data points drive predictions.

- Counterfactual explanations. Offer scenario‑based insights into how slight input shifts change results.

- Shapley additive explanations. Assign each feature a contribution so that the contributions, added to a baseline (average) prediction, sum exactly to the model's output.

- Local interpretable model‑agnostic explanations. Fit simple linear models around individual predictions to spotlight local behavior.

- Partial dependence plots. Chart how the average prediction changes as one feature is varied across its range.

- Visualization tools. Present model reasoning through interactive graphs and user‑friendly dashboards.
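The local-surrogate idea behind LIME can be sketched in plain Python. This is a simplified illustration, not the lime package's implementation, which differs in how it samples, weights, and fits. The sketch:

1. Draws Gaussian perturbations around one instance.
2. Weights each perturbation with an exponential proximity kernel.
3. Fits weighted least squares.

The black box function and all parameter defaults (`scale`, `kernel_width`, `n_samples`) are assumptions chosen for the example.

```python
import math
import random


def black_box(x):
    """Hypothetical opaque model that is nonlinear in both features."""
    return math.sin(x[0]) + x[1] ** 2


def solve(a, b):
    """Solve the square system a @ w = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]  # augmented matrix [a | b]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    w = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        w[r] = (m[r][n] - sum(m[r][c] * w[c] for c in range(r + 1, n))) / m[r][r]
    return w


def local_surrogate(f, x, n_samples=500, scale=0.1, kernel_width=0.25, seed=0):
    """Fit a proximity-weighted linear model to f around x; returns [intercept, coef_1, ...]."""
    rng = random.Random(seed)
    d = len(x)
    xtwx = [[0.0] * (d + 1) for _ in range(d + 1)]  # accumulates X^T W X
    xtwy = [0.0] * (d + 1)                          # accumulates X^T W y
    for _ in range(n_samples):
        z = [xi + rng.gauss(0.0, scale) for xi in x]       # perturbed sample near x
        dist2 = sum((zi - xi) ** 2 for zi, xi in zip(z, x))
        weight = math.exp(-dist2 / kernel_width ** 2)      # closer samples count more
        row = [1.0] + z                                    # intercept column plus features
        y = f(z)
        for i in range(d + 1):
            xtwy[i] += weight * row[i] * y
            for j in range(d + 1):
                xtwx[i][j] += weight * row[i] * row[j]
    return solve(xtwx, xtwy)


coef = local_surrogate(black_box, [0.0, 1.0])
print([round(c, 2) for c in coef])  # [intercept, slope for x[0], slope for x[1]]
```

Around `x = [0.0, 1.0]`, the two feature coefficients come out close to 1 and 2. Those are the local slopes of `sin(x[0])` and `x[1] ** 2` at that point, even though the black box itself is nonlinear.

The `scale` parameter controls how local the explanation is. Smaller perturbations track the slope at the instance more closely, while larger ones average over a wider neighborhood.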

Core Approaches to XAI System Design

- Establish an AI governance board to define explainability standards and ensure compliance.

- Curate unbiased, high‑quality training data; remove irrelevant or sensitive attributes before training.

- Design models that annotate each prediction with its data sources and reasoning steps.

- Favor layered, interpretable algorithms or augment complex models with explanation modules.
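One lightweight way to have a model annotate each prediction with its reasoning steps is to return the decision path alongside the label. The sketch below makes three assumptions, all hypothetical:

- It uses a small hand-written decision tree with invented credit features and thresholds.
- A `data_source` tag stands in for provenance metadata.
- The file name `applications_2024.csv` is made up.

In practice, the tree would come from training and provenance would be recorded by your data pipeline.

```python
from dataclasses import dataclass, field
from typing import Union


@dataclass
class Leaf:
    label: str


@dataclass
class Node:
    feature: str
    threshold: float
    left: "Tree"   # branch taken when value <= threshold
    right: "Tree"  # branch taken when value > threshold


Tree = Union[Leaf, Node]


@dataclass
class ExplainedPrediction:
    label: str
    data_source: str  # hypothetical provenance tag supplied by the caller
    steps: list = field(default_factory=list)


def predict_with_trace(tree: Tree, record: dict, data_source: str) -> ExplainedPrediction:
    """Walk the tree, recording every test evaluated on the way to a leaf."""
    steps = []
    node = tree
    while isinstance(node, Node):
        value = record[node.feature]
        if value <= node.threshold:
            steps.append(f"{node.feature} = {value} <= {node.threshold}")
            node = node.left
        else:
            steps.append(f"{node.feature} = {value} > {node.threshold}")
            node = node.right
    return ExplainedPrediction(label=node.label, data_source=data_source, steps=steps)


# Hypothetical tree: thresholds are illustrative only.
TREE = Node("debt_ratio", 0.45,
            left=Leaf("approve"),
            right=Node("income_k", 80, left=Leaf("decline"), right=Leaf("approve")))

result = predict_with_trace(TREE, {"debt_ratio": 0.6, "income_k": 95},
                            data_source="applications_2024.csv")  # hypothetical source name
print(result.label)  # approve
for step in result.steps:
    print(step)
# debt_ratio = 0.6 > 0.45
# income_k = 95 > 80
```

Keeping the trace as plain strings makes it easy to log or show to a reviewer. A production system would more likely store structured fields so explanations can be queried and audited.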

Further Reading: Enhance Your Videos with HitPaw VikPea Video Enhancer

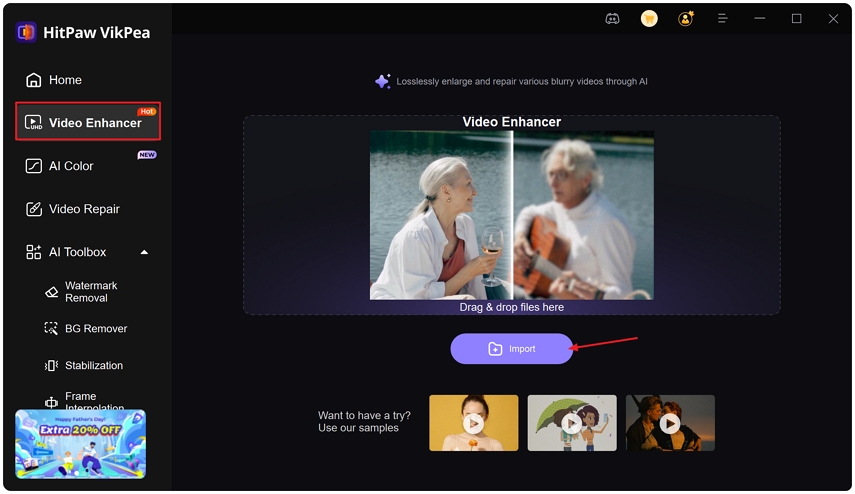

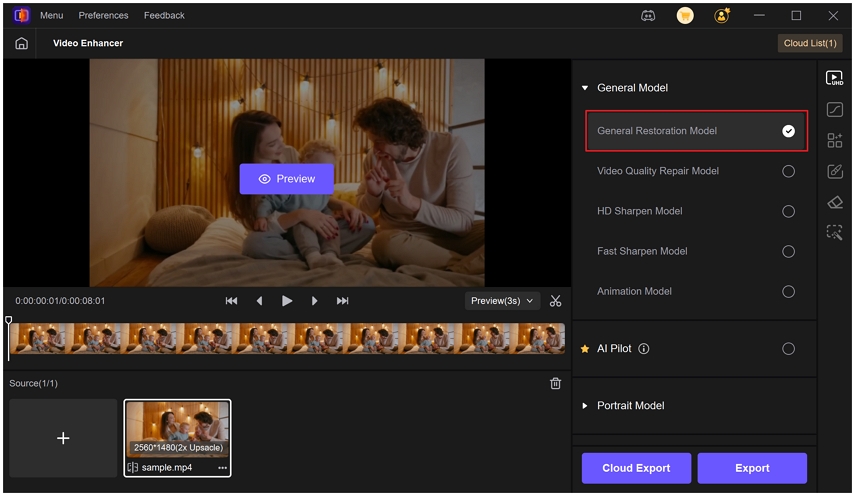

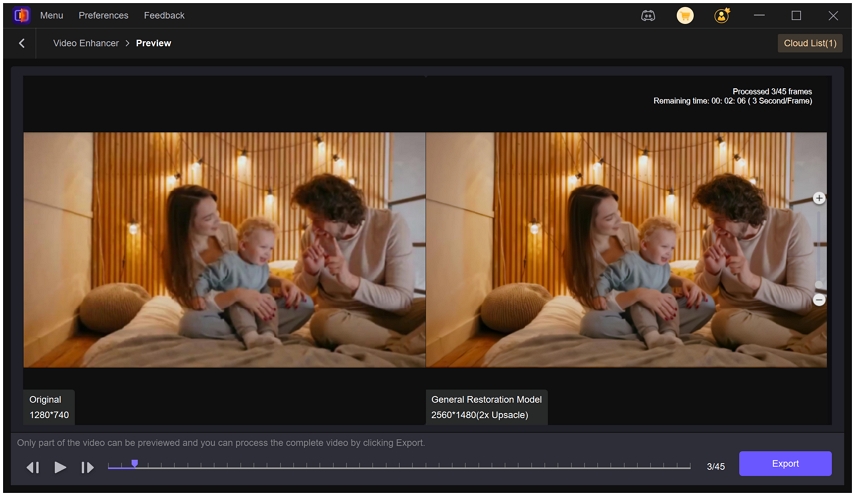

While XAI empowers transparency in AI models, HitPaw VikPea video enhancer harnesses AI to elevate your video quality. By offering multiple specialized models, it restores clarity, sharpens details, and upscales footage to modern resolutions. Whether you're working with old family videos or low‑res web clips, VikPea's intuitive interface and powerful AI pipelines make professional‑grade enhancement accessible to everyone.

- AI‑powered general model restores overall video clarity and color fidelity.

- Sharpen model enhances edge definitions for crisp, well‑defined visuals.

- Portrait model improves facial detail and smooths textures in human subjects.

- Video quality repair model fixes compression artifacts and noise in low‑quality clips.

- Batch processing enables simultaneous enhancement of multiple videos with a single click.

- 4K and 8K upscaling delivers ultra‑high‑definition output for modern displays.

Step 1. Download and launch. Install HitPaw VikPea and open it on your computer. Drag and drop your video or click "Choose file".

Step 2. Select AI model. Choose from General, Sharpen, Portrait, or Video Quality Repair based on your enhancement goals.

Step 3. Export settings. Pick your desired resolution (up to 8K), preview the result, and click "Export" to save your enhanced video.

Conclusion

Explainable AI (XAI) transforms opaque algorithms into transparent, accountable systems that build trust, improve performance, and guard against bias and adversarial threats. By understanding its benefits, methods, and best practices, organizations can deploy AI responsibly and effectively. And whether you're refining AI models or elevating multimedia content, tools like HitPaw VikPea Video Enhancer showcase the power and versatility of modern AI solutions.


Daniel Walker

Editor-in-Chief

This post was written by Editor Daniel Walker, whose passion lies in bridging the gap between cutting-edge technology and everyday creativity. His content inspires readers to embrace digital tools confidently.
