ComfyUI WAN2.2: Features, Workflows, and Video Generation Guide

AI video generation has moved far beyond brief experiments, and ComfyUI WAN2.2 is a significant advancement. Built on ComfyUI's node-based system, it supports text-to-video, image-to-video, and hybrid workflows that produce high-quality, temporally coherent videos that adhere to prompts. Its main innovation is a Mixture of Experts (MoE) architecture that assigns work to specialized models to deliver smoother motion, cinematic effects, and greater semantic accuracy. This makes WAN2.2 suitable for storytelling, animation, marketing, and experimental pipelines. To simplify more intricate configurations, many developers rely on ComfyUI WanVideoWrapper, and post-processing software such as HitPaw VikPea is frequently used to improve sharpness, color balance, and the overall visual result.

Part 1: What is ComfyUI WAN2.2 and Core Capabilities

Overview of ComfyUI WAN2.2 Model

WAN2.2 is a next-generation AI video model adapted to ComfyUI's modular workflow system. Unlike traditional frame-by-frame generators, WAN2.2 uses a Mixture of Experts (MoE) architecture that delegates different generation tasks to specialized internal components. It also uses dual noise experts, which greatly improve frame continuity and motion flow across a video sequence.

This architectural design enables WAN2.2 to handle complex motion patterns, cinematic camera movement, and elaborate scene composition. The model is especially strong at preserving semantic consistency, i.e., keeping characters, environments, and actions consistent with the original prompt over time. As a result, videos look more purposeful and film-like rather than disorganized and discontinuous.
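To make the dual-expert idea more concrete, the sketch below shows one way a denoising schedule could be divided between two experts, one for the early, noisier steps and one for the later, cleaner steps. It is only an illustration under stated assumptions, not WAN2.2's actual implementation: the function name `assign_experts`, the expert labels, and the `boundary` fraction are all hypothetical.

```python
from typing import List


def assign_experts(total_steps: int, boundary: float = 0.5) -> List[str]:
    """Label each denoising step with the expert that would handle it.

    Early steps (more noise) go to one expert, later steps (less noise) to
    the other. `boundary` is a hypothetical fraction of the schedule at which
    the hand-off happens; it is not a documented WAN2.2 value.
    """
    if total_steps <= 0:
        raise ValueError("total_steps must be positive")
    if not 0.0 <= boundary <= 1.0:
        raise ValueError("boundary must be between 0 and 1")

    # Index of the first step handled by the low-noise expert.
    switch_step = round(total_steps * boundary)
    return [
        "high_noise_expert" if step < switch_step else "low_noise_expert"
        for step in range(total_steps)
    ]


if __name__ == "__main__":
    for step, expert in enumerate(assign_experts(total_steps=8, boundary=0.5)):
        print(step, expert)
```

Splitting the schedule this way lets each expert specialize: one shapes the overall layout and motion while the image is still mostly noise, and the other refines detail once the structure is settled.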

WAN2.2 Versions in ComfyUI

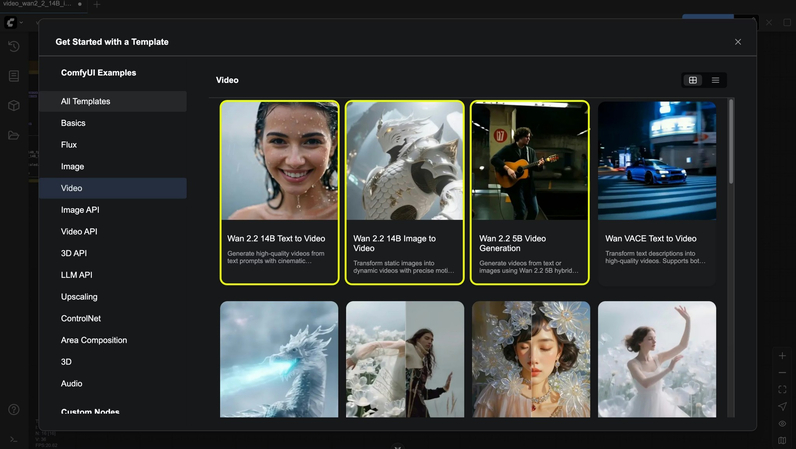

In ComfyUI, WAN2.2 offers several workflow types to suit different creative requirements. The most frequently used are the text-to-video, image-to-video, and first-last frame interpolation workflows. Hybrid designs that combine text prompts with image constraints are also possible and balance creative freedom with visual control.

This flexibility lets users select a workflow based on project objectives, whether they are creating new content or animating existing images.

Part 2: Installing and Setting Up ComfyUI WAN2.2

ComfyUI Requirements

To run WAN2.2, use the latest version of ComfyUI, because older versions can cause incompatibility problems or lack some features. Hardware-wise, a modern GPU is a must. WAN2.2 can work on GPUs with approximately 12 GB of VRAM, but 16 GB or more is more consistently stable and handles larger video outputs. NVIDIA GPUs are a common choice because of their reliable CUDA support. Fast SSD storage and sufficient system RAM also help reduce bottlenecks when generating large frame sequences.
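The VRAM guidance above can be turned into a quick self-check. The following is a minimal sketch: the tier names, the `vram_tier` and `detect_vram_gb` helpers, and the half-gigabyte tolerance (cards often report slightly less than their nominal capacity) are assumptions made for this example. The detection helper relies on PyTorch if it happens to be installed and simply returns `None` otherwise.

```python
from typing import Optional


def vram_tier(vram_gb: float, tolerance_gb: float = 0.5) -> str:
    """Classify a GPU using the rough 12 GB / 16 GB guidance.

    `tolerance_gb` is an assumption: reported memory is often a little
    below the nominal size printed on the box.
    """
    if vram_gb < 0:
        raise ValueError("vram_gb must not be negative")
    if vram_gb + tolerance_gb >= 16:
        return "comfortable"
    if vram_gb + tolerance_gb >= 12:
        return "workable"
    return "below guidance"


def detect_vram_gb() -> Optional[float]:
    """Return total memory of GPU 0 in GiB, or None if it cannot be read."""
    try:
        import torch  # optional dependency; not required by this sketch
    except ImportError:
        return None
    if not torch.cuda.is_available():
        return None
    return torch.cuda.get_device_properties(0).total_memory / (1024 ** 3)


if __name__ == "__main__":
    detected = detect_vram_gb()
    if detected is None:
        print("Could not detect a CUDA GPU; pass your VRAM size to vram_tier().")
    else:
        print(f"{detected:.1f} GiB detected: {vram_tier(detected)}")
```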

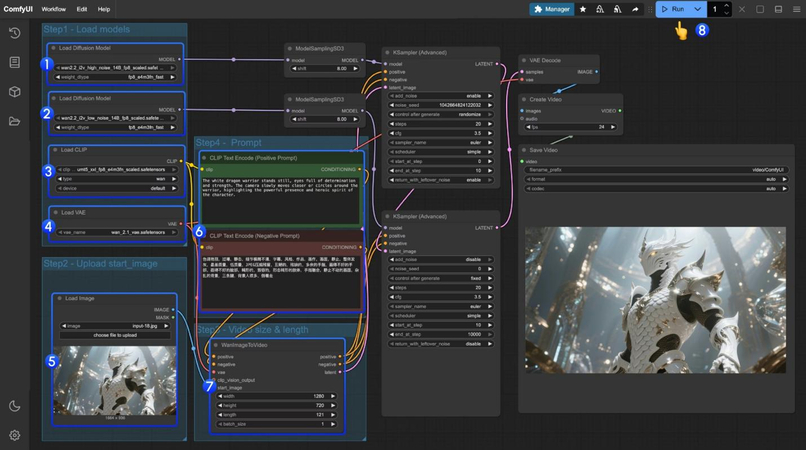

Workflow Templates and Model Files

Most users start by importing ready-made WAN2.2 workflow templates into ComfyUI. These templates include all the necessary nodes for sampling, decoding, and frame sequencing, which minimizes setup errors and saves time. WAN2.2 workflows depend on several model files, such as the main diffusion model, a compatible VAE, and text encoders. Text-to-video and image-to-video workflows are normally kept separate. Once the files are placed in the right directories, workflows load consistently and are ready to be customized.
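Because a missing model file is a common reason a template fails to load, it can help to check the model folders before opening a workflow. The sketch below assumes a typical ComfyUI layout with `diffusion_models`, `vae`, and `text_encoders` subfolders under a `models` directory; these folder names and the example path are assumptions, so adjust them to match your installation. It only lists what is present and does not know which specific files a given template needs.

```python
from pathlib import Path
from typing import Dict, List

# Assumed subfolder names for the three kinds of model files mentioned above.
EXPECTED_SUBDIRS = ("diffusion_models", "vae", "text_encoders")


def find_model_files(models_root: Path) -> Dict[str, List[str]]:
    """Map each expected subfolder to the sorted file names found inside it."""
    found: Dict[str, List[str]] = {}
    for name in EXPECTED_SUBDIRS:
        folder = models_root / name
        if folder.is_dir():
            found[name] = sorted(p.name for p in folder.iterdir() if p.is_file())
        else:
            found[name] = []
    return found


def missing_categories(models_root: Path) -> List[str]:
    """Return the subfolders that are absent or contain no files."""
    return [name for name, files in find_model_files(models_root).items() if not files]


if __name__ == "__main__":
    root = Path("ComfyUI/models")  # hypothetical location; change as needed
    missing = missing_categories(root)
    if missing:
        print("No model files found in:", ", ".join(missing))
    else:
        print("All expected model folders contain files.")
```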

WanVideoWrapper Integration

To make workflows easier to manage, many creators use ComfyUI WanVideoWrapper. It groups frequently changed parameters, keeps node graphs tidy, and standardizes behavior across WAN2.2 workflows. This enables quicker iteration, simpler testing, and easier use of community presets for both beginners and advanced users.

Part 3: Core WAN2.2 Workflows in ComfyUI

1. Text-to-Video (T2V) Workflow

The text-to-video workflow produces entire video sequences from written instructions. Once a WAN2.2 T2V template has been loaded, the user sets parameters such as the number of frames and the resolution, and writes prompts that describe the scene, motion, camera movement, and style. WAN2.2 translates this description into smoothly flowing motion with coherent lighting and subject behavior, and often yields cinematic results. T2V is well suited to conceptual visuals, narrative concepts, and creative experimentation.
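Frame count and resolution together determine how long a clip runs and how much data the model has to generate. The sketch below groups those settings in a small, hypothetical `T2VSettings` class and derives the clip duration from them. The class, its field names, and the example values (including the frame rate) are assumptions for illustration rather than settings taken from a WAN2.2 template.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class T2VSettings:
    """Hypothetical container for the values a user sets in a T2V template."""

    prompt: str
    width: int
    height: int
    num_frames: int
    fps: int  # playback frame rate; an assumed example parameter

    def __post_init__(self) -> None:
        if min(self.width, self.height, self.num_frames, self.fps) <= 0:
            raise ValueError("width, height, num_frames and fps must be positive")

    @property
    def duration_seconds(self) -> float:
        """Clip length implied by the frame count and frame rate."""
        return self.num_frames / self.fps

    @property
    def total_pixels(self) -> int:
        """Pixels generated across the whole clip, a rough proxy for workload."""
        return self.width * self.height * self.num_frames


if __name__ == "__main__":
    settings = T2VSettings(
        prompt="A slow dolly shot through a rainy neon street at night",
        width=832,
        height=480,
        num_frames=81,
        fps=16,
    )
    print(f"{settings.duration_seconds:.2f} s, {settings.total_pixels:,} pixels")
```

Doubling either the frame count or the pixel area roughly doubles the amount of data to generate, which is why longer or higher-resolution clips need more VRAM and time.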

2. Image-to-Video (I2V) Workflow

The image-to-video workflow begins with a still image that serves as a visual anchor. Guided by a text prompt, WAN2.2 animates the image over time rather than generating from pure noise. It works best where character identity, branding, or specific visual details matter, so it is often used to animate portraits, illustrations, and product visuals.

3. First-Last Frame (Fun InP) Workflow

This workflow uses two images to specify the beginning and the end of a video. WAN2.2 generates the in-between frames, creating a smooth transition. It is effective for transformations, comparisons, and structured, story-driven animations.

4. Optional Advanced Workflows

Beyond the standard workflows, WAN2.2 can be extended with more sophisticated ComfyUI configurations. These include control-based workflows, which are guided by pose or depth data, and experimental audio-driven pipelines, in which sound can control movement or timing. Although these workflows are less beginner-friendly, they illustrate the flexibility of WAN2.2 within ComfyUI's modular system.

Part 4: Practical Usage Scenarios of WAN2.2 in ComfyUI

1. Creative Video Generation

WAN2.2 is popular for artistic and narrative projects. Creators use its cinematic capabilities to produce short films, animations, and experimental visuals that would otherwise be time-consuming to draw by hand or shoot in real life.

2. Content Marketing and Social Media

ComfyUI WAN2.2 can be used for promotional clips, social media visuals, and brand storytelling content, since it speeds up content creation. Because the workflows are prompt-driven, they can be iterated quickly with minimal or no production infrastructure.
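Prompt-driven iteration can also be scripted. The sketch below takes a workflow exported from ComfyUI in API format (a dictionary of node ids, each with a `class_type` and `inputs`), swaps the text of one prompt node, and prepares a request for a local ComfyUI server's `/prompt` endpoint. The node id `"6"`, the `text` input name, and the server address are assumptions that depend on your own workflow and setup; the helper names are hypothetical. The request is only built here, not sent.

```python
import copy
import json
import urllib.request
from typing import Any, Dict, List

Workflow = Dict[str, Dict[str, Any]]


def with_prompt(workflow: Workflow, node_id: str, text: str) -> Workflow:
    """Return a copy of an API-format workflow with one text input replaced."""
    if node_id not in workflow:
        raise KeyError(f"node {node_id!r} not found in workflow")
    patched = copy.deepcopy(workflow)
    patched[node_id]["inputs"]["text"] = text
    return patched


def prompt_variants(workflow: Workflow, node_id: str, prompts: List[str]) -> List[Workflow]:
    """Create one workflow copy per prompt, leaving the original untouched."""
    return [with_prompt(workflow, node_id, prompt) for prompt in prompts]


def build_queue_request(
    workflow: Workflow, server: str = "http://127.0.0.1:8188"
) -> urllib.request.Request:
    """Prepare (but do not send) a POST request that would queue the workflow."""
    body = json.dumps({"prompt": workflow}).encode("utf-8")
    return urllib.request.Request(
        f"{server}/prompt",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


if __name__ == "__main__":
    # Tiny stand-in workflow; a real export contains many more nodes.
    base = {"6": {"class_type": "CLIPTextEncode", "inputs": {"text": "placeholder"}}}
    ideas = ["Product spinning on a marble table", "Product floating in soft studio light"]
    for variant in prompt_variants(base, "6", ideas):
        request = build_queue_request(variant)
        print(request.full_url, variant["6"]["inputs"]["text"])
        # To actually queue it: urllib.request.urlopen(request)
```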

3. Animation and Custom Control

Animators benefit from WAN2.2's first-last frame and control-based workflows, which allow for precise manipulation of motion and transitions. These features make it easier to integrate AI-generated footage into larger animation pipelines.

4. Audio-Driven Video

Audio-driven workflows open up new creative opportunities by synchronizing visuals with music, dialogue, or performance-based work. This is especially attractive to musicians, digital artists, and experimental media artists.

Part 5: Enhance Videos Created by ComfyUI WAN2.2 with VikPea

Even with sophisticated models such as WAN2.2, AI-generated videos often need post-processing. Typical problems include slight flickering, soft detail, uneven colors, and visible noise in darker scenes. Fixing these issues helps the output meet professional standards.

HitPaw VikPea addresses these problems with AI-driven tools designed specifically for video enhancement. Its features refine raw AI-generated footage to a level of visual polish suitable for professional use.

Key Features of HitPaw VikPea

- AI Video Generator: Create videos from text, images, or creative effects.

- AI Video Repair: Fix corrupted, damaged, or unplayable video files.

- Colorize Video: Colorize faded or black-and-white videos naturally.

- Enhance Video to 4K: Upgrade video resolution to 4K for sharper, clearer, and more professional-looking content.

- User-Friendly Operation: Intuitive interface requiring minimal technical expertise for professional results.

- Batch processing: Enables enhancement of multiple videos simultaneously.

- Preview functionality: Allows real-time comparison between original and enhanced footage.

How to Enhance AI Generated Videos by ComfyUI WAN2.2 with HitPaw VikPea

Step 1: Download and Install

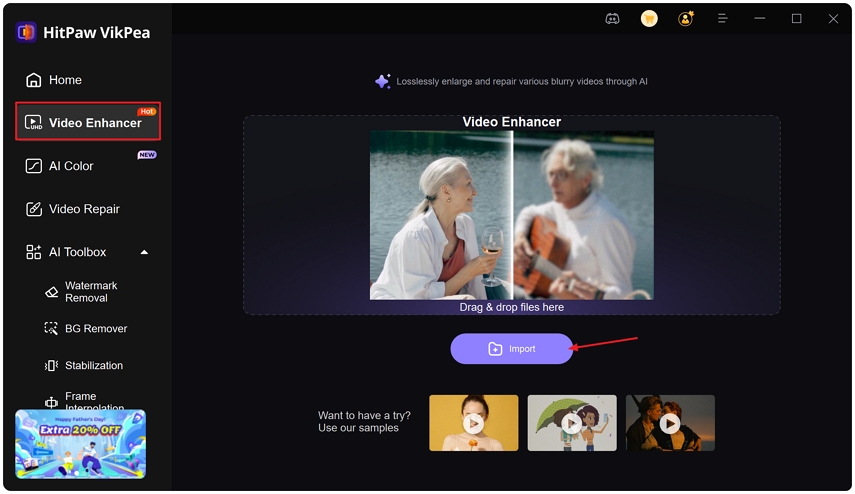

Go to the official website and download HitPaw VikPea. Once it is installed, launch the application and log in if required.

Step 2: Import Your Footage into Video Enhancer

Open the Video Enhancer module from the left panel, then click the import icon to add your video file to the interface.

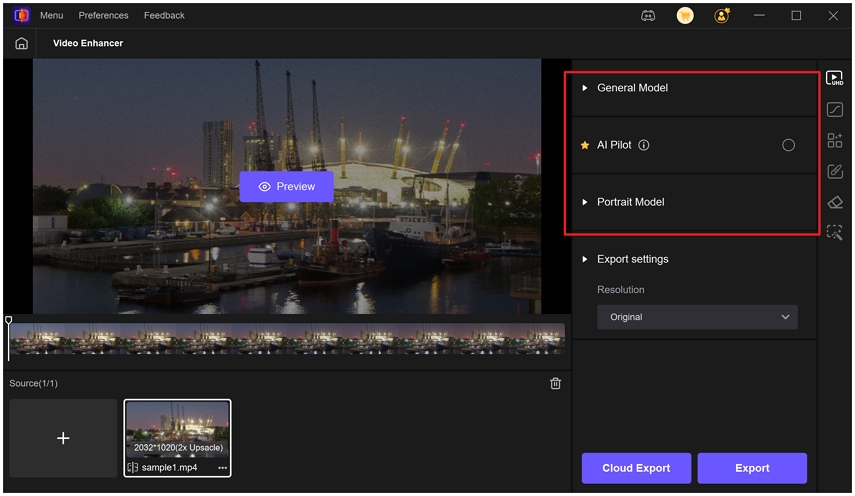

Step 3: Choose the Appropriate AI Model

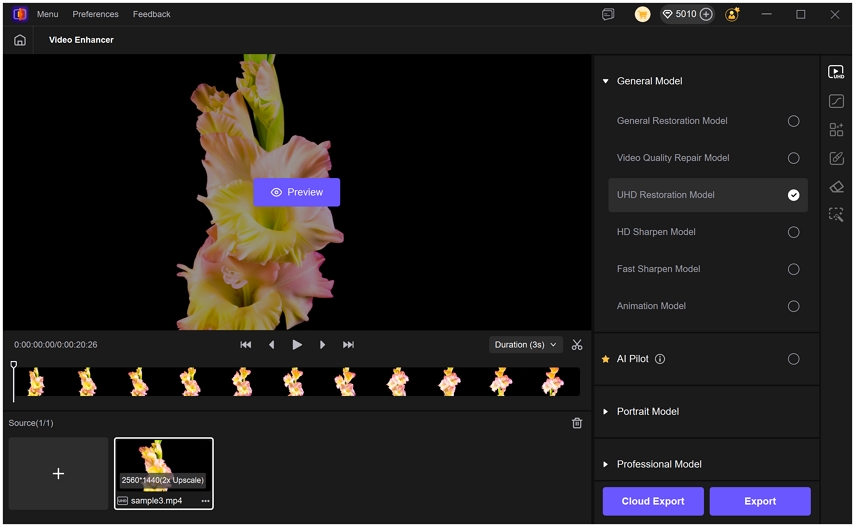

Along with a general model that applies overall enhancement, there are several specialized models for particular enhancement needs.

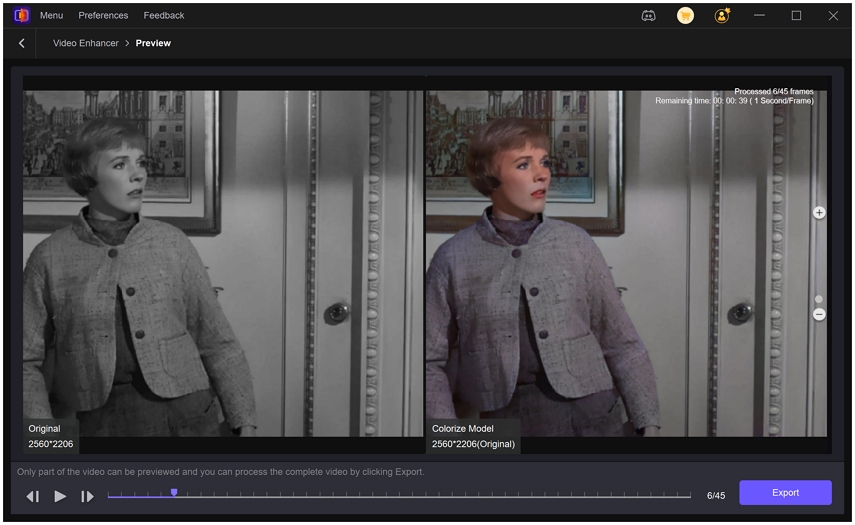

The Colorize Model adds realistic color to black-and-white or faded videos.

You can also apply other models, such as the UHD Restoration Model, which further improves the quality of higher-resolution footage such as 720p video, enhancing clarity and restoring sharpness.

Choose your preview length (3 or 5 seconds). If you only need to enhance part of the video, use the Cut tool. Then set the output resolution and format.

Tip: If you are not sure which model to use, try AI Pilot. It automatically analyzes your video and recommends the most suitable enhancement.

Step 4: Preview and Save

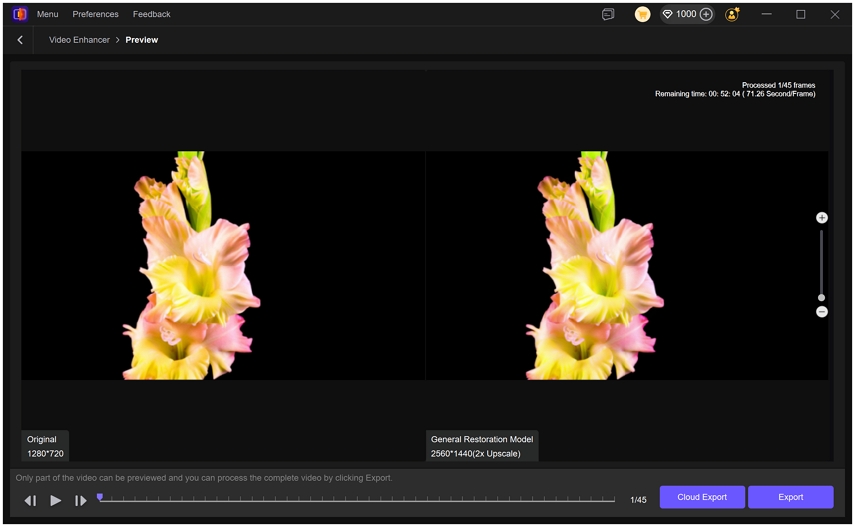

After making all necessary adjustments, click on Preview to compare the before-and-after results of your video. This lets you clearly see the difference between the original and the enhanced version before finalizing.

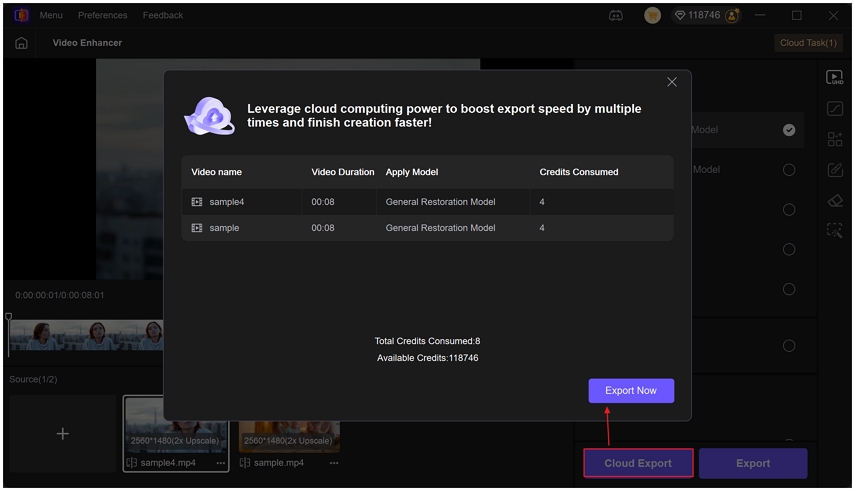

Step 5: Export or Cloud Export

Once satisfied with the preview, select Export or Cloud Export to save your video. Enjoy enhanced videos with stunning clarity.

Workflow Integration Summary

The workflow is simple: create the video in ComfyUI, export it, enhance it in VikPea, and export the enhanced version. The overall result is higher visual quality, smoother playback, and a more professional look, suitable for promotion, sales, or portfolio work.

FAQs about ComfyUI WAN2.2

A modern GPU with at least 12 GB of VRAM is advised, and 16 GB or more gives better performance with long videos.

WAN2.2 is typically available under the Apache 2.0 license, so it can be used commercially. The terms of each specific model should still be reviewed to ensure compliance.

Text-to-video generates video purely from prompts, while image-to-video animates an existing image, which provides stronger visual consistency.

Yes, beginners can use WAN2.2. They should start with templates and ComfyUI WanVideoWrapper, and consult community resources to learn.

Conclusion

ComfyUI WAN2.2 has become a strong tool for high-quality AI video creation. Its advanced architecture, customizable workflows, and high semantic precision let creators generate cinematic results right in ComfyUI. The ComfyUI WAN2.2 workflow options can be used for storytelling, marketing, and animation, and offer extensive creative opportunities.

Developers can refine AI-generated video with HitPaw VikPea until it is polished and professional enough for real-world use.
