Wan AI Wan 2.2: The Next Evolution in Multimodal AI Creation

Wan 2.2 marks a milestone in multimodal AI creativity. Built by Alibaba's Wan AI team, this open-source model uses a Mixture-of-Experts (MoE) architecture with 27 billion total and 14 billion active parameters to generate cinematic-quality video at up to 720p, and its compact TI2V-5B variant produces 720p video at 24 fps on consumer GPUs like the RTX 4090. It supports both text-to-video and image-to-video workflows, uses a high-compression VAE for efficiency, and benefits from substantially more training data than Wan 2.1.

In this article, you'll explore Wan 2.2's key breakthroughs, creative enhancements, core applications, usage guide, and recommended video-enhancement tools: everything you need to harness its full potential.

Part 1. Introduction to Wan 2.2: What are the Technical Breakthroughs?

Wan 2.2 represents a major leap forward from Wan 2.1 in multimodal AI creation. It blends advanced architectural innovations and creative capabilities into a cohesive toolkit, enabling the generation of cinematic-quality video from text or images, all with efficient resource requirements and open-source accessibility.

Technical Breakthroughs in Wan 2.2

- Multimodal input: Supports text-to-video (T2V - A14B) and image-to-video (I2V - A14B), enabling video generation from textual prompts or uploaded images.

- High-resolution video generation: Produces 720p HD video at 24 fps (480p is also supported). For lower-end hardware, the lightweight T2V-1.3B model from Wan 2.1 runs on consumer GPUs with ~8 GB VRAM and generates a 5-second 480p video on an RTX 4090 in approximately 4 minutes.

- Audio-visual lip sync: The Wan2.2-S2V (speech-to-video) variant animates characters from an input audio track with precise lip-shape synchronization, and smart prompt extension helps produce more vivid, lifelike video experiences.

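- Architectural advantages: Built with ~27 billion total parameters (14 billion active per step) using a Mixture-of-Experts (MoE) design, Flash Attention 3, and a high-compression VAE (~64x). In the A14B models, the MoE splits the denoising process between two experts: a high-noise expert handles the early steps and overall layout, and a low-noise expert refines details in the later steps. Because only one expert runs at each step, inference costs stay close to those of a single 14B model.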
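To make the two-expert split concrete, here is a toy Python sketch of stage-based routing. It is not Wan 2.2's code: the experts are hypothetical stand-in functions, noise levels run on an assumed 0 to 1 scale, and the `boundary` value is purely illustrative (the official description ties the real switch point to the signal-to-noise ratio).

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

# Hypothetical stand-in for one expert network: (latent, noise_level) -> updated latent.
Expert = Callable[[float, float], float]


@dataclass
class TwoExpertDenoiser:
    """Toy sketch of stage-based MoE routing; not Wan 2.2's actual implementation."""
    high_noise_expert: Expert  # runs on early, noisy steps (overall layout)
    low_noise_expert: Expert   # runs on late, cleaner steps (fine detail)
    boundary: float            # hypothetical switch point on a 0..1 noise scale
    calls: Dict[str, int] = field(default_factory=lambda: {"high": 0, "low": 0})

    def pick(self, t: float) -> str:
        """Name the expert that handles noise level t (1.0 = pure noise)."""
        return "high" if t >= self.boundary else "low"

    def run(self, x: float, noise_levels: List[float]) -> float:
        """Denoise x over the schedule, calling exactly one expert per step."""
        for t in noise_levels:
            name = self.pick(t)
            expert = self.high_noise_expert if name == "high" else self.low_noise_expert
            self.calls[name] += 1
            x = expert(x, t)
        return x


def toy_schedule(num_steps: int) -> List[float]:
    """Evenly spaced noise levels from 1.0 down toward 0."""
    return [1.0 - i / num_steps for i in range(num_steps)]


if __name__ == "__main__":
    denoiser = TwoExpertDenoiser(
        high_noise_expert=lambda x, t: x * 0.5,
        low_noise_expert=lambda x, t: x * 0.9,
        boundary=0.5,
    )
    denoiser.run(1.0, toy_schedule(10))
    print(denoiser.calls)  # {'high': 6, 'low': 4}
```

With this ten-step toy schedule, the six noisiest steps go to the high-noise expert and the remaining four to the low-noise expert. Because each step calls exactly one expert, per-step compute matches one expert even though the model stores both.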

Part 2. Key Functions and Highlights of Wan AI - Wan 2.2

Wan 2.2 delivers more than impressive technical specs: it refines creative control across image, video, effects, and workflows. With cinematic aesthetics, optimized motion consistency, custom training support, cross-modal fluency, and smarter tools, it enables creators in fields from illustration to animation to produce higher-quality content with greater ease and precision.

Image Generation: More Refined, More Controllable

Wan 2.2 enhances detail rendering, especially in textures like skin, fabric, and landscapes, while offering precise style control (lighting, saturation, composition). Smart composition suggestions further help optimize layouts, making it ideal for fast, project-specific character or concept art.

Video Generation: Smoother Motion Effects

Motion in Wan 2.2 is more fluid and realistic. It notably improves temporal consistency to reduce flickering, supports smoother transitions between scenes, and handles longer, higher-resolution clips more efficiently, making it well suited to short-form creators and storyboard-driven workflows.

Special Effects: More Realistic and Diverse

Wan 2.2 supports cinematic-level lighting controls, realistic particle effects like smoke or fire, stylized filter presets, and automatic effect suggestions based on prompts. These features are especially useful for game developers or multimedia artists seeking dramatic visuals.

LoRA Training: More Efficient and Precise

Wan 2.2 includes streamlined support for LoRA fine-tuning. It enables faster training, few-shot learning with only 10-20 images, and more intuitive visual interfaces for parameter tuning, a good fit for studios that need consistent art-style generation across projects.
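LoRA itself is a general technique: instead of updating a full weight matrix W, it trains two small matrices B and A and adds their scaled product to W. The plain-Python sketch below shows the parameter savings and the standard merge W' = W + (alpha / rank) * B @ A; the 5120 x 5120 layer size and rank 16 are illustrative assumptions, not Wan 2.2's actual training settings.

```python
from typing import List, Tuple

Matrix = List[List[float]]


def lora_param_counts(d_in: int, d_out: int, rank: int) -> Tuple[int, int]:
    """Trainable parameters for one linear layer: (full fine-tune, LoRA)."""
    full = d_in * d_out            # every entry of W is trained
    lora = rank * (d_in + d_out)   # A is rank x d_in, B is d_out x rank
    return full, lora


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain-Python matrix product (rows of a times columns of b)."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def merge_lora(w: Matrix, b: Matrix, a: Matrix, alpha: float, rank: int) -> Matrix:
    """Fold the adapter into the frozen weight: W' = W + (alpha / rank) * B @ A."""
    scale = alpha / rank
    delta = matmul(b, a)
    return [[w[i][j] + scale * delta[i][j] for j in range(len(w[0]))]
            for i in range(len(w))]


if __name__ == "__main__":
    full, lora = lora_param_counts(5120, 5120, rank=16)  # illustrative layer size
    print(full, lora, f"{lora / full:.3%}")  # 26214400 163840 0.625%
```

For that illustrative layer, LoRA trains 163,840 parameters instead of 26,214,400 (about 0.6%), which is why small style adapters are cheap to train and easy to share.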

Cross-Modal Creation: Seamless Integration

The model bridges image and video generation: static images can be animated (wind-blown foliage, moving characters), and still frames can be extracted from videos with style preservation. This maintains visual consistency across campaign elements.

Creative Assistance: Smarter Tools

Wan 2.2 adds intelligent creative features such as real-time parameter previews, expanded preset libraries (anime, realism, ads), and smarter default suggestions. These tools help creators brainstorm and iterate faster.

Part 3. Application Scenarios of Wan AI - Wan 2.2

Wan 2.2's combination of high-quality video/image generation and flexible control makes it suitable for numerous professional use cases. Whether in marketing, education, creative arts, ecommerce, or business, the model streamlines visual storytelling and content production.

- Marketing & Advertising: Create engaging promotional videos, product demos, and social media content to boost brand visibility.

- Education & Training: Produce educational materials, tutorials, and training videos in a vivid visual format.

- Creative Content: Ideal for music, art, and storytelling projects that turn artistic visions into reality.

- Ecommerce: Show products dynamically via video to attract consumer attention and drive sales.

- Business Presentations: Convert slide decks into engaging videos for more compelling presentations.

Part 4. How to Use Wan AI - Wan 2.2 to Generate Video from Image?

Getting started with Wan 2.2 is simple and accessible. Whether you download the model from GitHub or use ComfyUI's built-in example templates, you can begin generating cinematic-quality videos from text or images quickly. With step-by-step workflow instructions, the process is streamlined and the resulting MP4 output can be immediately edited or published.

Steps to Use Image-to-Video with the Wan 2.2 14B Model

This workflow turns an input image into a video. It requires about 20 GB of VRAM and takes about 1 hr 20 mins on an RTX 4090.
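As a rough sanity check on that VRAM figure, weight memory is simply parameter count times bytes per parameter. This Python sketch estimates the weights of one 14B expert only; it assumes one byte per parameter for the fp8 files used below and ignores activations, the text encoder, the VAE, and any offloading ComfyUI performs.

```python
# Bytes per parameter for common checkpoint precisions.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "bf16": 2, "fp8": 1}


def weight_gib(num_params: float, dtype: str) -> float:
    """Approximate size of model weights alone, in GiB (no activations or caches)."""
    return num_params * BYTES_PER_PARAM[dtype] / 2**30


if __name__ == "__main__":
    for dtype in ("bf16", "fp8"):
        print(dtype, f"{weight_gib(14e9, dtype):.1f} GiB per 14B expert")
```

One 14B expert comes to roughly 13 GiB in fp8 versus about 26 GiB in bf16, which suggests why the fp8-scaled checkpoints are the practical choice on a 24 GB card.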

Step 1: Update ComfyUI

Open ComfyUI Manager via the top toolbar and select Update ComfyUI. Restart ComfyUI to ensure you have the latest version and template support.

Step 2: Load the Wan-2.2 Workflow

Navigate to Workflow > Browse Templates > Video > "Wan2.2 14B I2V". Alternatively, download the official Wan 2.2 JSON workflow and drag it into the ComfyUI canvas.

Step 3: Download and Organize Model Files

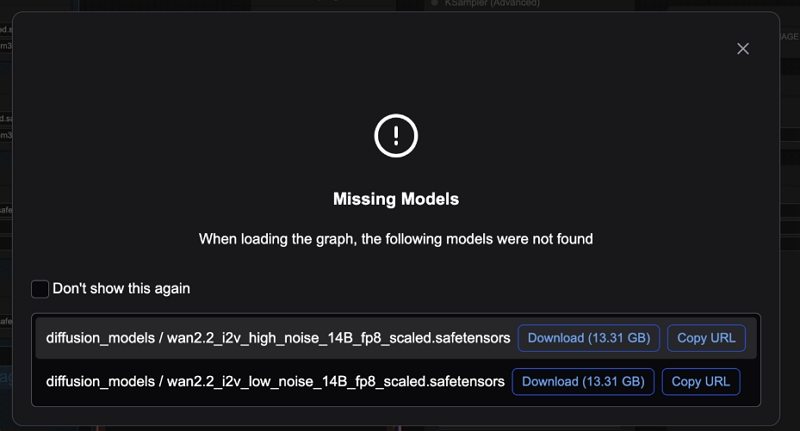

After loading the workflow JSON file, ComfyUI should prompt you to download the missing model files.

Here is what you need to download:

- Download wan2.2_i2v_high_noise_14B_fp8_scaled.safetensors and put it in ComfyUI > models > diffusion_models.

- Download wan2.2_i2v_low_noise_14B_fp8_scaled.safetensors and put it in ComfyUI > models > diffusion_models.

- Download umt5_xxl_fp8_e4m3fn_scaled.safetensors and put it in ComfyUI > models > text_encoders.

- Download wan_2.1_vae.safetensors and put it in ComfyUI > models > vae.
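Before loading the workflow, a short Python script can confirm each file landed in the right folder. The sketch assumes the default ComfyUI layout (`models/diffusion_models`, `models/text_encoders`, `models/vae`) under a root directory you pass on the command line; the script and its function names are hypothetical helpers, not part of ComfyUI.

```python
import sys
from pathlib import Path
from typing import Dict, List

# Files from the download list above, keyed by folder relative to the ComfyUI root.
REQUIRED_FILES: Dict[str, List[str]] = {
    "models/diffusion_models": [
        "wan2.2_i2v_high_noise_14B_fp8_scaled.safetensors",
        "wan2.2_i2v_low_noise_14B_fp8_scaled.safetensors",
    ],
    "models/text_encoders": ["umt5_xxl_fp8_e4m3fn_scaled.safetensors"],
    "models/vae": ["wan_2.1_vae.safetensors"],
}


def missing_models(comfy_root: Path) -> List[Path]:
    """Return every expected model path that is not a file under comfy_root."""
    missing = []
    for folder, names in REQUIRED_FILES.items():
        for name in names:
            path = comfy_root / folder / name
            if not path.is_file():
                missing.append(path)
    return missing


if __name__ == "__main__":
    root = Path(sys.argv[1]) if len(sys.argv) > 1 else Path("ComfyUI")
    gaps = missing_models(root)
    for path in gaps:
        print(f"missing: {path}")
    print("all model files found" if not gaps else f"{len(gaps)} file(s) missing")
```

Run it as, for example, `python check_models.py /path/to/ComfyUI` (the file name is arbitrary) and fix any reported path before restarting ComfyUI.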

Step 4: Ensure Correct Model Loading in Nodes

In the workflow nodes:

- Set the first Load Diffusion Model node to the high-noise model

- Set the second to the low-noise model

- Ensure the Load CLIP (text encoder) node uses umt5_xxl_fp8...

- Ensure the Load VAE node uses wan_2.1_vae.safetensors

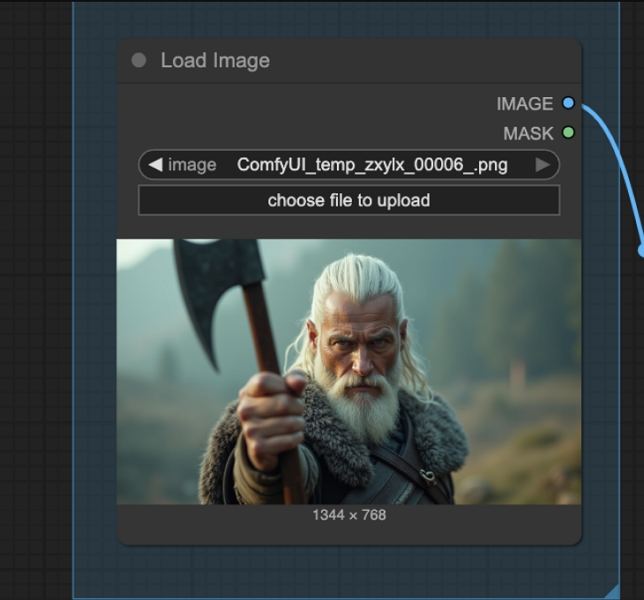
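If you drive ComfyUI from scripts, the same loader settings can be applied to a workflow exported in API format, where each node is stored under its node ID as `{"class_type": ..., "inputs": {...}}`. This sketch assumes the loaders use ComfyUI's `UNETLoader` (Load Diffusion Model), `CLIPLoader`, and `VAELoader` node classes with their `unet_name`, `clip_name`, and `vae_name` inputs. Node IDs differ per workflow, so you pass in the IDs of the high- and low-noise loaders yourself; the helper names are hypothetical.

```python
import json
from pathlib import Path
from typing import Any, Dict

# ComfyUI API-format workflow: node id -> {"class_type": ..., "inputs": {...}}
Workflow = Dict[str, Dict[str, Any]]

HIGH = "wan2.2_i2v_high_noise_14B_fp8_scaled.safetensors"
LOW = "wan2.2_i2v_low_noise_14B_fp8_scaled.safetensors"
TEXT_ENCODER = "umt5_xxl_fp8_e4m3fn_scaled.safetensors"
VAE = "wan_2.1_vae.safetensors"


def set_model_files(wf: Workflow, high_id: str, low_id: str) -> Workflow:
    """Point the loader nodes at the Wan 2.2 I2V files (mutates and returns wf)."""
    for node_id, name in ((high_id, HIGH), (low_id, LOW)):
        node = wf[node_id]
        if node["class_type"] != "UNETLoader":
            raise ValueError(f"node {node_id} is {node['class_type']}, not UNETLoader")
        node["inputs"]["unet_name"] = name
    for node in wf.values():
        if node["class_type"] == "CLIPLoader":
            node["inputs"]["clip_name"] = TEXT_ENCODER
        elif node["class_type"] == "VAELoader":
            node["inputs"]["vae_name"] = VAE
    return wf


def patch_file(path: Path, high_id: str, low_id: str) -> None:
    """Load an API-format JSON file, fix the loaders, and write it back."""
    wf = json.loads(path.read_text(encoding="utf-8"))
    path.write_text(json.dumps(set_model_files(wf, high_id, low_id), indent=2), encoding="utf-8")
```

The class-type check guards against swapping in the wrong node ID, which would otherwise silently break the high-noise/low-noise pairing.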

Step 5: Upload Input (Image or Start Frame)

Use the Load Image node to upload the image that will serve as the first frame of the video.

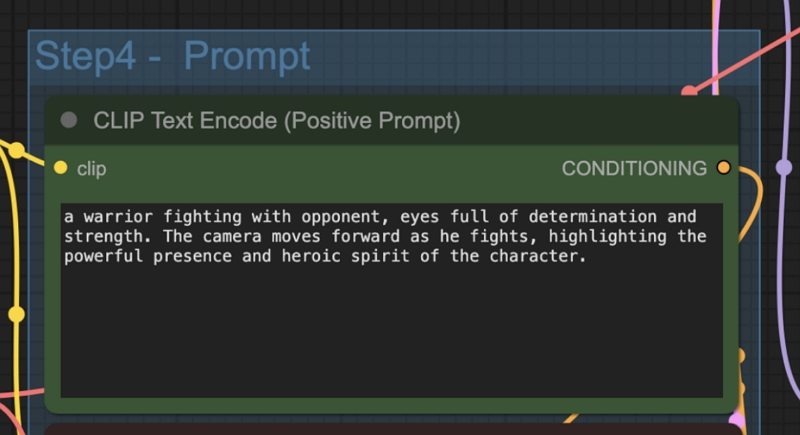

Step 6: Revise Prompt and Customize Settings

Edit both the positive and negative prompts in the CLIP Text Encode nodes. Optionally, adjust the video size and frame count in the EmptyHunyuanLatentVideo node settings (e.g., width, height, length).
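To pick a `length` value, this Python sketch makes two assumptions: that Wan models work with frame counts of the form 4n + 1 (for example 81), and that the latent uses the Wan 2.1 VAE's 16 channels with 4x temporal and 8x spatial compression, which is how we understand EmptyHunyuanLatentVideo to size its latent. The function names are hypothetical.

```python
from typing import Tuple


def wan_frame_count(seconds: float, fps: int) -> int:
    """Smallest frame count of the form 4n + 1 that covers `seconds` at `fps`."""
    needed = max(1, round(seconds * fps))
    n = -(-(needed - 1) // 4)  # ceiling division without floats
    return 4 * n + 1


def latent_shape(width: int, height: int, length: int, batch: int = 1) -> Tuple[int, ...]:
    """Latent shape, assuming 16 channels, 4x temporal and 8x spatial compression."""
    return (batch, 16, (length - 1) // 4 + 1, height // 8, width // 8)


if __name__ == "__main__":
    frames = wan_frame_count(5, 16)
    print(frames, latent_shape(832, 480, frames))  # 81 (1, 16, 21, 60, 104)
```

At an assumed 16 fps, a 5-second clip becomes 81 frames, and an 832 x 480 video then maps to a latent of shape (1, 16, 21, 60, 104). Larger width, height, or length values grow this latent and, with it, memory use and render time.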

Step 7: Run the Workflow and Generate Video

Press the Run button or use Ctrl (or Cmd) + Enter to start generation. ComfyUI will process and render the video sequence into MP4 format.
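Besides the Run button, ComfyUI exposes an HTTP endpoint for queueing jobs: a POST to `/prompt` whose JSON body holds the workflow in API format under the `prompt` key. The sketch below uses only the Python standard library and assumes ComfyUI is listening on its default local address, `http://127.0.0.1:8188`; the helper names are hypothetical.

```python
import json
import sys
import urllib.request
import uuid
from typing import Any, Dict

COMFY_URL = "http://127.0.0.1:8188"  # assumption: ComfyUI's default local address


def build_prompt_request(workflow: Dict[str, Any], client_id: str) -> urllib.request.Request:
    """Wrap an API-format workflow in the JSON body expected by POST /prompt."""
    body = json.dumps({"prompt": workflow, "client_id": client_id}).encode("utf-8")
    return urllib.request.Request(
        f"{COMFY_URL}/prompt",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def queue_workflow(workflow: Dict[str, Any]) -> str:
    """Send the workflow to ComfyUI's queue and return the prompt_id it assigns."""
    request = build_prompt_request(workflow, client_id=str(uuid.uuid4()))
    with urllib.request.urlopen(request) as response:
        return json.loads(response.read())["prompt_id"]


if __name__ == "__main__" and len(sys.argv) > 1:
    # Usage: python queue_wan.py workflow_api.json  (file name is hypothetical)
    with open(sys.argv[1], encoding="utf-8") as f:
        print("queued:", queue_workflow(json.load(f)))
```

The queued job runs through the same nodes as a Run-button job, so the output is saved wherever the workflow's save node writes it.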

Tips for Using Wan AI - Wan 2.2 from the Community

- ComfyUI's native Wan 2.2 workflow avoids external wrapper nodes and supports both T2V and I2V modes; update ComfyUI via ComfyUI Manager to get the templates.

- For lower-VRAM setups, consider a smaller model such as Wan 2.2's TI2V-5B or Wan 2.1's T2V-1.3B; the 1.3B model runs even on 8 GB cards (e.g., RTX 3060).

- If the ComfyUI interface shows red or missing nodes, run ComfyUI Manager → Install Missing Custom Nodes, especially for Kijai's WanVideoWrapper or related helper suites.

- Use TeaCache or SageAttention to speed up generation.
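TeaCache's core idea is to skip expensive model calls while the model's input barely changes between denoising steps, reusing a cached residual instead. The Python sketch below is a simplified illustration of that idea, not TeaCache's implementation: the real method measures change on timestep-embedding-modulated inputs and rescales it, while this toy version accumulates a plain relative L1 distance on the raw input and recomputes once the total crosses a hypothetical `threshold`.

```python
from typing import Callable, List, Optional


def l1_rel(a: List[float], b: List[float]) -> float:
    """Relative L1 distance of a from b (equal-length vectors)."""
    num = sum(abs(x - y) for x, y in zip(a, b))
    den = sum(abs(y) for y in b) or 1e-12
    return num / den


class ResidualCache:
    """Toy TeaCache-style step skipping (simplified; not the real implementation)."""

    def __init__(self, model: Callable[[List[float]], List[float]], threshold: float):
        self.model = model
        self.threshold = threshold
        self.prev_input: Optional[List[float]] = None
        self.cached_residual: Optional[List[float]] = None
        self.accumulated = 0.0
        self.computed = 0  # number of real model calls

    def __call__(self, x: List[float]) -> List[float]:
        if self.prev_input is not None and self.cached_residual is not None:
            self.accumulated += l1_rel(x, self.prev_input)
            if self.accumulated < self.threshold:
                # Input changed little since the last real call: reuse the residual.
                self.prev_input = x
                return [xi + ri for xi, ri in zip(x, self.cached_residual)]
        # First call or change too large: run the model and refresh the cache.
        out = self.model(x)
        self.computed += 1
        self.cached_residual = [o - xi for o, xi in zip(out, x)]
        self.prev_input = x
        self.accumulated = 0.0
        return out
```

A higher threshold skips more steps and runs faster at some cost in fidelity, which is the same kind of trade-off TeaCache's own threshold setting controls.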

Video Tutorial on Wan 2.2 VS Google Veo 3 | How to Use Wan 2.2 Free - Open Source AI Video Generator

Further Reading. Enhance Your Generated Video Output with HitPaw VikPea

To polish videos generated by Wan 2.2, HitPaw VikPea offers AI-powered enhancement tools that elevate output quality to professional standards. Whether you're refining a cinematic clip or restoring low-resolution footage, VikPea handles sharpening, denoising, color correction, and upscaling, with support for resolutions up to 8K.

- AI Pilot model automatically recommends the ideal enhancement model for your content.

- Face and Portrait Enhancer improves facial clarity and restores details with precision.

- 4K-8K Upscaling transforms low-res clips into sharp high-resolution video.

- Color Enhancement & SDR to HDR boosts vibrance and tone dynamically.

- Low-light Enhancement brightens dark scenes without overexposing highlights.

- Video Repair & Background Removal fixes glitches and removes unwanted elements.

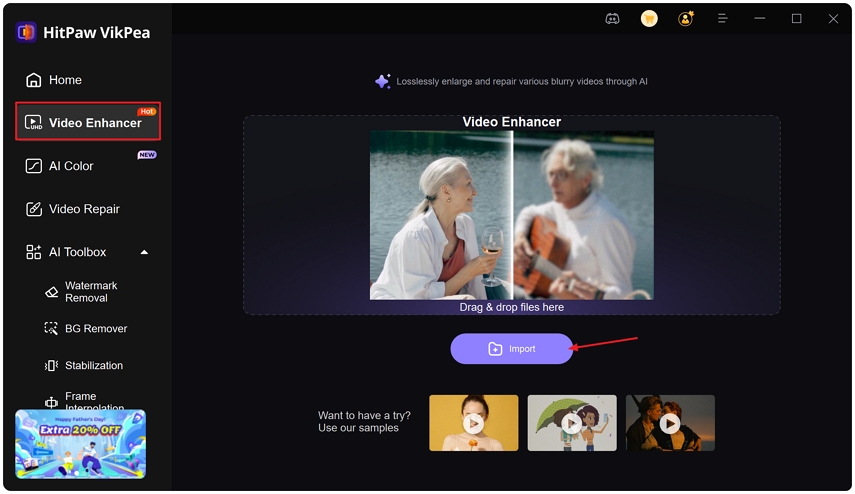

Step 1. Install the VikPea Video Enhancer on Windows, Mac, or mobile platforms. Import your Wan 2.2-generated video via "Choose file" or drag-and-drop.

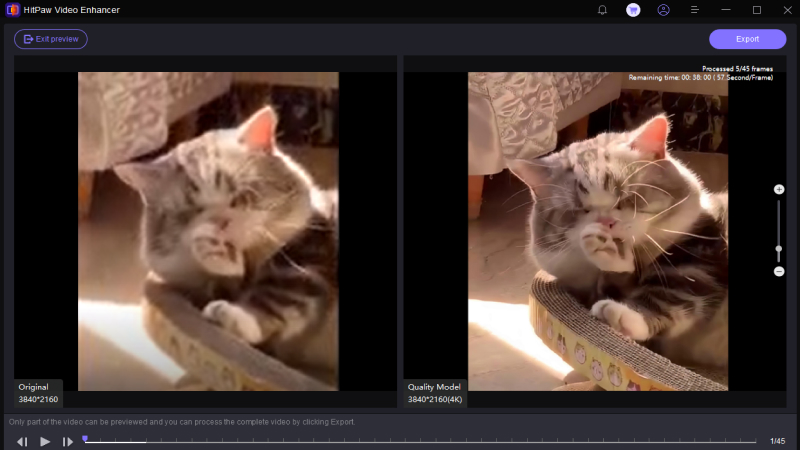

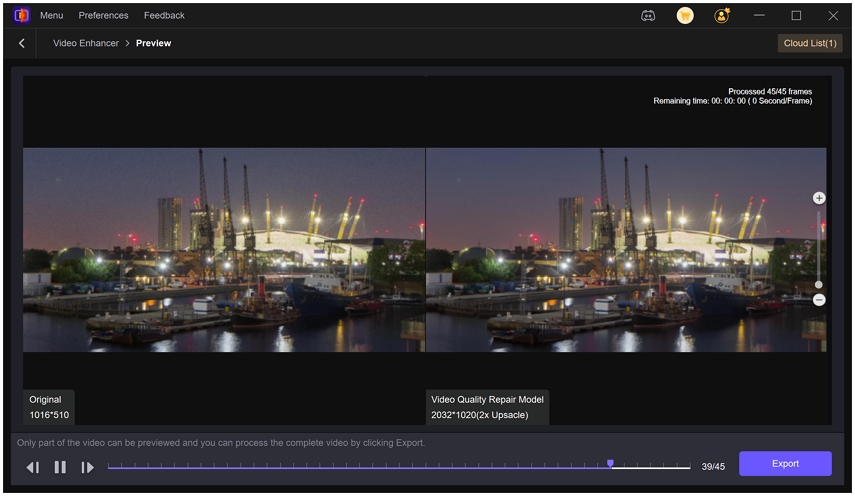

Step 2. Select a model to enhance the video. The software offers multiple AI models, such as the General Model, Sharpen Model, Portrait Model, and Video Quality Repair Model. Choose the model that suits your needs.

Step 3. Preview the enhancements on 3-5 second clips before the full export, then export the final video at up to 8K resolution if licensed.

Conclusion

Wan AI's Wan 2.2 marks a pivotal step in multimodal AI creation. With technical breakthroughs like MoE architecture, high-resolution output, synchronized audio-video, and efficient LoRA training, plus refined creative functions and cross-modal capabilities, it empowers creators across marketing, education, art, ecommerce, and more. Fully open source and accessible, Wan 2.2 offers an efficient, flexible toolkit for generating cinematic content. Paired with tools like HitPaw VikPea, it enables pro-level video polish. Whether you're a professional or an enthusiast, Wan 2.2 may transform how you generate and deliver visual narratives.
