Best GPU for AI: How to Choose the Right One for Your Work

The landscape of Artificial Intelligence has shifted rapidly. Whether you are a researcher training Large Language Models (LLMs) or a creator looking to enhance 4K video, choosing the best GPU for AI is one of the most important hardware decisions you will make. In 2026, the market is more diverse than ever, with specialized silicon designed to handle everything from massive neural networks to real-time generative art.

Part 1. What Makes a GPU Good for AI?

Not all graphics cards are created equal when it comes to machine learning. To find the best graphics card for AI, you need to look past gaming benchmarks and focus on "Compute" metrics.

Key Specs That Matter for AI

- CUDA / ROCm Support: NVIDIA's CUDA remains the industry standard, which makes an NVIDIA GPU the most compatible choice for libraries such as PyTorch and TensorFlow. ROCm is AMD's counterpart platform.

- VRAM Size (8GB / 12GB / 24GB+): Video RAM determines the size of the model you can load. For deep learning on a local workstation, 24GB is the "sweet spot", while enterprise-scale tasks typically call for 80GB or more per GPU.

- Tensor Cores / AI Accelerators: These are specialized hardware units designed for matrix multiplication, the "math" of AI.

- Memory Bandwidth: High bandwidth (measured in GB/s) ensures data flows quickly between the memory and the cores, preventing bottlenecks.

- Power & Cooling Considerations: AI tasks often run at 100% load for hours or days; robust thermal management is essential.
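To make the VRAM point above concrete, here is a minimal sketch of how model size translates into memory. It counts only the bytes needed for the weights and then adds a flat 20% allowance for the KV cache, activations, and framework overhead. That allowance, and the function names, are assumptions for illustration rather than measured values.

```python
def weights_vram_gb(params_billion: float, bytes_per_param: float) -> float:
    """Approximate memory for the model weights alone, in GB (10^9 bytes)."""
    # 1 billion parameters at 1 byte each is roughly 1 GB.
    return params_billion * bytes_per_param


def fits_in_vram(params_billion: float, bytes_per_param: float,
                 vram_gb: float, overhead_fraction: float = 0.2) -> bool:
    """Rough check: weights plus an assumed overhead must fit in VRAM."""
    needed = weights_vram_gb(params_billion, bytes_per_param) * (1 + overhead_fraction)
    return needed <= vram_gb


if __name__ == "__main__":
    # FP16 uses 2 bytes per parameter; 4-bit quantization uses about 0.5.
    for label, bytes_per_param in [("FP16", 2.0), ("4-bit", 0.5)]:
        for vram in (8, 12, 24):
            ok = fits_in_vram(7, bytes_per_param, vram)
            print(f"7B model, {label}, {vram}GB card: {'fits' if ok else 'does not fit'}")
```

Under these assumptions, a 7-billion-parameter model in FP16 needs about 14GB for weights alone, which is why 24GB cards are so popular for local work, while a 4-bit quantized version of the same model is small enough for an 8GB card.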

Training vs. Inference vs. AI Creation

- Training: Requires the highest VRAM and multi-GPU scalability (NVLink) to build models from scratch.

- Inference: Running a pre-trained model. Prioritizes stability and cost-per-query.

- AI Creators: Focus on real-world performance within creative software rather than raw compute. These users benefit most from GPU acceleration in user-friendly tools that handle video and image enhancement.
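The gap between training and inference is easier to see with numbers. The sketch below uses a common rule of thumb: FP16 inference needs about 2 bytes per parameter, while mixed-precision training with the Adam optimizer needs about 16 bytes per parameter (FP16 weights and gradients, plus FP32 master weights and two optimizer states). It ignores activation memory and assumes model state can be split evenly across GPUs, so treat the results as a lower bound, not a sizing guarantee.

```python
import math

BYTES_INFERENCE_FP16 = 2        # FP16 weights only
BYTES_TRAINING_MIXED_ADAM = 16  # 2 (weights) + 2 (gradients) + 12 (FP32 master copy, momentum, variance)


def model_state_gb(params_billion: float, bytes_per_param: float) -> float:
    """Memory for model state in GB, excluding activations."""
    return params_billion * bytes_per_param


def min_gpus_for_training(params_billion: float, vram_gb_per_gpu: float) -> int:
    """Lower bound on GPU count, assuming state is sharded evenly across cards."""
    total = model_state_gb(params_billion, BYTES_TRAINING_MIXED_ADAM)
    return math.ceil(total / vram_gb_per_gpu)


if __name__ == "__main__":
    print("7B inference (FP16):", model_state_gb(7, BYTES_INFERENCE_FP16), "GB")
    print("7B training (mixed precision, Adam):", model_state_gb(7, BYTES_TRAINING_MIXED_ADAM), "GB")
    for vram in (24, 80, 141):
        print(f"{vram}GB cards needed:", min_gpus_for_training(7, vram))
```

This is why training from scratch leans on multi-GPU scaling: by this estimate, the same 7B model that runs inference on a single 24GB card needs roughly 112GB of model state to train.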

Part 2. Best GPUs for AI by Use Case (2026)

Selecting the best GPU for AI requires matching your specific workload to the right hardware tier. Here are the top contenders for 2026.

1. Best GPU for AI Training (Professional & Enterprise)

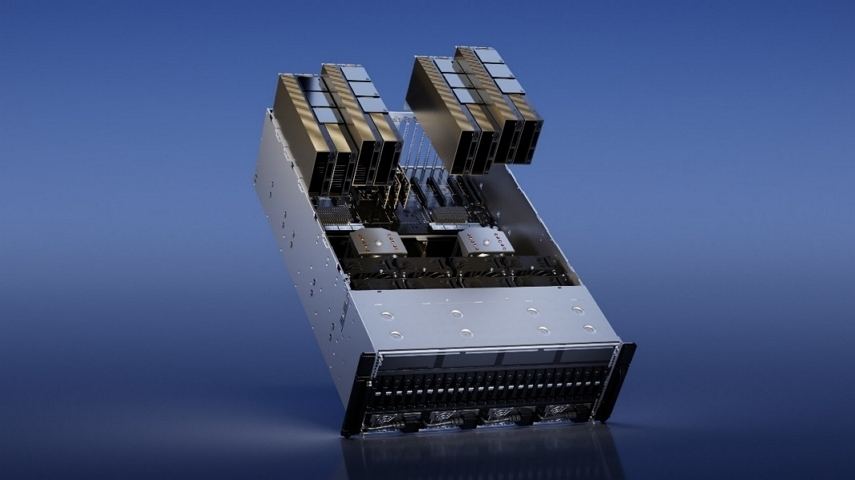

NVIDIA H100 / H200 Tensor Core

The H-series represents the pinnacle of NVIDIA GPUs for AI training. The H200 is a refinement of the H100 on the same Hopper architecture, offering nearly double the memory capacity (141GB versus 80GB) for Large Language Models (LLMs).

Ideal for: Data centers, research institutions, and companies training billion-parameter models from scratch.

Key Specs:

- Launch Date: H100 (announced Mar 2022, available from late 2022) / H200 (announced Nov 2023, available from 2024)

- Architecture: Hopper

- Memory: Up to 141GB HBM3e (H200)

- Memory Bandwidth: Up to 4.8 TB/s (H200) / 3.35 TB/s (H100 SXM)

- Tensor Core Performance: ~3,958 TFLOPS (FP8 with Sparsity)

- Power (TDP): Up to 700W (SXM) / 350-600W (PCIe)

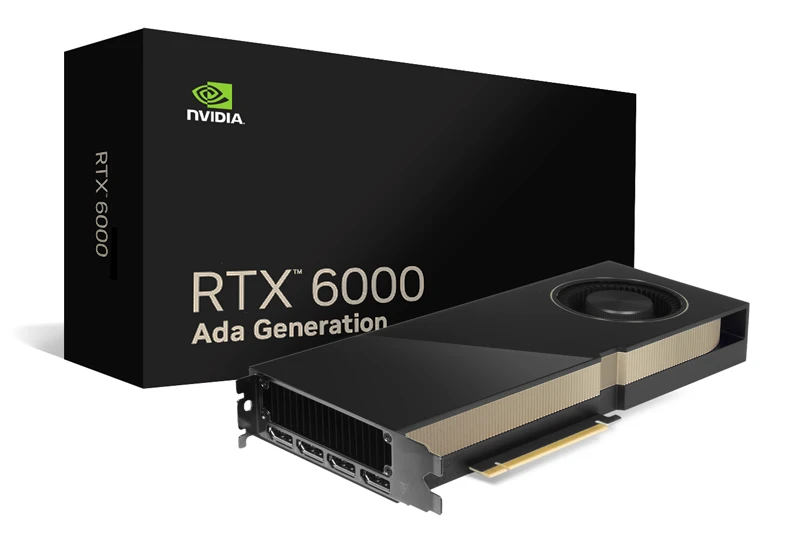

RTX 6000 Ada Generation

This is the ultimate professional workstation card. It is built on the same AD102 chip as the RTX 4090, but with double the VRAM and professional driver support.

Ideal for: AI researchers and engineers needing high VRAM on a local workstation for fine-tuning.

Key Specs:

- Launch Date: Dec 2022

- Architecture: Ada Lovelace

- Memory: 48GB GDDR6

- Memory Bandwidth: 960 GB/s

- Tensor Core Performance: 1,457 TFLOPS (Sparse)

- Power (TDP): 300W

2. Best GPU for AI Inference & Deployment

NVIDIA RTX 4090

Widely considered the best consumer graphics card for AI, the RTX 4090 has 24GB of VRAM, which makes it the standard for local LLM inference.

Ideal for: Independent AI developers and high-end power users.

Key Specs:

- Launch Date: Sept 2022

- Architecture: Ada Lovelace

- Memory: 24GB GDDR6X

- Memory Bandwidth: 1.01 TB/s

- Tensor Core Performance: 1,321 TFLOPS (Sparse)

- Power (TDP): 450W

RTX 3090 (The Value Workhorse)

In 2026, the RTX 3090 remains a top choice for deep learning because it offers the same 24GB of VRAM as the RTX 4090 at a fraction of the cost.

Ideal for: Students and budget-conscious researchers.

Key Specs:

- Launch Date: Sept 2020

- Architecture: Ampere

- Memory: 24GB GDDR6X

- Memory Bandwidth: 936 GB/s

- Tensor Core Performance: 564 TFLOPS (Sparse)

- Power (TDP): 350W
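Because AI workloads can hold a card at full load for hours or days, the TDP figures above translate directly into running costs. The sketch below is a simple upper-bound estimate that assumes the card draws its full TDP for the whole run. The electricity price of 0.15 per kWh is a hypothetical placeholder, so substitute your own rate.

```python
def energy_kwh(tdp_watts: float, hours: float) -> float:
    """Energy used if the card draws its full TDP for the whole run."""
    return tdp_watts / 1000 * hours


def running_cost(tdp_watts: float, hours: float, price_per_kwh: float) -> float:
    """Electricity cost for the GPU alone (the rest of the system is ignored)."""
    return energy_kwh(tdp_watts, hours) * price_per_kwh


if __name__ == "__main__":
    PRICE_PER_KWH = 0.15  # hypothetical rate; replace with your local price
    for name, tdp in [("RTX 4090", 450), ("RTX 3090", 350)]:
        kwh = energy_kwh(tdp, 24)
        cost = running_cost(tdp, 24, PRICE_PER_KWH)
        print(f"{name}: {kwh:.1f} kWh per 24h run, about {cost:.2f} in electricity")
```

Real-world draw is often below TDP, so this is better read as a ceiling for budgeting power and cooling than as a precise bill.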

3. Best GPU for AI Creators (Video, Image & Generative AI)

NVIDIA RTX 4070

The RTX 4070 is one of the most balanced choices for AI creators. It provides enough VRAM and Tensor Core performance to handle AI video upscaling, image enhancement, and generative AI workflows efficiently.

Best for:

- AI video enhancement

- Image and animation generation

- Content creators

Key Specs:

- Launch Date: April 2023

- Architecture: Ada Lovelace

- Memory: 12GB GDDR6X

- Memory Bandwidth: 504 GB/s

- Tensor Core Performance: 466 TFLOPS (Sparse)

- Power (TDP): 200W

RTX 4060 Ti (16GB Version)

The RTX 4060 Ti is an entry-level option for AI creators and beginners. The 16GB version offers generous VRAM for its price, but its limited memory bandwidth holds it back on heavier jobs. It still delivers solid AI acceleration for lighter workloads such as AI image enhancement and short video processing.

Best for:

- Entry-level AI creators

- Learning AI workflows

Key Specs:

- Launch Date: July 2023 (the 8GB version launched in May 2023)

- Architecture: Ada Lovelace

- Memory: 16GB GDDR6

- Memory Bandwidth: 288 GB/s

- Tensor Core Performance: 353 TFLOPS (Sparse)

- Power (TDP): 165W
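Memory bandwidth matters most when running LLMs locally, because generating each token requires reading the model's weights from VRAM. A rough upper bound on single-stream generation speed is therefore bandwidth divided by the size of the weights. The sketch below applies that approximation to the bandwidth figures listed for the consumer cards in this guide, using a 7B model quantized to 4 bits (about 3.5GB) as an assumed example. It ignores compute limits, the KV cache, and software overhead, so actual speeds will be lower.

```python
# Memory bandwidth in GB/s, taken from the spec lists in this guide.
BANDWIDTH_GBPS = {
    "RTX 4090": 1010,
    "RTX 3090": 936,
    "RTX 4070": 504,
    "RTX 4060 Ti 16GB": 288,
}


def max_tokens_per_second(bandwidth_gbps: float, weights_gb: float) -> float:
    """Bandwidth-bound ceiling: every token reads all weights once."""
    return bandwidth_gbps / weights_gb


if __name__ == "__main__":
    WEIGHTS_GB = 3.5  # assumed example: 7B parameters at about 0.5 bytes each
    for name, bandwidth in BANDWIDTH_GBPS.items():
        ceiling = max_tokens_per_second(bandwidth, WEIGHTS_GB)
        print(f"{name}: at most ~{ceiling:.0f} tokens/s")
```

The ranking is the useful part: by this measure the RTX 3090 stays close to the RTX 4090, while the RTX 4060 Ti trails well behind despite its 16GB of VRAM.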

Part 3. Pro Tip: Accelerate AI Tasks with GPU-Optimized Software

While having the best GPU for deep learning is a great foundation, the software you use determines your actual productivity. For creators specifically, hardware performance can often be bottlenecked by poorly optimized tools.

VikPea is an AI-powered video enhancement suite that is fully optimized for GPU acceleration. Whether you are using an RTX 4090 or a more modest 4060 Ti, VikPea utilizes the Tensor Cores of your NVIDIA card to process tasks up to 30x faster than traditional CPU-based software.

HitPaw VikPea Key Features:

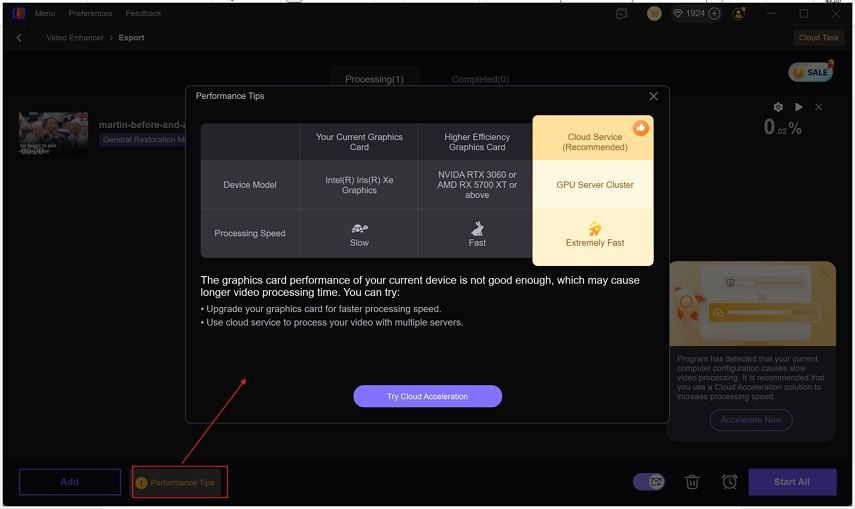

- Hybrid Power: It supports both local GPU acceleration and cloud computing, allowing you to offload heavy tasks when your local hardware is busy.

- 4K Video Upscaling: Transforming grainy 1080p footage into cinematic 4K has never been faster.

- Targeted AI Models: VikPea features specialized models, such as the General model for overall clarity, the Denoise model for removing visual noise from low-light footage, and the Portrait model for enhancing facial details and skin textures.

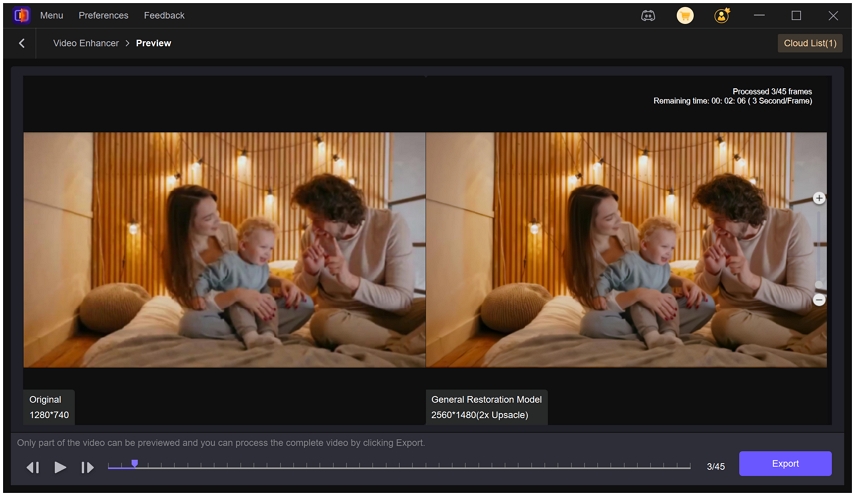

- Real-time Preview: See the AI in action with a split-screen view before you commit to the final export.

Step-by-Step Guide to Upscale Video with GPU

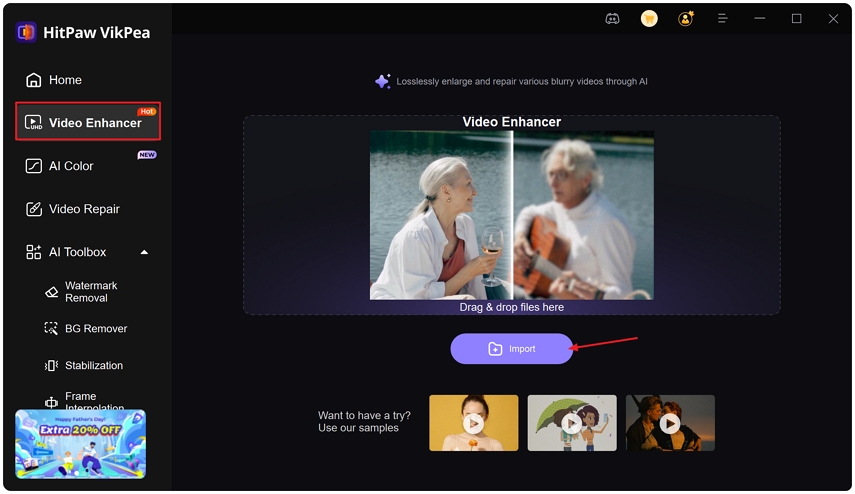

Step 1. Launch & Import: Open VikPea and drag your video into the Video Enhancer module.

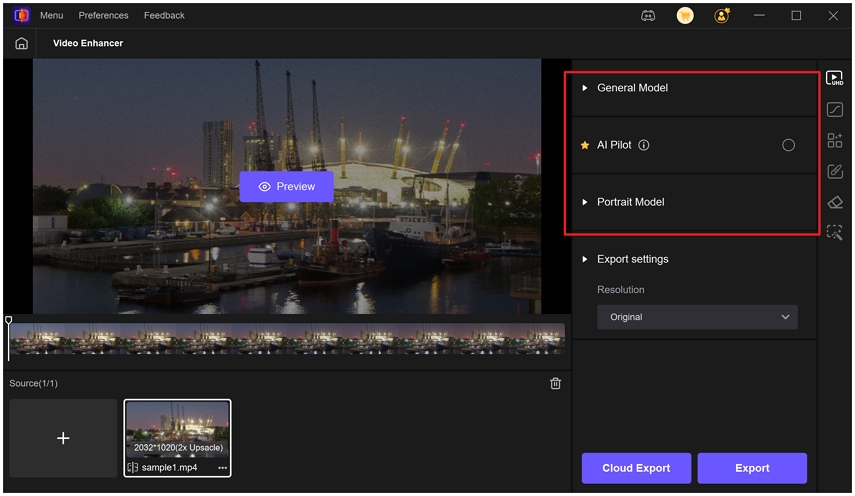

Step 2. Select Model: Choose the AI model that fits your footage (e.g., Portrait for vlogs).

Step 3. Preview: Click the Preview button to see a side-by-side, real-time preview of the effect.

Step 4. Export with Acceleration: Click the Export button to start processing the entire video. VikPea uses your computer's graphics card by default. If you select Cloud Export, processing can be even faster.

Part 4. GPU for AI FAQs

The best GPU for AI depends on your scale. For personal use and research, the RTX 4090 is the best AI GPU. For enterprise training, the NVIDIA H200 is the current gold standard.

No. The RTX 4070 has significantly more CUDA cores and higher memory bandwidth, making it much more efficient for deep learning tasks.

For VRAM, aim for a GPU with at least 8GB for basic inference. For training, 24GB is highly recommended.
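If you are unsure how much VRAM your current card has, one way to check on a machine with NVIDIA drivers installed is to query the `nvidia-smi` command-line tool. The sketch below is a minimal example under that assumption: the helper names are illustrative, and it simply returns an empty list when `nvidia-smi` is unavailable.

```python
import shutil
import subprocess


def parse_gpu_csv(text: str) -> list:
    """Parse 'name, memory_in_MiB' lines into (name, memory_in_GiB) tuples."""
    gpus = []
    for line in text.strip().splitlines():
        if "," not in line:
            continue
        name, mem_mib = [field.strip() for field in line.rsplit(",", 1)]
        try:
            gpus.append((name, int(mem_mib) / 1024))
        except ValueError:
            continue  # skip rows where memory is reported as non-numeric
    return gpus


def list_local_gpus() -> list:
    """Return detected NVIDIA GPUs, or an empty list if none can be queried."""
    if shutil.which("nvidia-smi") is None:
        return []
    try:
        result = subprocess.run(
            ["nvidia-smi", "--query-gpu=name,memory.total",
             "--format=csv,noheader,nounits"],
            capture_output=True, text=True, timeout=10,
        )
    except (OSError, subprocess.TimeoutExpired):
        return []
    return parse_gpu_csv(result.stdout) if result.returncode == 0 else []


if __name__ == "__main__":
    for name, vram_gib in list_local_gpus():
        print(f"{name}: {vram_gib:.1f} GiB of VRAM")
```

Compare the reported figure with the 8GB and 24GB guidelines above to see which workloads your current hardware can realistically handle.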

OpenAI reportedly uses massive clusters of NVIDIA H100 and A100 GPUs to train models like GPT-4 and Sora.

NVIDIA is currently superior due to the CUDA ecosystem, which is the most widely supported software platform for AI development.

Conclusion

Choosing the best GPU for deep learning or content creation requires balancing VRAM, compute power, and software compatibility. While NVIDIA leads the pack in hardware, tools like VikPea ensure you are getting every bit of performance out of your investment through optimized GPU acceleration.
