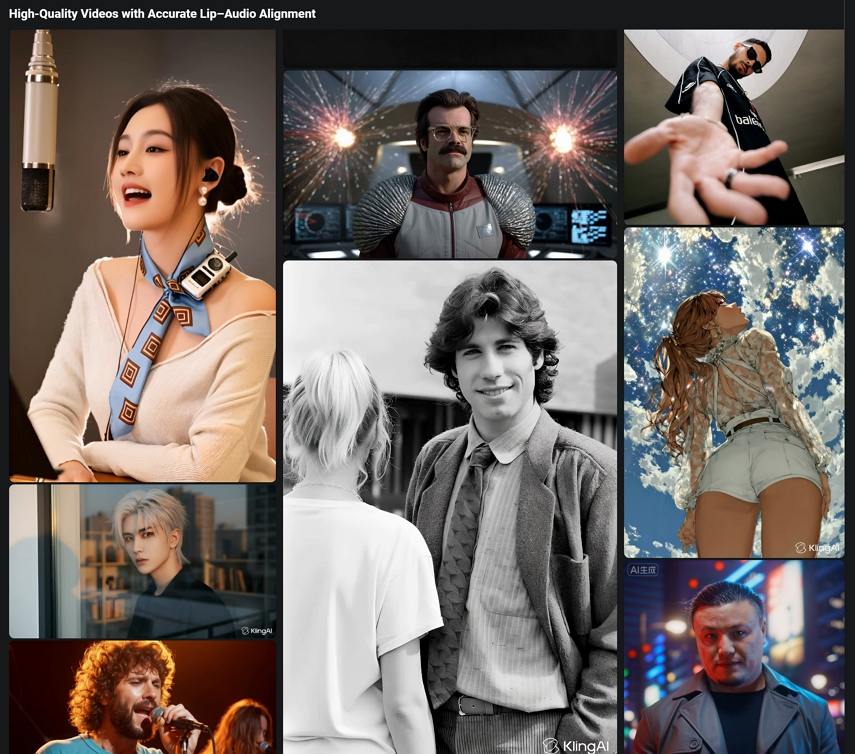

Kling Avatar: Redefining the Future of AI-Generated Humans

In recent years, artificial intelligence has rapidly evolved from generating static images to creating full-length, dynamic videos. Among these advances, AI-generated avatars (virtual humans capable of speaking, emoting, and performing on screen) have become a focal point of innovation. Yet even with tools like HeyGen and Synthesia, challenges such as unnatural lip-syncing, rigid expressions, and inconsistent identity remain.

Enter Kling Avatar, a groundbreaking system developed by Kuaishou's AI team, which pushes the boundaries of what's possible with digital humans. Designed to generate hyper-realistic avatars that move and speak naturally, Kling Avatar represents a major leap forward in AI-driven video synthesis.

Part 1. What Is Kling Avatar?

Kling Avatar is a cutting-edge digital human generation model developed by Kuaishou (Kling AI team). Built as part of the broader Kling AI ecosystem, it focuses specifically on the avatar layer, the part responsible for generating talking heads, facial expressions, and emotional performances.

While Kling AI as a whole is capable of generating full cinematic scenes from text prompts, Kling Avatar zooms in on a single aspect: creating highly realistic, long-duration character animations based on image, text, or audio inputs. The result is a talking, expressive avatar that feels convincingly human.

Part 2. How Kling Avatar Works: The Technology Behind It

At its core, Kling Avatar introduces a new paradigm for AI video generation through two key technologies:

- Multimodal Large Language Model (MLLM) Director: This system functions like a digital director. It interprets user prompts (text, voice, or image) and structures them into a "blueprint script." This script defines how the avatar should move, speak, and express emotion over time.

- Cascaded Long-Duration Generation: Instead of generating an entire video in one pass (which often causes artifacts and inconsistencies), Kling Avatar creates short, coherent video segments based on keyframes. These segments are then stitched together seamlessly, ensuring consistent identity and natural motion throughout long sequences.
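Kuaishou has not published a public API for Kling Avatar, so the Python sketch below is only a toy illustration of the two-stage idea described above, not Kuaishou's implementation. A stand-in "director" splits the performance into timed shots (the blueprint), keyframes are placed at every shot boundary and tied to one reference identity, and each short segment is generated between two neighbouring keyframes before the segments are concatenated. Every name here (`Shot`, `plan_blueprint`, `generate_keyframes`, `generate_segment`, `cascade`), the 5-second shot length, and the 25 fps frame rate are hypothetical assumptions; frames are plain dictionaries rather than images.

```python
from __future__ import annotations

from dataclasses import dataclass

# Hypothetical toy model of a "director + cascaded segments" pipeline.
# Nothing here is Kling Avatar's real code or API.


@dataclass
class Shot:
    """One entry in the (hypothetical) blueprint script."""
    start_s: float
    end_s: float
    emotion: str
    action: str


def plan_blueprint(duration_s: float, shot_len_s: float = 5.0) -> list[Shot]:
    """Stand-in for the MLLM director: split the performance into timed shots.

    A real director would infer emotion and action from the text, audio, and
    image prompts; here every shot gets fixed placeholder labels.
    """
    shots: list[Shot] = []
    t = 0.0
    while t < duration_s:
        end = min(t + shot_len_s, duration_s)
        shots.append(Shot(start_s=t, end_s=end, emotion="neutral", action="talking"))
        t = end
    return shots


def generate_keyframes(shots: list[Shot], identity: str) -> list[dict]:
    """Place one keyframe at every shot boundary, all tied to the same identity."""
    times = [shot.start_s for shot in shots] + [shots[-1].end_s]
    return [{"t": t, "identity": identity} for t in times]


def generate_segment(first_kf: dict, last_kf: dict, shot: Shot, fps: int) -> list[dict]:
    """Placeholder for the video model: fill the frames between two keyframes."""
    n_frames = round((last_kf["t"] - first_kf["t"]) * fps)
    return [
        {"t": first_kf["t"] + i / fps, "identity": first_kf["identity"], "emotion": shot.emotion}
        for i in range(n_frames)
    ]


def cascade(duration_s: float, identity: str = "reference_face", fps: int = 25) -> list[dict]:
    """Blueprint -> keyframes -> short segments -> stitched frame list."""
    shots = plan_blueprint(duration_s)
    keyframes = generate_keyframes(shots, identity)
    video: list[dict] = []
    for i, shot in enumerate(shots):
        # Each segment only has to stay consistent between two keyframes that
        # share the same identity, rather than across the whole performance.
        video.extend(generate_segment(keyframes[i], keyframes[i + 1], shot, fps))
    return video
```

For example, `cascade(12.0)` plans shots covering 0 to 5 s, 5 to 10 s, and 10 to 12 s and returns 300 frames (125 + 125 + 50) at 25 fps, all carrying the same identity tag. Because each segment depends only on its own pair of keyframes, a real system could in principle generate the segments in parallel.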

The result? Avatars that maintain the same face, voice, and emotional tone even across multi-minute performances, a rare feat in the current AI video generation landscape.

How Kling Avatar Differs from Other AI Avatar Tools

While other AI avatar platforms, such as HeyGen or OmniHuman, offer impressive text-to-speech avatars, Kling Avatar distinguishes itself through its ability to generate long-duration, emotion-rich performances without losing identity or consistency.

Where many systems struggle with flickering faces or robotic lip-syncs, Kling Avatar maintains smooth transitions, natural eye movements, and lifelike body gestures. It also benefits from Kuaishou's large-scale video diffusion infrastructure, giving it a strong technical backbone.

However, Kling Avatar is currently available mainly through research previews and internal testing, meaning most users can only access demos rather than full public tools.

Part 3. Key Features of Kling Avatar

Kling Avatar stands out for its impressive range of features that bring AI-generated characters to life:

- High-Precision Lip Sync: Achieves near-perfect alignment between mouth movement and spoken dialogue, supporting multiple languages and accents.

- Emotional Realism: Captures subtle expressions, eye movement, and gestures to convey authentic emotion.

- Long-Duration Consistency: Maintains the same facial identity, hairstyle, and lighting conditions across minutes of footage.

- Multimodal Control: Accepts text, audio, and image prompts, allowing creators to guide performance style, tone, and pacing.

- Optimized for Real-World Application: Suitable for virtual influencers, training videos, marketing content, or digital storytelling.

These capabilities make Kling Avatar one of the most advanced AI avatar generation systems in 2025.

Part 4. Real-World Applications

Kling Avatar opens up a wide range of possibilities across industries:

- Virtual Influencers & Content Creators: Easily create lifelike digital presenters for social media, livestreams, and short-form videos.

- Corporate Training & Marketing: Generate multilingual digital spokespeople for global audiences.

- Film and Entertainment: Use AI avatars for storyboarding, previsualization, or digital doubles.

- Education & Storytelling: Design personalized avatars to teach, narrate, or guide interactive content.

By merging AI creativity with cinematic realism, Kling Avatar bridges the gap between machine-generated content and human emotion.

Part 5. Enhancing Kling Avatar Videos with HitPaw VikPea

While Kling Avatar delivers remarkably realistic digital humans, AI-generated videos can still face common challenges, such as slight blurriness, compression artifacts, or color inconsistencies, especially when exported or shared across platforms.

To make these AI-generated clips look sharper and more cinematic, creators can turn to HitPaw VikPea, a professional AI video enhancer designed to improve resolution, restore details, and enhance visual quality effortlessly.

What HitPaw VikPea Does:

- Upscales videos from 720p to 4K without losing texture.

- Reduces compression noise and smooths diffusion-based artifacts.

- Restores facial details for digital humans and ensures smooth motion between frames.

- Repairs corrupted videos, removes backgrounds, and stabilizes shaky footage.
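To put the 720p-to-4K claim in numbers, the short Python calculation below compares frame sizes. It assumes "4K" means the consumer UHD size of 3840 × 2160 (DCI 4K is 4096 × 2160) and that 720p is 1280 × 720; the `RESOLUTIONS` table and the `upscale_factor` helper are illustrative and not part of HitPaw VikPea.

```python
from __future__ import annotations

# Illustrative frame sizes (width, height) in pixels; "4K" assumed to be UHD.
RESOLUTIONS = {
    "720p": (1280, 720),
    "1080p": (1920, 1080),
    "4K UHD": (3840, 2160),
}


def upscale_factor(src: str, dst: str) -> tuple[float, float]:
    """Return (per-axis scale factor, total pixel-count ratio) from src to dst."""
    src_w, src_h = RESOLUTIONS[src]
    dst_w, dst_h = RESOLUTIONS[dst]
    return dst_w / src_w, (dst_w * dst_h) / (src_w * src_h)


if __name__ == "__main__":
    axis, pixels = upscale_factor("720p", "4K UHD")
    print(f"{axis:.0f}x per axis, {pixels:.0f}x as many pixels")  # 3x per axis, 9x as many pixels
```

Going from 720p to 4K UHD triples each dimension, so the output has nine times as many pixels, and eight of every nine output pixels have no direct counterpart in the source. That is why the result depends so heavily on how well the enhancer restores plausible detail.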

How to Use HitPaw VikPea to Enhance Kling Avatar Videos:

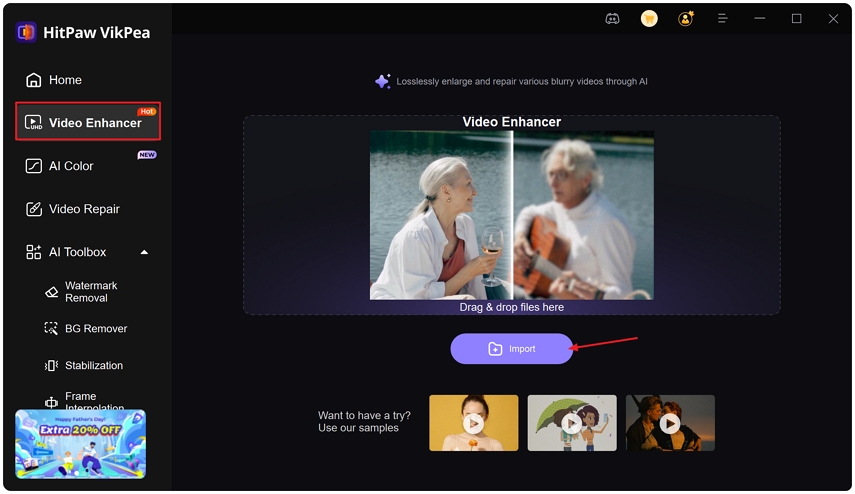

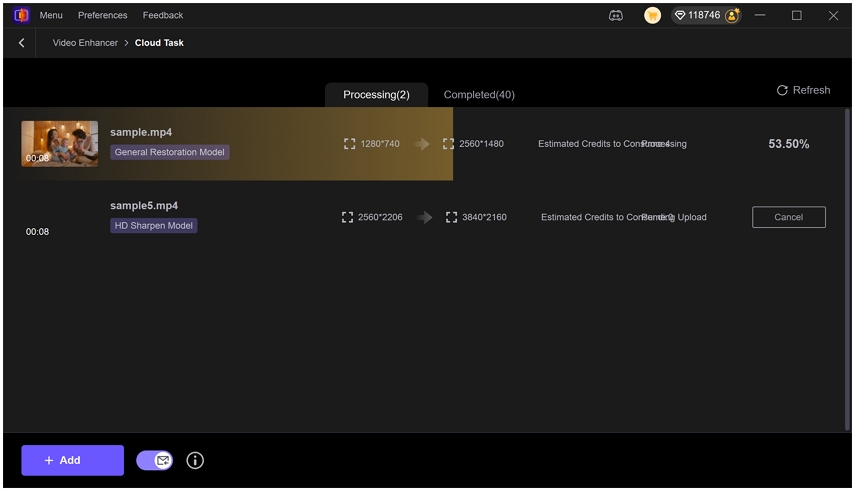

Step 1. Launch HitPaw VikPea and choose Video Enhancer from the left sidebar. Then import your Kling Avatar video.

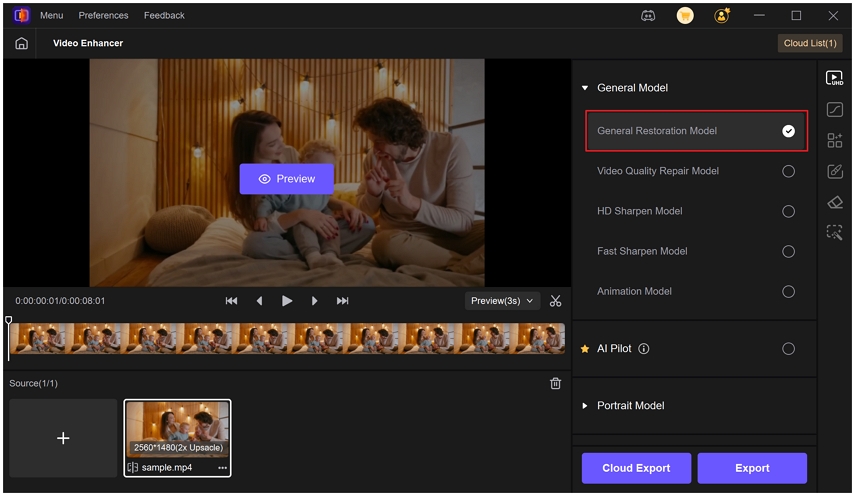

Step 2. Select an enhancement model: for example, the "General Restoration Enhancement" model for overall quality improvement, or the "Portrait Model" for digital avatars.

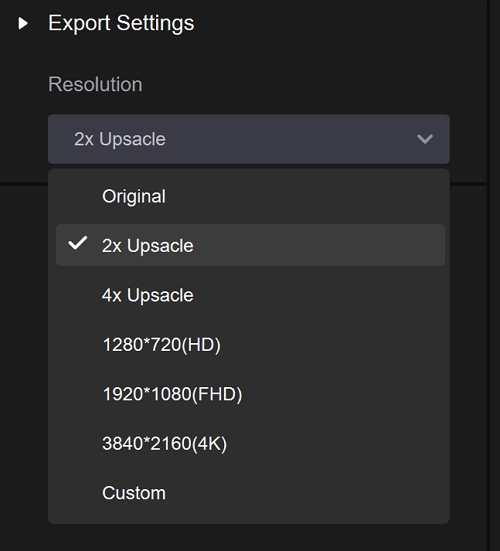

Step 3. Configure the output settings, such as output resolution, bit rate, format, and save path.

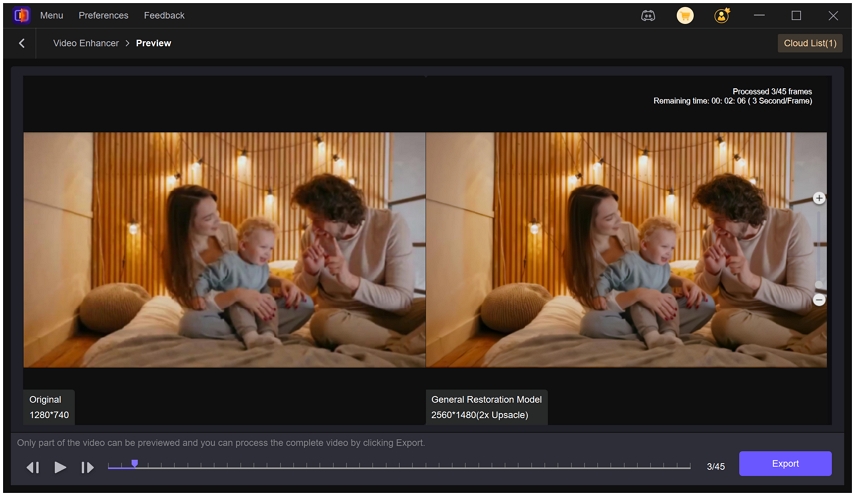

Step 4. Click the Preview button to see a split-screen comparison.

Step 5. If everything looks good, click the Export button to save the enhanced video.
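When choosing a bit rate in Step 3, a rough file-size estimate can help: size ≈ bit rate × duration ÷ 8. The Python helper below is a sketch of that arithmetic only. The function name, the 40 Mbps example value, and the optional audio bit rate are assumptions rather than VikPea defaults, and real files will differ somewhat because of container overhead and variable bit rate encoding.

```python
def estimate_size_mb(video_mbps: float, duration_s: float, audio_kbps: float = 0.0) -> float:
    """Approximate output size in megabytes (10**6 bytes) at a constant bit rate.

    Hypothetical helper: ignores container overhead and variable bit rate.
    """
    total_bits_per_s = video_mbps * 1_000_000 + audio_kbps * 1_000
    total_bytes = total_bits_per_s * duration_s / 8  # 8 bits per byte
    return total_bytes / 1_000_000


if __name__ == "__main__":
    # Hypothetical example: a 60-second clip exported at 40 Mbps, no audio.
    print(estimate_size_mb(40, 60))  # 300.0
```

Doubling either the bit rate or the duration doubles the estimate, so a higher bit rate for 4K output is a direct trade-off against storage and upload size.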

This process helps AI-generated avatars appear more natural and professional, perfect for social media creators, marketers, or video editors seeking higher production quality.

Conclusion: The Future of AI-Driven Digital Humans

Kling Avatar marks a defining moment in the evolution of AI video generation. By combining multimodal input, emotional intelligence, and long-duration stability, it brings digital humans closer than ever to cinematic realism.

As this technology continues to mature, the line between human and AI performance will blur even further, unlocking new opportunities for content creation, storytelling, and personalization.

And for creators who want their AI avatars to look even sharper and more lifelike, tools like HitPaw VikPea can be the perfect finishing touch, transforming raw AI outputs into visually stunning, studio-quality videos.
