3D Models in ComfyUI: Best Generators, Image-to-3D Workflows & Enhancement

ComfyUI has quickly become a go-to interface for building and experimenting with advanced AI workflows, particularly for creators working with 3D models. It can turn an image into a 3D asset, and it doubles as a testbed for experimental generators, which makes it a more flexible option than a lot of the more traditional tools out there. This article introduces the 3D model types that ComfyUI users are currently loving, explains how ComfyUI 3D model generation works, and shows how the forthcoming HitPaw API integration can take 3D model renders to the next level with pro-level photo enhancement.

Part 1. What Are 3D Models in ComfyUI?

A typical ComfyUI 3D model workflow combines a few different components: diffusion models, depth estimation, and mesh reconstruction nodes. This is where it gets interesting. ComfyUI isn't a one-click generator. Instead, it lets creators build pipelines visually for tasks like converting an image into a 3D model, refining it, and then rendering it.
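To make the "pipeline of nodes" idea concrete, here is a minimal sketch of a node graph written in the shape ComfyUI uses for API-format workflows: each node id maps to a `class_type` and its `inputs`, and a link to another node is a `[source_node_id, output_index]` pair. `LoadImage` is a built-in node. The other class names, their input names, and the `execution_order` helper are hypothetical placeholders for illustration, not real nodes or ComfyUI's own scheduler.

```python
from graphlib import TopologicalSorter

# Node graph in API-format shape: node id -> {"class_type", "inputs"}.
# A link is written as [source_node_id, output_index].
# Only "LoadImage" is a built-in node; the "Hypothetical*" names are made up.
workflow = {
    "1": {"class_type": "LoadImage", "inputs": {"image": "chair.png"}},
    "2": {"class_type": "HypotheticalMultiView", "inputs": {"image": ["1", 0], "views": 6}},
    "3": {"class_type": "HypotheticalMeshReconstruct", "inputs": {"views": ["2", 0]}},
    "4": {"class_type": "HypotheticalRenderPreview", "inputs": {"mesh": ["3", 0]}},
}


def is_link(value):
    """True if an input value looks like a [node_id, output_index] link."""
    return (
        isinstance(value, list)
        and len(value) == 2
        and isinstance(value[0], str)
        and isinstance(value[1], int)
    )


def execution_order(graph):
    """Return one valid order in which the nodes could run (upstream first)."""
    deps = {
        node_id: {v[0] for v in node["inputs"].values() if is_link(v)}
        for node_id, node in graph.items()
    }
    return list(TopologicalSorter(deps).static_order())


order = execution_order(workflow)
print([workflow[node_id]["class_type"] for node_id in order])
```

Because every stage is just a node with named inputs, swapping one reconstruction method for another means changing a single entry in the graph rather than rebuilding the whole thing.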

This flexibility is part of the reason why ComfyUI 3D models are so popular among developers, game artists, and researchers who are all working with AI-driven 3D content.

Part 2. Popular 3D Model Types Available in ComfyUI

The entries below are popular model categories and pipelines rather than single 'download and run' models - though that would be cool too.

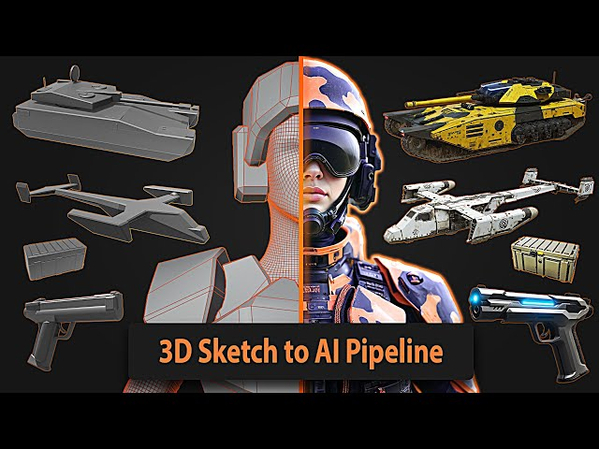

1. Image-to-3D Pipelines

These are pipelines that focus on converting an image into a 3D model using ComfyUI. Typically, you'll start with a single image (or even a few views), and then the system will use that to estimate some depth and geometry, producing a rough mesh.

Some use cases for this approach include:

- Creating product mockups

- Building character busts

- Concept prop designs

This way of working is a direct answer to the demand for 3D models that start from an image.
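The "depth to rough mesh" step described above can be sketched in plain Python. This is a simplified heightfield approach, assuming you already have a small grid of depth values. The function names are ours, and real image-to-3D nodes use considerably more sophisticated reconstruction.

```python
def depth_to_mesh(depth, scale=1.0):
    """Turn a 2D grid of depth values into vertices and triangle faces."""
    rows, cols = len(depth), len(depth[0])
    # One vertex per pixel: x and y come from the grid, z from the depth value.
    vertices = [
        (float(x), float(y), depth[y][x] * scale)
        for y in range(rows)
        for x in range(cols)
    ]
    faces = []
    for y in range(rows - 1):
        for x in range(cols - 1):
            i = y * cols + x  # index of the top-left vertex of this grid cell
            # Split each grid cell into two triangles.
            faces.append((i, i + 1, i + cols))
            faces.append((i + 1, i + cols + 1, i + cols))
    return vertices, faces


def to_obj(vertices, faces):
    """Serialize the mesh as Wavefront OBJ text (face indices are 1-based)."""
    lines = [f"v {x} {y} {z}" for x, y, z in vertices]
    lines += [f"f {a + 1} {b + 1} {c + 1}" for a, b, c in faces]
    return "\n".join(lines) + "\n"


# Tiny made-up depth map: a bump in the middle of a flat 3x3 patch.
depth = [
    [0.0, 0.1, 0.0],
    [0.1, 0.5, 0.1],
    [0.0, 0.1, 0.0],
]
vertices, faces = depth_to_mesh(depth)
obj_text = to_obj(vertices, faces)
```

A single-view heightfield like this only captures the side facing the camera, which is one reason single-image results tend to be rough.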

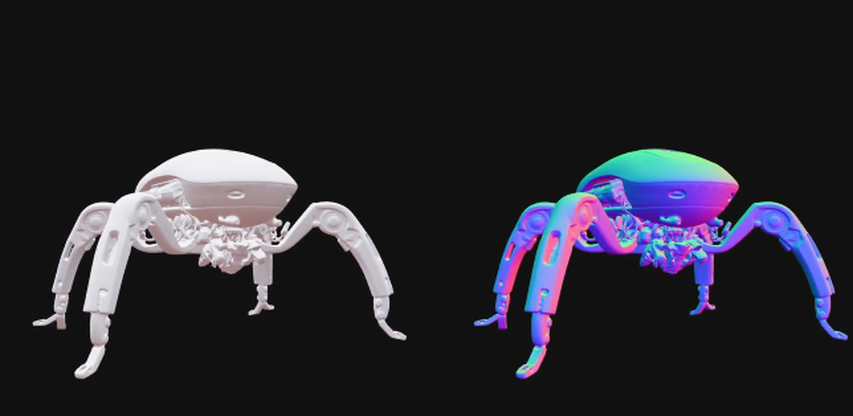

2. Multi-View Diffusion Models

There are also more advanced setups that generate multiple views of an object before reconstructing the geometry. These often come up when people discuss the 'best 3D models ComfyUI has to offer', because the extra views help improve structural consistency.

The advantages of this approach are:

- Better depth accuracy

- Less distortion

- You end up with a more usable mesh
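To picture what "multiple views" means in practice, here is a small sketch that places cameras on a circle around an object. It assumes evenly spaced azimuths at a fixed elevation, which is a common choice but not something every multi-view model uses. The function name and default values are ours.

```python
import math


def orbit_cameras(n_views, radius=2.0, elevation_deg=20.0):
    """Evenly spaced camera positions on a circle around the origin (y is up)."""
    elev = math.radians(elevation_deg)
    cameras = []
    for k in range(n_views):
        azimuth = 2 * math.pi * k / n_views
        position = (
            radius * math.cos(elev) * math.cos(azimuth),
            radius * math.sin(elev),
            radius * math.cos(elev) * math.sin(azimuth),
        )
        cameras.append(
            {"azimuth_deg": round(math.degrees(azimuth), 1), "position": position}
        )
    return cameras


views = orbit_cameras(6)
for cam in views:
    print(cam["azimuth_deg"], [round(c, 2) for c in cam["position"]])
```

Each camera sits at the same distance from the object, so the reconstruction step sees it from all sides at a consistent scale.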

3. Experimental Free 3D Models

A lot of people in the community build and share free ComfyUI 3D model workflows made from a mix of open-source components. These are great for learning and experimentation, even if the final output isn't ready for production yet.

This means that working with 3D models in ComfyUI is actually pretty accessible, even if you don't have the budget for licensing.

Part 3. How ComfyUI 3D Model Generation Works

So how does ComfyUI generate 3D models? At a high level, it looks like this:

- You give the system an input image or a prompt to work from

- Diffusion-based view synthesis takes place (basically, it generates a bunch of views of an object)

- The system then tries to work out some depth or normal data

- It uses that data to reconstruct a mesh

- Finally, texture projection can be added to make the final 3D model look more realistic

This modular way of doing things means that creators can swap out individual components to experiment with different methods, which is precisely why ComfyUI 3D model generator workflows vary so much in speed and quality.
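The swap-a-component idea can be sketched as a list of stage functions that each take and return a shared state. Everything here is a stand-in: the stage names mirror the steps above, but the bodies only produce placeholder strings rather than real views, depth maps, or meshes.

```python
def synthesize_views(state):
    # Placeholder: pretend to generate n_views images of the object.
    return {**state, "views": [f"view_{i}" for i in range(state.get("n_views", 4))]}


def estimate_depth(state):
    return {**state, "depth_maps": [f"depth({v})" for v in state["views"]]}


def estimate_normals(state):
    # Alternative to estimate_depth: same role in the pipeline, different data.
    return {**state, "normal_maps": [f"normals({v})" for v in state["views"]]}


def reconstruct_mesh(state):
    source = "depth_maps" if "depth_maps" in state else "normal_maps"
    return {**state, "mesh": f"mesh from {len(state[source])} {source}"}


def project_texture(state):
    return {**state, "textured": True}


def run_pipeline(stages, state):
    """Run each stage in order, passing the growing state along."""
    for stage in stages:
        state = stage(state)
    return state


start = {"image": "chair.png", "n_views": 4}
depth_result = run_pipeline(
    [synthesize_views, estimate_depth, reconstruct_mesh, project_texture], start
)
# Swapping one stage changes the method without touching the rest.
normal_result = run_pipeline(
    [synthesize_views, estimate_normals, reconstruct_mesh, project_texture], start
)
print(depth_result["mesh"])
print(normal_result["mesh"])
```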

Limitations of ComfyUI 3D Outputs

While ComfyUI 3D models are incredibly powerful, they still come up against a few hurdles:

- Textures can end up looking soft and blurry

- Generated renders can have a lot of noise

- Preview images often aren't in high enough resolution

- Lighting can get pretty inconsistent

That's where post-processing comes in - especially if you're looking to put your work out there or publish results.
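If you want a rough number for "soft and blurry" before and after post-processing, one common heuristic is the variance of the Laplacian: sharper images tend to score higher. The sketch below is a plain-Python version on tiny made-up grayscale grids. It is our own illustration, not part of any ComfyUI or HitPaw tooling.

```python
def laplacian_variance(gray):
    """Variance of a 4-neighbour Laplacian over a 2D grayscale grid."""
    rows, cols = len(gray), len(gray[0])
    responses = []
    # Skip the border so every pixel has four neighbours.
    for y in range(1, rows - 1):
        for x in range(1, cols - 1):
            lap = (
                gray[y - 1][x] + gray[y + 1][x] + gray[y][x - 1] + gray[y][x + 1]
                - 4 * gray[y][x]
            )
            responses.append(lap)
    mean = sum(responses) / len(responses)
    return sum((r - mean) ** 2 for r in responses) / len(responses)


# Two made-up 4x4 patches: a hard edge and a smooth ramp.
sharp_patch = [[0, 0, 255, 255]] * 4
soft_patch = [[0, 85, 170, 255]] * 4

sharp_score = laplacian_variance(sharp_patch)
soft_score = laplacian_variance(soft_patch)
print(sharp_score > soft_score)
```

The score is only meaningful when comparing versions of the same render, since noise also pushes it up.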

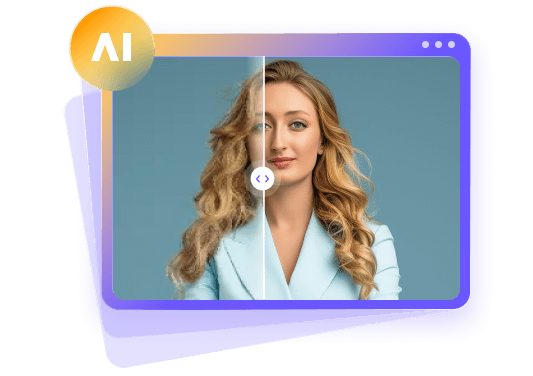

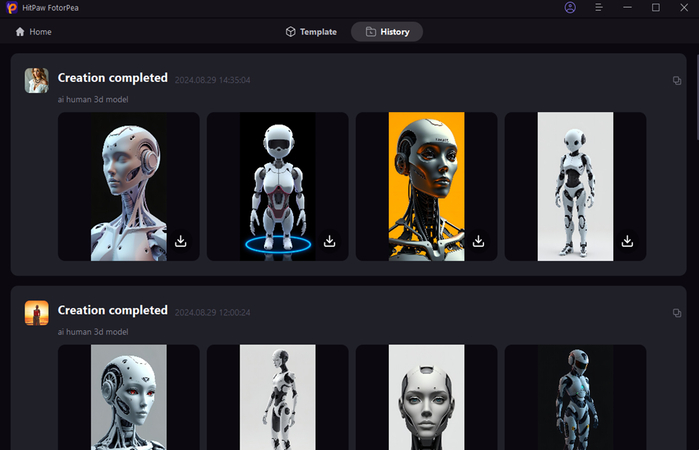

Part 4. Taking 3D Model Renders to the Next Level with HitPaw API (Coming to ComfyUI)

We're integrating HitPaw's Photo Enhancer API into ComfyUI so creatives can improve the image clarity, texture detail, and overall look of renders from their ComfyUI 3D model workflows right inside their pipeline.

The benefits of this are pretty clear:

- Your 3D previews will look sharper and more detailed

- AI-generated renders will have way less noise

- Lighting will be more balanced

- Your results will look way better in portfolios and demos

Instead of having to re-render or manually fiddle with screenshots, you can just pass those renders through the HitPaw enhancer and watch your image quality shoot up on the spot.

Putting it All Together - Image-to-3D + Enhancement

Here's a pretty typical workflow:

- You whip up a mesh using a ComfyUI image-to-3D workflow - or one of the other tools you like

- You render some preview images of your model

- Then you send those previews off to the HitPaw Photo Enhancer API

- You get back much cleaner, much sharper visuals that you can use for a presentation

This makes ComfyUI 3D model generation a heck of a lot more practical for real-world use - especially when you're dealing with clients or teams who are looking at your work visually.
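The "send previews off for enhancement" step above can be sketched as a small batch helper. Since the integration is not out yet, this deliberately makes no assumptions about the HitPaw API itself: the `enhance` argument is a hypothetical callable that takes image bytes and returns enhanced bytes, and `placeholder_enhance` just returns its input unchanged so the example runs without a network connection.

```python
import tempfile
from pathlib import Path


def enhance_previews(preview_paths, enhance, out_dir):
    """Run each preview through `enhance` and save it with an '_enhanced' suffix."""
    out_dir = Path(out_dir)
    out_dir.mkdir(parents=True, exist_ok=True)
    written = []
    for path in map(Path, preview_paths):
        enhanced_bytes = enhance(path.read_bytes())
        target = out_dir / f"{path.stem}_enhanced{path.suffix}"
        target.write_bytes(enhanced_bytes)
        written.append(target)
    return written


def placeholder_enhance(image_bytes):
    # Stand-in only: returns the input unchanged.
    # Replace with a real enhancement call once one is available to you.
    return image_bytes


with tempfile.TemporaryDirectory() as tmp:
    preview = Path(tmp) / "front.png"
    preview.write_bytes(b"fake image bytes")  # not a real PNG, just demo data
    results = enhance_previews([preview], placeholder_enhance, Path(tmp) / "enhanced")
    result_names = [p.name for p in results]
    result_exists = all(p.exists() for p in results)

print(result_names)
```

Keeping the enhancer as a plug-in function means the same loop works whether the enhancement happens through an API call, a ComfyUI node, or a local tool.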

Why This Matters to Creators

The more you use ComfyUI 3D models in your workflow, the more the quality of your presentation becomes just as important as how accurate your geometry is. With enhanced renders:

- You communicate your ideas way more clearly

- Your models look way better to the people you're showing them to

- Feedback cycles speed up because there's less to nitpick over

Put together, ComfyUI's flexibility and HitPaw's enhancement tech bridge that gap between experimentation and actually producing professional-looking stuff.

So Who's Going to Be Using This Workflow?

- Researchers testing out ComfyUI 3D models

- Indie game devs looking to prototype some new assets

- Product designers who want to visualise their ideas

- Creators who are poking around with free ComfyUI 3D model pipelines

If image quality is a priority for your project, then enhancement is less of an optional extra and more of a necessary step.
