PixVerse R1 Guide: Exploring the First Real-Time World Model

AI video generation has traditionally followed a simple pattern: enter a prompt, wait for rendering, then export a finished clip. While effective, this approach limits interactivity and creative flexibility. PixVerse R1 introduces a fundamentally different paradigm, one in which video is generated continuously and responds to user input in real time.

Instead of producing a fixed video file, PixVerse R1 creates a live, evolving visual world that can be guided, adjusted, and reshaped as it runs. This shift from pre-rendered output to real-time interaction marks an important step forward in how AI-generated video can be created, explored, and experienced.

Part 1. What Is PixVerse R1?

PixVerse R1 is a real-time AI world model that generates interactive video continuously rather than producing a fixed, pre-rendered clip.

At its core, PixVerse R1 represents a major technical evolution from earlier AI video models. Instead of treating video as the static result of a single prompt, R1 treats it as a living system: one that reacts instantly to instructions, remembers context, and evolves frame by frame without a predefined end.

This approach enables creators to explore scenes dynamically, experiment visually, and guide outcomes in ways that were not possible with earlier PixVerse versions or traditional AI video generators.

Core Technical Framework

- Omni-Native Multimodal Foundation: PixVerse R1 is built to process text, images, video, and audio within a single unified framework. This allows for seamless fusion where an audio cue can instantly change the visual mood of a scene.

- Autoregressive & Memory Mechanisms: R1 generates each new frame based on the frames that came before it. Unlike models that "forget" earlier frames, it uses memory mechanisms to maintain logical consistency over long durations, keeping characters and environments stable.

- Instant Response Engine: The model features a low-latency architecture designed for "What You See Is What You Get" (WYSIWYG) interaction, delivering real-time video streams rather than making users wait for a rendering bar.
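To make "autoregressive with memory" more concrete, here is a toy Python sketch of the general idea. It is not PixVerse's implementation or API; every name in it (`WorldState`, `anchors`, `predict_next_frame`, `generate`) is hypothetical, frames are plain strings, and the "model" is a placeholder. It shows two assumed kinds of memory: a short rolling window of recent frames (a `deque` with `maxlen`) and persistent scene anchors that every frame reads, inside an open-ended loop with no fixed end.

```python
from collections import deque
from dataclasses import dataclass, field
from itertools import islice
from typing import Iterator


@dataclass
class WorldState:
    # Long-term memory (hypothetical): persistent "anchors" such as setting or characters.
    anchors: dict[str, str]
    # Short-term memory (hypothetical): only the most recent frames are kept as direct context.
    recent: deque = field(default_factory=lambda: deque(maxlen=4))


def predict_next_frame(state: WorldState, step: int) -> str:
    # Placeholder for a neural network; a real model would condition on state.recent and state.anchors.
    setting = state.anchors.get("setting", "unknown")
    return f"frame{step}:{setting}:ctx{len(state.recent)}"


def generate(state: WorldState) -> Iterator[str]:
    # Open-ended autoregressive loop: no fixed end, each frame depends on what came before.
    step = 0
    while True:
        frame = predict_next_frame(state, step)
        state.recent.append(frame)  # the oldest frame is dropped once maxlen is reached
        yield frame
        step += 1


state = WorldState(anchors={"setting": "neon city"})
frames = list(islice(generate(state), 6))  # take as many frames as needed; the stream never ends by itself
print(frames[-1])  # frame5:neon city:ctx4
```

The short-term window stays capped at four frames no matter how long the stream runs, while the anchors never expire. This is one simple way to keep cost bounded without losing core scene facts; how R1 actually balances the two is not described here.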

Key Features of PixVerse R1

- Real-Time Streaming: Generates video of unlimited length without fixed segment constraints.

- Multimodal Input: Supports simultaneous input of text, image, and audio to guide the creative process.

- 1080p High Definition: Delivers crisp, high-quality visuals that rival those of traditional pre-rendered models.

- Interactive World Building: Users can edit scene elements in real-time, treating the video as a living environment.

- All-in-One Toolkit: Integrated Text-to-Video, Image-to-Video, and Image-to-Image capabilities.

Part 2. How the PixVerse R1 Real-Time World Model Works

PixVerse R1 operates through a continuous interaction loop rather than a one-time generation process. Users guide the model step by step while the video is actively running.

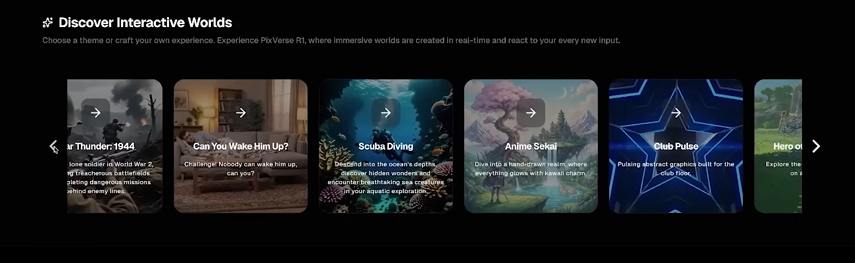

Step 1: Choose a Theme

Start by choosing a theme or crafting a concept based on your own experience, imagination, or creative goal. This theme defines the overall direction of the world (such as atmosphere, setting, or narrative tone) rather than a fixed storyline or shot list.

Step 2: Initialize the World

Provide an initial prompt or visual reference that defines the environment, style, or narrative direction.

Step 3: Real-Time Scene Generation

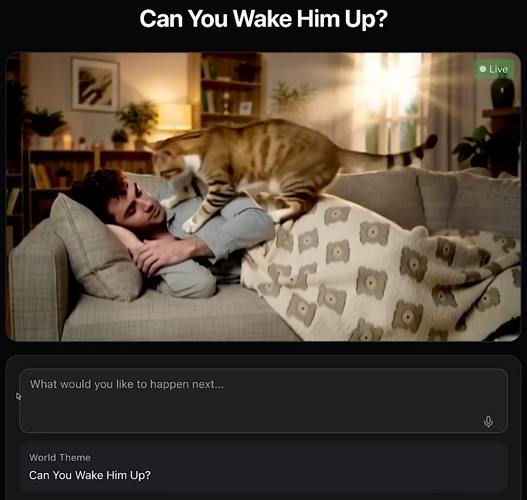

PixVerse R1 begins generating video instantly, creating a live visual world instead of a finished clip.

Step 4: Live Interaction and Control

Additional prompts or inputs can be introduced at any time to change characters, environments, motion, or mood. The system responds immediately.

Step 5: Continuous Evolution

The world continues evolving as long as interaction continues, maintaining contextual memory and visual coherence across time.
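The interaction loop in Steps 2 to 5 can be sketched as a toy Python session. This is an illustration under our own assumptions, not the PixVerse R1 interface: `LiveSession`, `submit`, and `tick` are hypothetical names, the "scene" is a small dictionary, and each "frame" is a text label. The point it shows is that edits submitted mid-stream are queued and applied before the next frame, without restarting generation, while untouched scene elements carry over.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class LiveSession:
    scene: dict[str, str]                          # Step 2: the initialized world (hypothetical model)
    pending: deque = field(default_factory=deque)  # edits submitted while the stream is running
    log: list[str] = field(default_factory=list)   # frames produced so far

    def submit(self, key: str, value: str) -> None:
        # Step 4: queue a live edit such as ("mood", "stormy"); it applies at the next frame.
        self.pending.append((key, value))

    def tick(self) -> str:
        # Steps 3 and 5: apply any queued edits, then render one frame from the updated scene.
        while self.pending:
            key, value = self.pending.popleft()
            self.scene[key] = value  # only the edited element changes; everything else carries over
        frame = f"{len(self.log)}:" + ",".join(f"{k}={v}" for k, v in sorted(self.scene.items()))
        self.log.append(frame)
        return frame


session = LiveSession(scene={"setting": "forest", "mood": "calm"})
session.tick()
session.tick()
session.submit("mood", "stormy")  # arrives mid-stream; no restart needed
print(session.tick())  # 2:mood=stormy,setting=forest
```

In a real system, input would typically arrive on a separate thread or network connection (for example through a thread-safe `queue.Queue`), but the single-threaded version keeps the control flow easy to follow.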

Part 3. What PixVerse R1 Is Best Used For (and What It Isn't)

PixVerse R1 signals a broader industry shift toward interactive AI media, moving beyond pre-rendered videos into real-time experiences. Compared to traditional AI video models, it emphasizes exploration and experimentation over predictability and speed.

Ideal Use Cases

- Interactive storytelling and narrative exploration

- Experimental visual worlds and concept prototyping

- AI-driven simulations and virtual environments

- Research and early-stage creative development

As real-time world models mature, they are expected to influence future applications in immersive media, AI-assisted game design, and interactive entertainment.

Current Limitations of PixVerse R1

- Still an early-stage technology

- Higher computational and hardware requirements

- Less predictable final results compared to pre-rendered models

- Not always suitable for fast, deadline-driven commercial production

Part 4. Pro Tips: How HitPaw VikPea Complements PixVerse R1

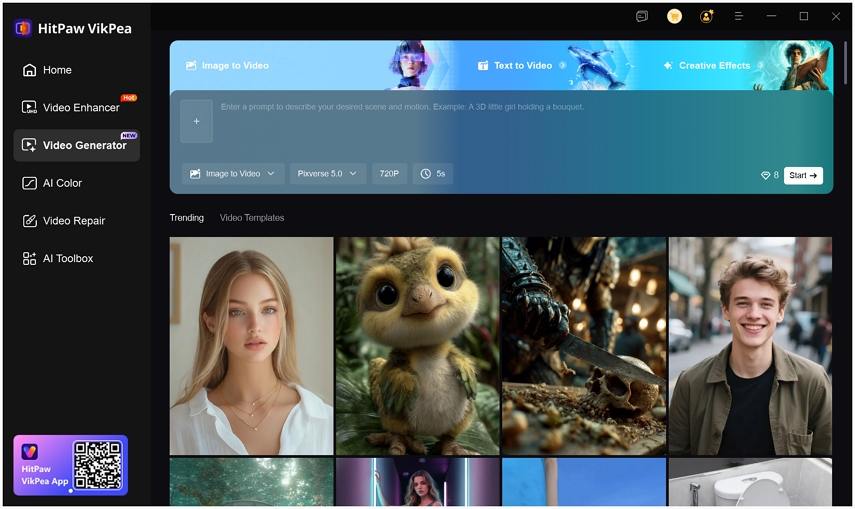

While PixVerse R1 excels at real-time exploration, many creators still need efficient tools for producing polished, share-ready videos. HitPaw VikPea fills this gap by offering a flexible AI video generator that integrates multiple established models within one workflow.

Instead of focusing on real-time interaction, VikPea lets creators choose from several powerful AI engines, such as Kling O1, Kling 2.6, PixVerse 5.5, VEO 3, and Seedance 1.0 Pro, to generate videos optimized for different styles and platforms.

Feature Highlights:

- Multi-Model Integration: Access a curated list of top-tier models like Kling O1, Kling 2.6, PixVerse 5.5, VEO 3, and Seedance 1.0 Pro.

- Versatile Creation: Supports Text-to-Video, Image-to-Video, and popular templates to recreate trending styles.

- Social Media Ready: Choose different aspect ratios (9:16, 16:9, 1:1) for instant posting to TikTok, Instagram, or X.

- 8K AI Enhancement: Includes a built-in AI Video Enhancer to upscale your generated videos up to 8K resolution.

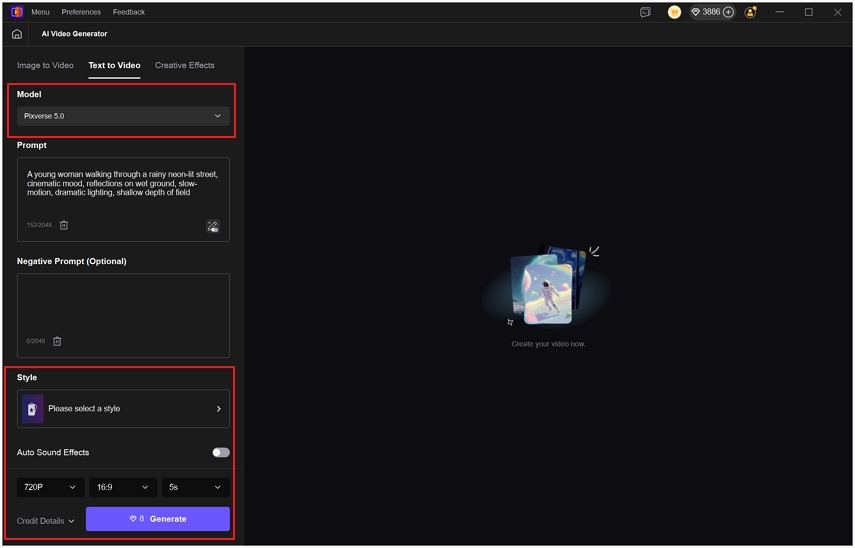

Step 1: Choose the AI Video Generator Feature

On the main interface, choose the Video Generator module, then select Image to Video, Text to Video, or Creative Effects to get started.

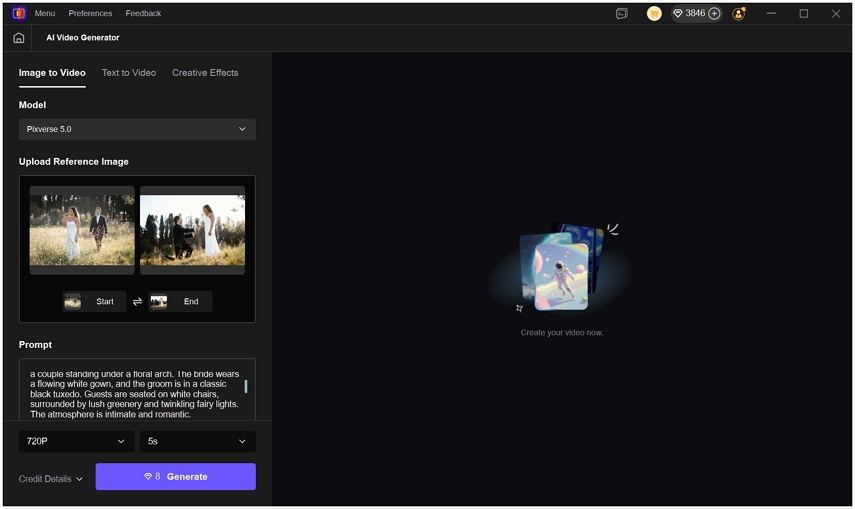

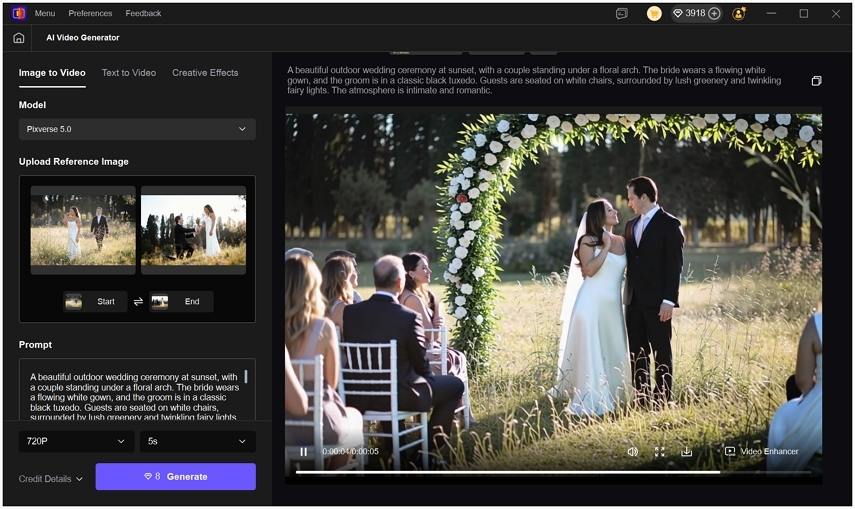

Step 2: Upload Image and Input Prompt

Upload reference image(s) to use as the start and end frames, then enter a descriptive prompt.

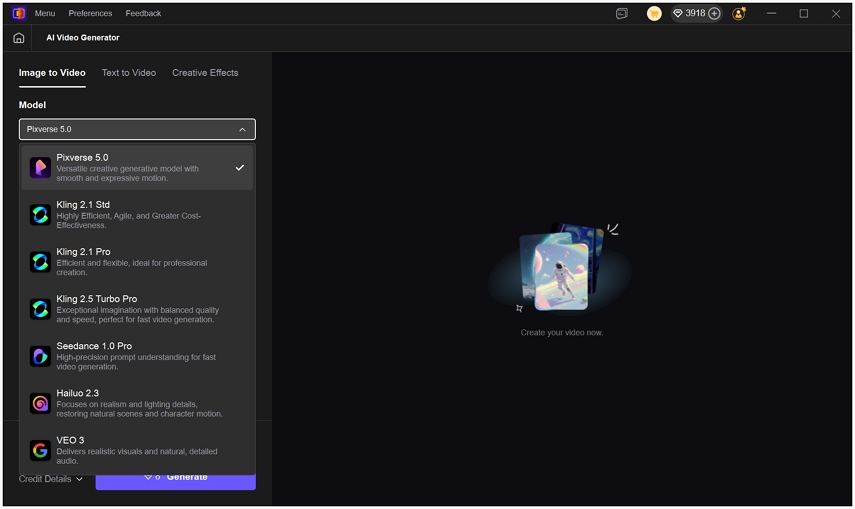

Step 3: Select an AI Video Model

The default model is PixVerse, but you can choose whichever model best fits your creative goal, such as Kling, PixVerse, or Hailuo.

Step 4: Customize Video Settings

Choose a video style, aspect ratio, resolution, and format that match your target platform.

Step 5: Generate and Export

Click Generate to start video creation. Once the process completes, you can preview the AI video. Click the download icon to save it to your computer, or click Video Enhancer for further polishing.

Part 5. PixVerse R1 FAQs

Q1: Is PixVerse R1 available to everyone?

PixVerse R1 currently offers limited access depending on platform availability, with usage often tied to experimental or early-access programs.

Q2: Can PixVerse R1 replace traditional AI video generators?

Not entirely. PixVerse R1 excels at real-time interaction, while traditional AI video generators remain more practical for fast, consistent production.

Q3: What is the difference between real-time and pre-rendered AI video?

Real-time AI video evolves continuously and responds instantly to input, while pre-rendered AI video generates a fixed result after processing.

Conclusion

PixVerse R1 represents a significant step toward real-time, interactive AI video creation, redefining how visual content can be explored and shaped. However, practical video production still relies heavily on pre-rendered workflows for speed and consistency.

By combining real-time experimentation with flexible, multi-model AI video generators like HitPaw VikPea, creators can explore new ideas freely while maintaining efficient production pipelines. This balanced approach reflects where AI video creation is heading: toward both innovation and usability.
