Google Gemini AI Try-On: Selfie-Based Virtual Try-On by Gemini

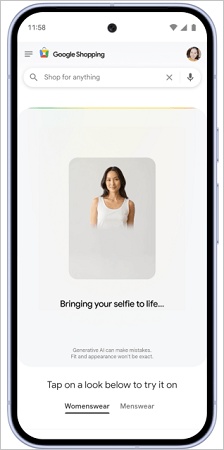

Google's AI-powered virtual try-on technology has taken a major leap forward. On December 11, Google announced a significant upgrade to its AI try-on experience, allowing users to try on clothes using just a single selfie. Previously, users were required to upload a full-body photo, which created friction and limited adoption.

This upgrade is powered by Gemini 2.5 Flash Image, Google's lightweight yet capable image generation and editing model, widely known by its nickname Nano Banana. With this model, Google can generate a realistic, full-body digital avatar from a single selfie, enabling more accessible, personalized, and scalable AI try-on experiences across Google Shopping and Search.

This article provides a comprehensive breakdown of the Google Gemini AI try-on update, including its underlying technology, a comparison to earlier virtual try-on systems, and guidance on how creators and brands can replicate similar workflows using modern AI image generation tools.

Part 1: What Is Google Gemini AI Try-On

Google Gemini AI try-on is not a standalone app or a consumer-facing product branded under "Gemini." Instead, it is an AI-driven capability embedded into Google Shopping and Search, designed to help users visualize how apparel might look on them before making a purchase.

At its core, the try-on experience combines:

- Product imagery provided by retailers

- User-provided visual input (selfie or full-body photo)

- Generative image models that synthesize the two into a realistic preview

How the Selfie-Based Try-On Works

Before this update, Google's AI try-on system relied on a relatively straightforward approach: users uploaded a full-body image, and the system attempted to overlay clothing in a way that preserved posture, proportions, and general appearance. The December 11 announcement introduced a fundamentally different input paradigm. Now, users can:

- Upload a single selfie

- Select a size reference

- Let Google's AI generate a usable full-body digital representation

Behind the scenes, the system creates multiple candidate images inferred from the selfie. Users can choose the version that feels most representative, and that image becomes the basis for virtual try-on. This shift solves several long-standing issues at once:

- It removes the need for mirrors or tripod setups

- It works far better on mobile devices

- It encourages casual experimentation rather than commitment-heavy uploads

From a product perspective, this change aligns perfectly with Google's broader strategy: reduce user effort, increase engagement, and let AI handle complexity invisibly.
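
The flow described in this section (one selfie plus a size reference in, several candidate full-body images out, one picked by the user) can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not Google's implementation: `AvatarCandidate`, `generate_candidates`, `choose_candidate`, and `fake_generator` are hypothetical names, the default of four candidates is arbitrary, and the generator is passed in as a plain callable so that any image model could sit behind it.

```python
from dataclasses import dataclass
from typing import Callable, List

# Hypothetical type: a function that turns (selfie bytes, size label, seed)
# into image bytes. A real system would call an image generation model here.
AvatarGenerator = Callable[[bytes, str, int], bytes]


@dataclass(frozen=True)
class AvatarCandidate:
    """One generated full-body image the user can pick from."""
    index: int
    size_label: str
    image: bytes


def generate_candidates(selfie: bytes, size_label: str,
                        generator: AvatarGenerator,
                        count: int = 4) -> List[AvatarCandidate]:
    """Ask the generator for `count` variations based on the same selfie."""
    if not selfie:
        raise ValueError("selfie must not be empty")
    if count < 1:
        raise ValueError("count must be at least 1")
    return [
        AvatarCandidate(index=i, size_label=size_label,
                        image=generator(selfie, size_label, i))
        for i in range(count)
    ]


def choose_candidate(candidates: List[AvatarCandidate],
                     chosen_index: int) -> AvatarCandidate:
    """Return the candidate the user selected as the try-on base image."""
    for candidate in candidates:
        if candidate.index == chosen_index:
            return candidate
    raise LookupError(f"no candidate with index {chosen_index}")


def fake_generator(selfie: bytes, size_label: str, seed: int) -> bytes:
    """Stand-in generator for illustration; it produces no real image."""
    return b"avatar:" + size_label.encode() + b":" + str(seed).encode()


candidates = generate_candidates(b"selfie-bytes", "M", fake_generator, count=3)
base_image = choose_candidate(candidates, chosen_index=1)
```

Keeping the generator behind a callable means the selection logic can be exercised with a stand-in, as here, before any real model is connected.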

Why This Update Matters for the Broader AI Try-On Landscape

The December update does not change the goal of the system. What it changes is how much information the user must provide in order to get a personalized result.

Previously, requiring a full-body photo created a practical barrier. Many users were unwilling or unable to upload such images due to privacy concerns, camera limitations, or simple inconvenience. By allowing a single selfie to serve as the starting point, Google dramatically lowers friction while keeping the experience personalized. Instead of relying on rigid 3D avatars or manually tuned mannequins, Google is betting on:

- Generative image models

- Probabilistic inference

- Scalable, data-driven realism

This signals a broader industry shift. Virtual try-on is no longer primarily a 3D modeling problem. It is increasingly an AI image generation problem, where realism emerges from data and model training rather than handcrafted assets.

As these models improve, we can expect try-on experiences to expand beyond shopping into:

- Fashion design previews

- Social content creation

- Influencer marketing

- Virtual styling tools

Part 2: Gemini 2.5 Flash Image and the Role of Nano Banana

The technological foundation of this update lies in Gemini 2.5 Flash Image, Google's fast, multimodal image generation and editing model. Unlike large, slower generative models designed for artistic exploration, Flash Image models are optimized for:

- Low latency

- High consistency

- Scalable consumer deployment

- Image-to-image transformations

Nano Banana is the popular nickname for Gemini 2.5 Flash Image itself. The model is particularly well suited to tasks that require generating realistic images from limited visual input.

In the context of AI try-on, Nano Banana enables several critical capabilities:

- Inferring plausible full-body structure from facial cues

- Maintaining identity consistency across generated outputs

- Producing images that look coherent under clothing overlays

- Doing all of this quickly enough for real-time shopping experiences

Crucially, the model is not reconstructing a biometric replica of the user. Instead, it generates a statistically plausible human figure that aligns with the user's selfie, selected size, and general proportions. This design choice balances realism, performance, and privacy.

Part 3: Accuracy, Realism, and the Limits of AI Try-On

One of the most common questions surrounding Google Gemini AI try-on is accuracy. How "real" is the result, and how much should users trust it? Google has been careful not to overpromise. The generated try-on images are visually convincing, particularly for understanding:

- How a garment hangs

- How colors and patterns appear

- How an outfit looks in context

However, they are not meant to guarantee fit down to the centimeter. Fabric stretch, personal posture habits, and subtle body variations remain outside the scope of current generative models.

This distinction is important. By framing AI try-on as a decision-support visualization tool, Google avoids misleading users while still delivering substantial value.

From a user-experience standpoint, this approach works. The goal is not perfection; it is confidence.

Part 4: A Practical AI Try-On Solution Using AI Image Generation

For users interested in experimenting with AI try-on outside Google's closed environment, HitPaw FotorPea offers a practical, image-generation-based solution aligned with the same core ideas. Rather than focusing on rigid avatars, this approach emphasizes AI-generated human imagery combined with clothing visualization, making it suitable for creative try-on use cases.

How This Tool Fits the AI Try-On Concept

The platform supports:

- AI image generation for full-body human visuals

- Image-to-image transformation for clothing application

- Refinement tools to improve realism and presentation

This makes it useful for:

- Visualizing outfits

- Creating virtual try-on mockups

- Producing fashion-related marketing content

Core Capabilities for AI Try-On Scenarios

- AI Image Generation: Generate realistic human figures that can act as the base for virtual try-on, similar in concept to how Gemini models infer full-body visuals.

- Image-to-Image Clothing Visualization: Apply apparel images to generated human figures, allowing users to preview how outfits might appear.

- AI Photo Enhancement: Improve lighting, clarity, and visual consistency, which is essential for believable try-on images.

- Background and Composition Control: Adjust or remove backgrounds to create clean, product-ready visuals.

- A Simple AI Try-On Workflow: Start with a selfie or a generated human image, apply clothing visuals through AI image generation, refine the result using enhancement tools, and export a polished image suitable for preview or presentation.

This mirrors the logic of Google Gemini AI try-on, adapted for broader creative and experimental use.
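
The simple workflow listed above (start from a base image, apply clothing, refine, export) is essentially a chain of image-to-image stages. The sketch below shows one way to model that chain; it is an assumption-laden illustration, not HitPaw FotorPea's or Google's actual pipeline. `TryOnImage`, `make_stage`, and `run_workflow` are hypothetical names, and each transform is a placeholder that only tags the bytes instead of editing a real image.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class TryOnImage:
    """Image data plus a log of the stages applied to it."""
    data: bytes
    history: List[str] = field(default_factory=list)


# A stage takes an image and returns a new image.
Stage = Callable[[TryOnImage], TryOnImage]


def make_stage(name: str, transform: Callable[[bytes], bytes]) -> Stage:
    """Wrap a bytes-to-bytes transform so it also records its name."""
    def stage(image: TryOnImage) -> TryOnImage:
        return TryOnImage(transform(image.data), image.history + [name])
    return stage


def run_workflow(base: TryOnImage, stages: List[Stage]) -> TryOnImage:
    """Apply the stages in the order given and return the final image."""
    result = base
    for stage in stages:
        result = stage(result)
    return result


# Placeholder transforms; real ones would call an image model or an editor.
apply_clothing = make_stage("apply_clothing", lambda d: d + b"+garment")
enhance = make_stage("enhance", lambda d: d + b"+enhanced")
export = make_stage("export", lambda d: d + b"+png")

final = run_workflow(TryOnImage(b"base"), [apply_clothing, enhance, export])
print(final.history)
```

Recording the stage names alongside the image makes it easy to see which steps produced a given preview, which helps when comparing variations of the same outfit.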

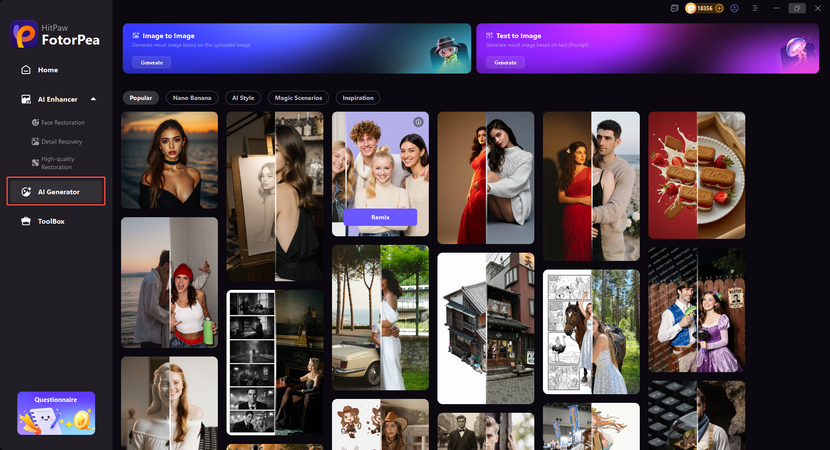

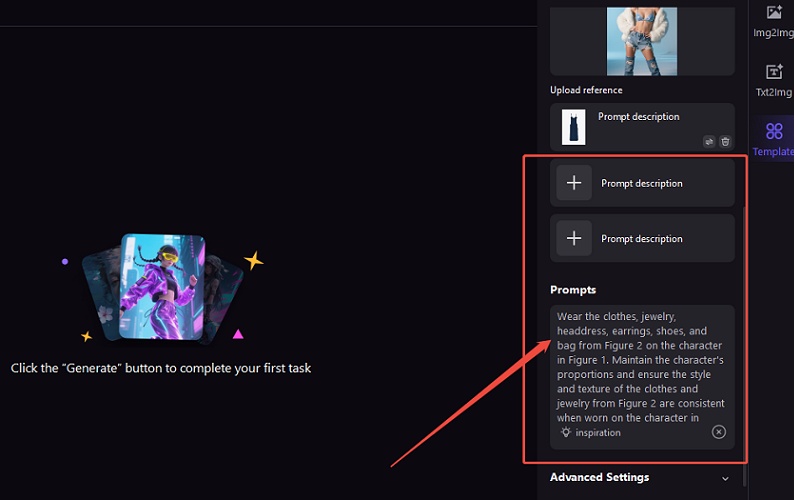

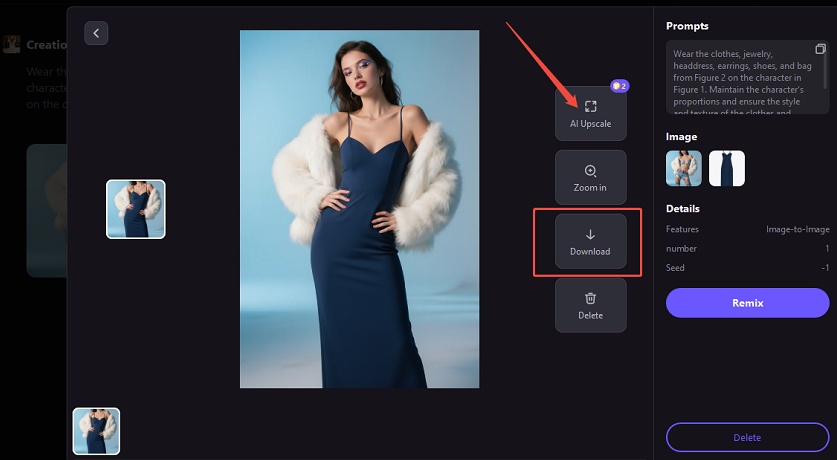

How to Use the AI Try-On Feature in HitPaw FotorPea

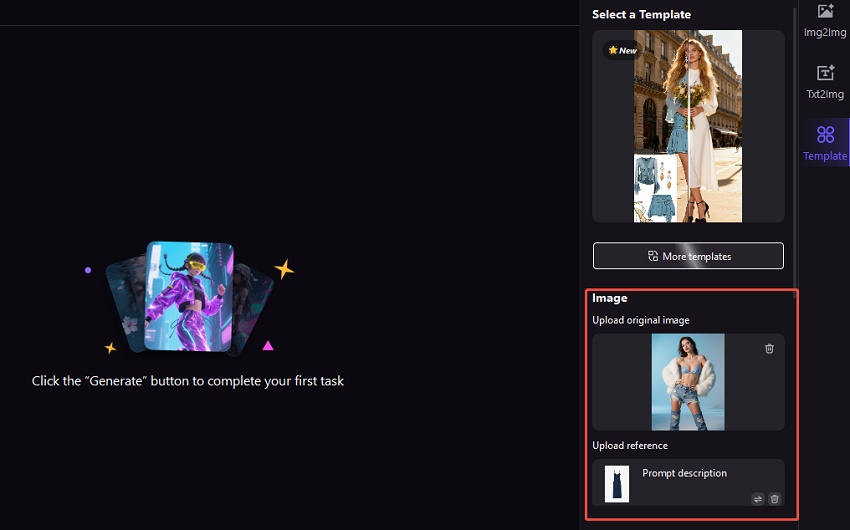

Step 1. Launch HitPaw FotorPea and navigate to the AI Generator. Choose "Image to Image" for try-on, choose "Text to Image" to create a new try-on model, or use our AI try-on templates directly.

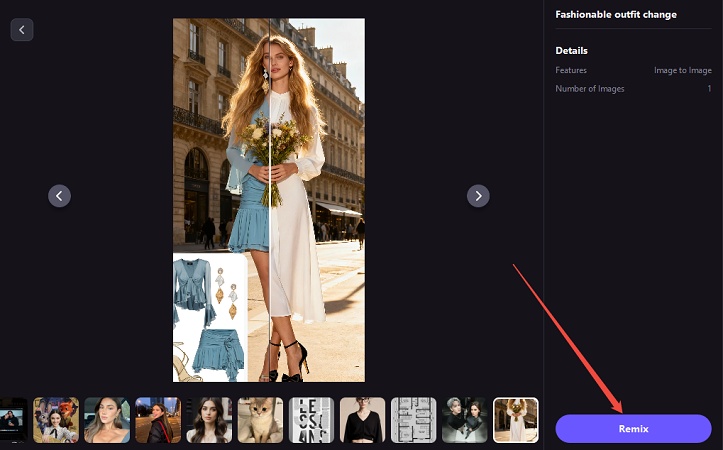

Step 2. Upload a selfie or a generated human image that will act as the base model. For the clothing, use an AI-generated apparel image or an existing one as input.

Step 3. After importing the images, describe what you want. You can also use our preset text prompt, and the AI will create your image.

Step 4. The AI will visualize the clothing on the model. Next, you can enhance the try-on image using the built-in enhancer feature, or simply download the high-resolution image for marketing and testing.

This approach mirrors the conceptual workflow behind Google Gemini AI try-on, adapted for broader creative use.
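
For the "describe what you want" step, a small helper can keep text prompts consistent across many garments. The following is a hedged sketch only: `build_try_on_prompt` is a hypothetical function, and the prompt wording is an assumption for illustration, not a preset from HitPaw FotorPea or any other tool.

```python
def build_try_on_prompt(garment: str, color: str = "", setting: str = "") -> str:
    """Assemble a plain-language try-on prompt from a few optional details."""
    garment = garment.strip()
    if not garment:
        raise ValueError("garment description must not be empty")
    # Prefix the garment with its color when one is given.
    described = f"{color.strip()} {garment}".strip()
    parts = [
        f"Dress the person in the uploaded photo in a {described}.",
        "Keep the face, pose, and body proportions unchanged.",
    ]
    if setting.strip():
        parts.append(f"Place them in {setting.strip()}.")
    return " ".join(parts)


prompt = build_try_on_prompt("linen blazer", color="navy",
                             setting="a bright studio")
print(prompt)
```

Stating explicitly what should stay unchanged (face, pose, proportions) reflects the identity-consistency goal discussed earlier; how well a given tool honors such instructions will vary.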

Part 5: How Google Gemini AI Try-On Compares to Traditional Virtual Try-On Solutions

Most legacy try-on tools relied on:

- Pre-built 3D mannequins

- Manual size selection

- Rigid overlays that failed under real-world variation

These systems often felt artificial and inaccurate.

Gemini AI Try-On Advantages

Compared to traditional systems, Gemini AI try-on offers:

- AI-generated human models instead of static avatars

- Identity-aware rendering based on real user input

- Faster iteration using generative image models

- Seamless integration into search-based shopping flows

This marks a shift from template-based try-on to generative try-on.

Use Cases Beyond Online Shopping

Although currently focused on e-commerce, the underlying Gemini AI try-on technology enables broader applications:

- Fashion design prototyping

- Influencer outfit previews

- Virtual styling consultations

- Marketing visuals for apparel brands

- Personal wardrobe visualization

At its core, this is an AI image generation workflow optimized for human-clothing interaction, not just shopping.

FAQ: Google Gemini AI Try-On

What is Google Gemini AI try-on?

It is an AI-powered virtual try-on system that allows users to see how clothes look on them using AI-generated human models.

Do I need to upload a full-body photo?

No. The latest update allows users to upload just a selfie.

Which model powers the feature?

It is powered by Gemini 2.5 Flash Image, the model also known as Nano Banana.

How accurate are the try-on results?

The results are visually realistic, but the feature is designed for fashion visualization rather than precise body measurement.

Conclusion

Google Gemini AI try-on represents a clear shift toward more accessible and scalable virtual try-on experiences. By enabling selfie-based try-on with Gemini 2.5 Flash Image (Nano Banana), Google shows how AI image generation can reduce friction while maintaining convincing visual personalization.

This update also reflects a broader trend: modern AI try-on is increasingly driven by generative image models rather than rigid 3D avatars. For users who want to explore similar workflows outside Google's ecosystem, tools like HitPaw FotorPea provide a practical way to experiment with AI try-on using AI image generation and image-to-image visualization. Whether for fashion previews, creative projects, or marketing visuals, it offers an accessible entry point into AI-powered virtual try-on.
