Google AI Glasses: Everything You Need to Know About Google's New AI Wearable

At Google I/O 2025, Google officially unveiled its upcoming AI-powered smart glasses, marking the company's biggest return to wearable computing since the original Google Glass. These new Google AI glasses run on Android XR and are tightly integrated with Gemini, Google's multimodal AI assistant, promising hands-free AI help, real-time translation, and optional in-lens displays. Early partner designs and developer tools point to a broad ecosystem of form factors and use cases.

Part 1. What are Google AI Glasses?

Google AI glasses are a new class of smart glasses that blend subtle augmented reality with conversational AI. They act as a wearable companion that surfaces helpful information in your field of view, listens and responds via Gemini, and pairs with Android phones for extra compute and connectivity. The concept focuses on being light, stylish, and useful for everyday tasks while delivering privacy-first AI assistance.

Key Specifications of Google AI Glasses

- Display Options: Available in three versions: no display, monocular, and binocular.

- AI Integration: Powered by the Gemini AI assistant for contextual responses.

- Connectivity: Designed to work seamlessly with Android smartphones.

- Battery Life: Targeted for all-day usage on a single charge.

- Prescription Support: Compatible with prescription lenses through partner eyewear brands.

- Weight: Lightweight design for comfortable all-day wear.

Google AI Glasses Features: What Makes Them Special

- Gemini Integration: The glasses use Gemini to interpret what you see and hear for contextual assistance.

- Multimodal Capabilities: Cameras, microphones, and speakers process voice, visual, and environment data simultaneously.

- Optional In-Lens Display: Select models have a discreet in-lens display for private notifications and navigation.

- Real-Time Translation: Live subtitles and translated audio enable conversations across many languages.

- Voice-Activated Photography: Capture photos and videos hands-free with voice commands.

- Navigation and Messaging: Turn-by-turn directions and message previews delivered via lens or audio prompts.

Part 2. What Can Google AI Glasses Do?

Google AI glasses are designed to enhance productivity, learning, and daily convenience by combining small glanceable displays with Gemini intelligence. For professionals, the glasses can surface critical information without interrupting workflows; for everyday users, they act as intelligent assistants for travel, shopping, fitness, and entertainment. Expect use cases that prioritize hands-free workflows, privacy controls, and accessible features for travel and communication.

For Professionals

- Healthcare: Display patient context and procedure prompts for safer bedside care.

- Education: Offer immersive lessons and language immersion overlays for learners.

- Manufacturing: Provide step-by-step assembly instructions and real-time quality checks.

- Retail: Show product details, inventory overlays, and pricing info to sales staff.

For Everyday Users

- Travel: Offer live translation, turn-by-turn navigation, and cultural tips on the go.

- Shopping: Run quick price comparisons, show product reviews, and surface deals.

- Fitness: Deliver workout guidance, count reps, and monitor performance metrics.

- Entertainment: Power AR games, immersive clips, and social sharing from live POV recordings.

Part 3. When Are Google AI Glasses Coming Out and Availability?

Google has stated that Android XR-powered glasses will arrive in 2025, with developer tools and early hardware access rolling out first. Google is working with partner brands to refine stylish frames and prescription support before a broader public launch. Some reports and partner roadmaps suggest staged availability in select markets in late 2025, followed by wider distribution in 2026.

Google AI Glasses Price

Google has not published official pricing for its AI glasses. Analysts and industry observers estimate price bands based on display options and competing devices:

- Basic Model (no display): $299 to $499.

- Monocular Model: $699 to $999.

- Binocular Model: $1,299 to $1,799.

These estimates reflect likely manufacturing costs, premium optics, and Google's strategy compared with existing smart glasses and AR headsets. Final pricing will likely vary by region and by optional prescription or fashion partnerships.

Part 4. Google AI Glasses vs Meta Ray-Ban Smart Glasses vs Apple Vision Pro

The three approaches to wearable AR differ in ambition, price, and design philosophy. The comparison below highlights likely strengths and trade-offs.

| | Google AI Glasses | Meta Ray-Ban Smart Glasses | Apple Vision Pro |
|---|---|---|---|
| Focus | AI assistant and everyday wearability with Gemini | Social AR and lightweight style, social features | High-end spatial computing and immersive experiences |
| Display options | No display / monocular / binocular (optional in-lens HUD) | Mostly monocular, lightweight HUD | Wide-field binocular micro-OLED immersive display |
| Ecosystem | Android XR, Gemini, partner eyewear brands | Meta ecosystem, social apps, partnerships | Apple ecosystem, deep device integration |
| Best for | Hands-free assistant, translation, navigation, productivity | Casual AR, social, camera-first experiences | Pro-level spatial apps and entertainment |
| Likely price range | $299 to $1,799 (estimates vary by model) | $299 and up depending on model | Premium, $2,000+ range |
| Prescription support | Partner frames and prescription lenses | Limited partner options | Strong optical and comfort focus |
| Privacy approach | On-device processing emphasis, opt-in experiences | Social features with profile focus | Hardware-level controls, per-app privacy settings |

Further Reading: Make AI Glass Footage Look Its Best with HitPaw VikPea

AI glasses are great for point-of-view clips, travel vlogs, and quick captures, but wearable cameras often produce footage that needs polishing. If you record with Google AI glasses or another smart wearable and want to improve clarity, stabilize shaky clips, or upscale low-resolution footage, HitPaw VikPea is a helpful option. VikPea offers multiple AI models that restore detail, sharpen edges, and reduce noise so your smart glasses videos look clearer on larger screens and social platforms. Below are its top features and an easy three-step workflow to get started.

- Upscales footage to 4K or 8K while preserving natural motion and detail.

- AI noise reduction removes grain but keeps fine textures intact.

- Sharpen model refines edges without introducing harsh artifacts.

- Portrait model restores facial detail and natural skin tones in close-ups.

- Frame repair corrects jitter and smooths dropped frames for fluid playback.

- Batch export speeds up processing of multiple clips with GPU acceleration.

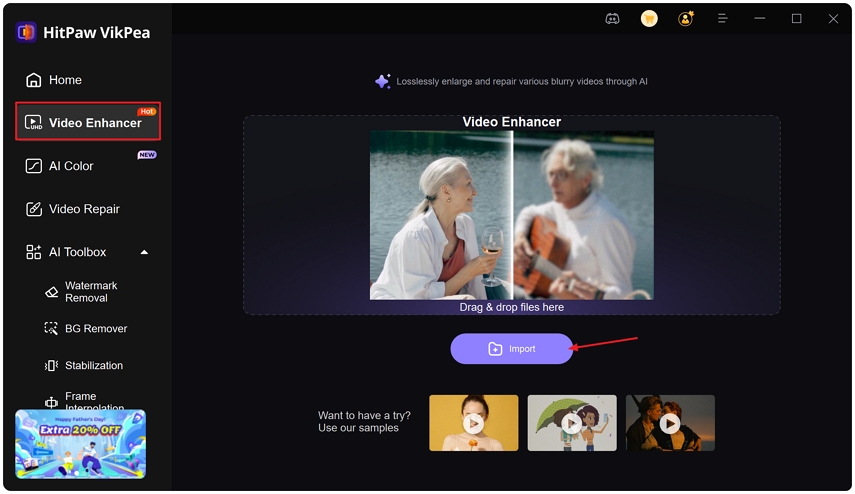

Step 1. Download and launch HitPaw VikPea on your computer. Import your video by clicking "Choose file" or by dragging and dropping it into the window.

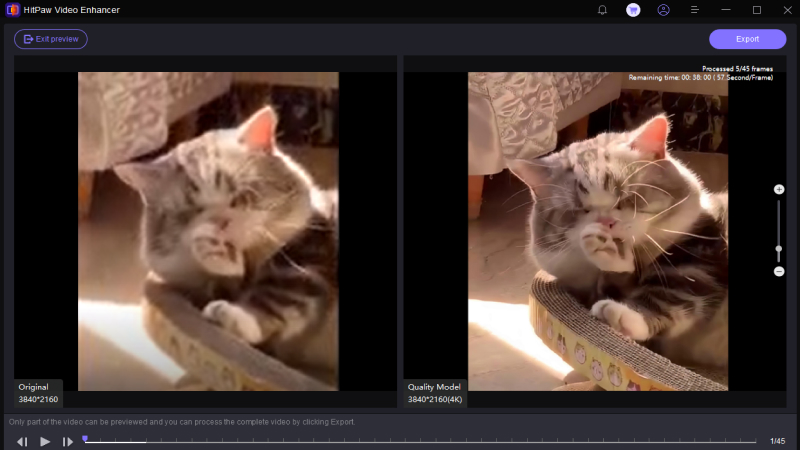

Step 2. Select from multiple AI models such as General, Sharpen, Portrait, and Video Quality Repair. Pick the model that matches your enhancement goals.

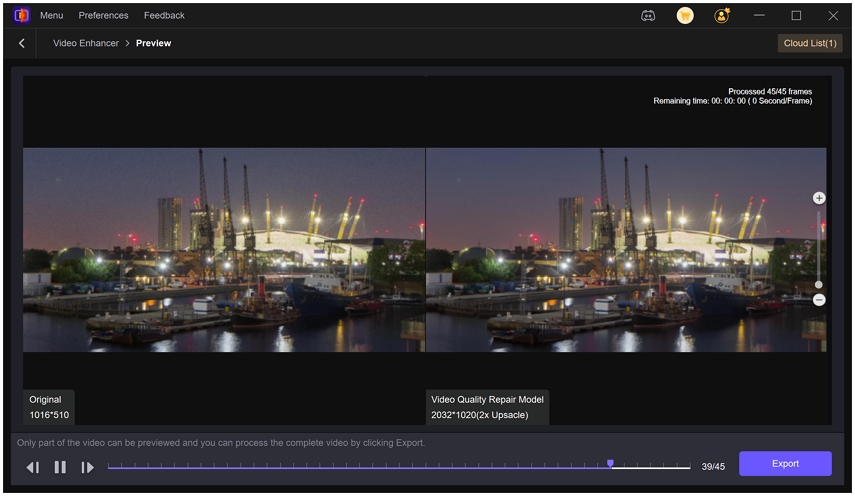

Step 3. Under Export Settings, choose the desired resolution. VikPea supports upscaling to 4K or 8K. Preview the changes, then press Export to save your enhanced video.

Frequently Asked Questions About Google Glasses

Will Google AI glasses offer a full holographic AR display?

No. Current Google designs favor a small in-lens HUD for private notifications rather than a wide field-of-view holographic overlay. The emphasis is on subtle, glanceable information that does not obstruct normal vision.

Can Google AI glasses replace a smartphone?

Not yet. Google AI glasses are intended as a companion to your Android phone for compute, connectivity, and app continuity. They are built to complement phones, not fully replace them at launch.

How do Google AI glasses differ from Meta's and Apple's devices?

Google focuses on openness, fashion partnerships, and Gemini-driven micro-workflows to solve real tasks in daily life. Meta emphasizes social and lightweight camera experiences, while Apple aims for premium comfort and a deeply integrated ecosystem. In short, Google aims for everyday AI assistance, Meta targets social AR usage, and Apple prioritizes high-fidelity spatial computing.

Conclusion

Google AI glasses bring Gemini intelligence to a wearable form factor through Android XR, optional in-lens displays, and partner eyewear designs. With real-time translation, voice camera controls, and glanceable AI help, Google aims to make AI more natural and wearable. Public availability is expected in 2025 with staged rollouts and varied pricing depending on display options. If you plan to record and share wearable footage from Google's new AI glasses, tools like HitPaw VikPea can polish those clips for clearer, more cinematic results.
