TurboDiffusion and the Future of Fast AI Video Generation

At the end of 2025, ShengShu Technology and Tsinghua University's TSAIL Lab officially open-sourced an acceleration framework called TurboDiffusion.

The release immediately drew widespread attention in the AI community. Many researchers and developers described it as the "DeepSeek moment" of AI video generation - a milestone that fundamentally changed expectations around speed, efficiency, and real-world usability.

In this article, we explore what TurboDiffusion is, how it differs from previous acceleration approaches, how it can be used, and why it matters for the future of AI video creation.

Part 1. What Is TurboDiffusion?

TurboDiffusion is an open-source acceleration framework designed specifically for diffusion-based AI video generation.

Its goal is simple but ambitious:

"Reduce video generation time by orders of magnitude while preserving visual quality."

Rather than introducing a brand-new model architecture, TurboDiffusion focuses on optimizing inference, making existing text-to-video and image-to-video diffusion models dramatically faster and more deployable.

Core Characteristics:

- Built for diffusion-based video models

- Targets inference-time acceleration

- Supports high-quality, multi-frame video generation

- Designed for real-world production, not just research benchmarks

- Open-source and extensible

Part 2. TurboDiffusion: Principles, Advantages, and What Makes It Different

Before TurboDiffusion, most AI video "acceleration" relied on:

- Reducing resolution or frame count

- Aggressive frame skipping

- Heavy model pruning at the cost of quality

- Hardware brute force (more GPUs, more memory)

These approaches often resulted in:

- Visible quality degradation

- Unstable motion

- Limited scalability

TurboDiffusion takes a fundamentally different approach. Instead of simplifying the output, it optimizes the internal computation path of diffusion models.

Core Principles Behind TurboDiffusion

TurboDiffusion combines several advanced ideas into a unified framework:

- Step Reduction via Distillation

- Efficient Attention Mechanisms

- Low-Bit Quantization (W8A8)

- Inference-Oriented Design

Step reduction via distillation: Traditional diffusion models require dozens or even hundreds of sampling steps. TurboDiffusion distills the model so that it needs far fewer steps while preserving output quality.
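To see why the step count matters so much, note that every sampling step costs one full forward pass of the network. The toy sampler below is a minimal sketch of that relationship, not TurboDiffusion's actual sampler: `toy_denoiser`, `TARGET`, and the idealized straight-line trajectory are all assumptions made for illustration. Under that assumption, 4 steps reach the same result as 50, which is the behavior distillation tries to train into a real model.

```python
TARGET = 0.25  # hypothetical "clean" value the toy model should reach


def toy_denoiser(x, t):
    # Hypothetical stand-in for a video diffusion network.
    # Returns the velocity of a straight path from the current point to TARGET.
    return (x - TARGET) / t


def sample(denoise, x, num_steps):
    """Fixed-step Euler sampler that also counts network calls."""
    calls = 0
    dt = 1.0 / num_steps
    for i in range(num_steps):
        t = (num_steps - i) / num_steps  # time runs from 1 (noise) down to dt
        x = x - dt * denoise(x, t)       # one forward pass per step
        calls += 1
    return x, calls


for steps in (50, 4):
    result, calls = sample(toy_denoiser, 1.0, steps)
    print(f"{steps} steps: {calls} network calls, result {result:.4f}")
```

In a real video model each call is a large transformer pass over many frames, so cutting the number of calls cuts generation time almost proportionally.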

Efficient attention mechanisms: TurboDiffusion replaces full attention computations with optimized and sparse variants, significantly lowering compute cost.
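As a rough illustration of what "sparse" means here, the sketch below lets each query attend only to its k best-matching keys instead of all of them. Top-k selection is a stand-in chosen for clarity; the article does not say which sparse variant TurboDiffusion uses, and the function name is hypothetical. This toy version still scores every key in order to pick the top k, whereas production kernels are designed to avoid that work as well.

```python
import math


def topk_sparse_attention(queries, keys, values, k):
    """For each query, attend only to the k highest-scoring keys."""
    scale = 1.0 / math.sqrt(len(keys[0]))
    dim = len(values[0])
    outputs = []
    for q in queries:
        scores = [scale * sum(qi * ki for qi, ki in zip(q, key)) for key in keys]
        # Indices of the k largest scores; every other key is ignored.
        keep = sorted(range(len(keys)), key=lambda j: scores[j], reverse=True)[:k]
        # Numerically stable softmax over the kept scores only.
        top = max(scores[j] for j in keep)
        weights = [math.exp(scores[j] - top) for j in keep]
        total = sum(weights)
        outputs.append([
            sum(w * values[j][d] for w, j in zip(weights, keep)) / total
            for d in range(dim)
        ])
    return outputs


queries = [[1.0, 0.0]]
keys = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0]]
values = [[10.0, 0.0], [0.0, 10.0], [5.0, 5.0]]
print(topk_sparse_attention(queries, keys, values, k=1))
print(topk_sparse_attention(queries, keys, values, k=3))  # k = all keys is dense attention
```

With k much smaller than the number of keys, the softmax and the weighted sum touch only a fraction of the sequence, which is where the savings come from for long video token sequences.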

Low-bit quantization (W8A8): By quantizing both weights and activations to 8 bits, TurboDiffusion reduces memory usage and accelerates inference without noticeable quality loss.
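A minimal sketch of the W8A8 idea follows, assuming symmetric per-tensor scaling; the article does not specify the exact scheme, and the helper names are hypothetical. Weights and activations are each mapped to 8-bit integer codes plus one floating-point scale, the dot product is accumulated in integers, and the result is rescaled at the end.

```python
def quantize_int8(xs):
    """Symmetric per-tensor quantization: floats -> int8 codes plus one scale."""
    max_abs = max(abs(x) for x in xs)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    codes = [max(-127, min(127, round(x / scale))) for x in xs]
    return codes, scale


def w8a8_dot(weights, activations):
    """Dot product with 8-bit weights (W8) and 8-bit activations (A8)."""
    w_codes, w_scale = quantize_int8(weights)
    a_codes, a_scale = quantize_int8(activations)
    acc = sum(w * a for w, a in zip(w_codes, a_codes))  # integer accumulation
    return acc * w_scale * a_scale                      # rescale back to float


weights = [0.5, -1.0, 0.25]
activations = [2.0, 1.0, -4.0]
exact = sum(w * a for w, a in zip(weights, activations))
print("exact:", exact, "w8a8:", w8a8_dot(weights, activations))
```

The quantized result differs slightly from the exact one, which is the small accuracy cost traded for roughly a quarter of the memory of 32-bit floats and faster integer arithmetic on supporting hardware.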

Inference-oriented design: Unlike training-time optimizations, TurboDiffusion is built specifically for fast deployment and real-world usage.

Key Advantages

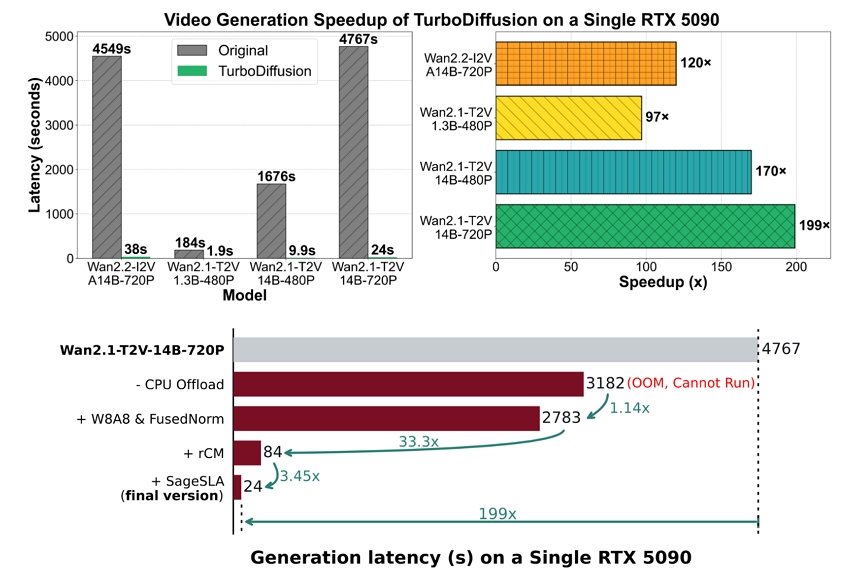

- Reported 100× to 200× faster video generation

- Near-lossless visual quality

- Generation in seconds on a single GPU

- Compatible with multiple video diffusion architectures

- Scalable from research demos to commercial platforms

This is why TurboDiffusion is often compared to a "DeepSeek moment" - it changes expectations for what AI video generation speed should look like.

Part 3. How to Access and Use TurboDiffusion

TurboDiffusion is officially released as an open-source project and can be accessed through:

- Official GitHub repositories maintained by the research team

- Research documentation and demo implementations

- Community-adapted versions for popular video diffusion models

Because it is framework-level technology, TurboDiffusion is mainly targeted at:

- AI researchers

- Model developers

- Platforms building video generation services

How TurboDiffusion Is Used in Practice

In real-world usage, TurboDiffusion is typically:

- Integrated into an existing video diffusion pipeline

- Applied at inference time

- Tuned for specific models or hardware setups

For most end users, TurboDiffusion works behind the scenes. You benefit from it not by installing it yourself, but by using AI video tools that already integrate accelerated models.

Part 4. What TurboDiffusion Means for the AI Video Industry

TurboDiffusion represents more than a speed boost - it signals a structural shift in AI video creation.

Industry-Level Impact

- From minutes to seconds: AI video becomes interactive rather than batch-based

- Lower hardware barriers: Fewer GPUs, lower costs

- Creator-first workflows: Faster previews, quicker iterations

- Commercial scalability: Real-time and high-volume generation becomes feasible

In short, acceleration is no longer optional. It is becoming a baseline requirement for modern AI video platforms.

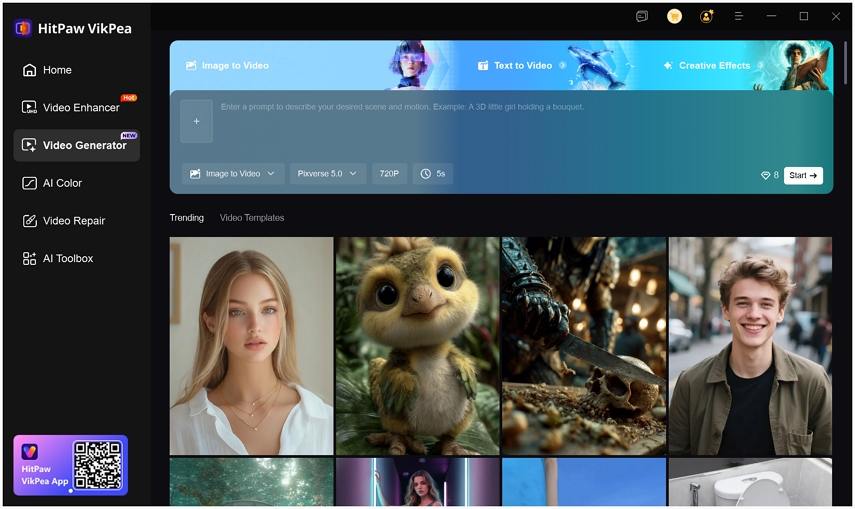

Part 5. Pro Tip: Generate Accelerated AI Videos with HitPaw VikPea

While TurboDiffusion is a foundational technology, most users want a ready-to-use solution rather than a research framework.

This is where tools like HitPaw VikPea come in.

HitPaw VikPea integrates a range of leading video models. Several of them, such as Kling 2.5 Turbo and Veo 3 Fast, are speed-optimized variants in the same spirit as the distillation and quantization techniques found in TurboDiffusion.

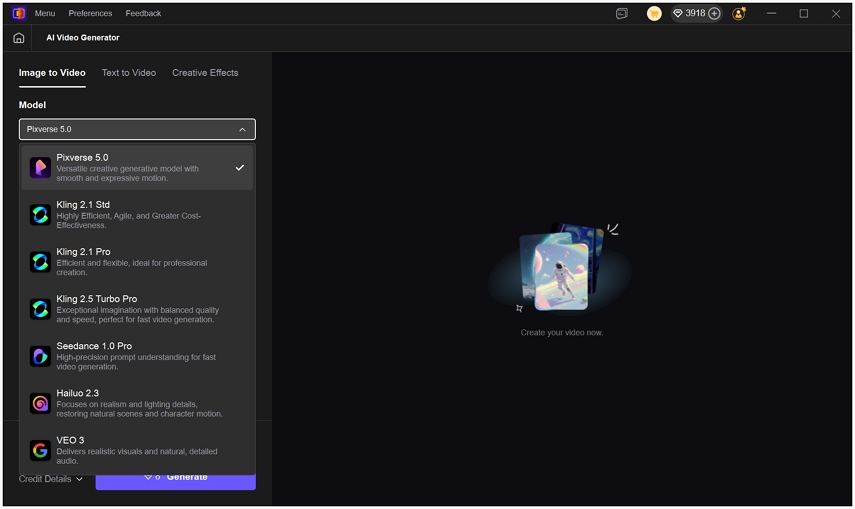

- Integrated Model Library: Access Kling 2.1, Kling 2.5 Turbo, Hailuo 2.3, Pixverse 5.0, and Veo 3 in one interface.

- Dual Power: It can generate video from text or images, and its "Enhancer" module can upscale those results to 4K or 8K resolution.

- Speed & Quality: Use the "Turbo" versions for rapid prototyping and the "Pro" versions for final cinematic rendering.

- Video Customization: Set the video size, aspect ratio, and duration for platforms such as TikTok and Instagram.

How to Generate AI Video with HitPaw VikPea:

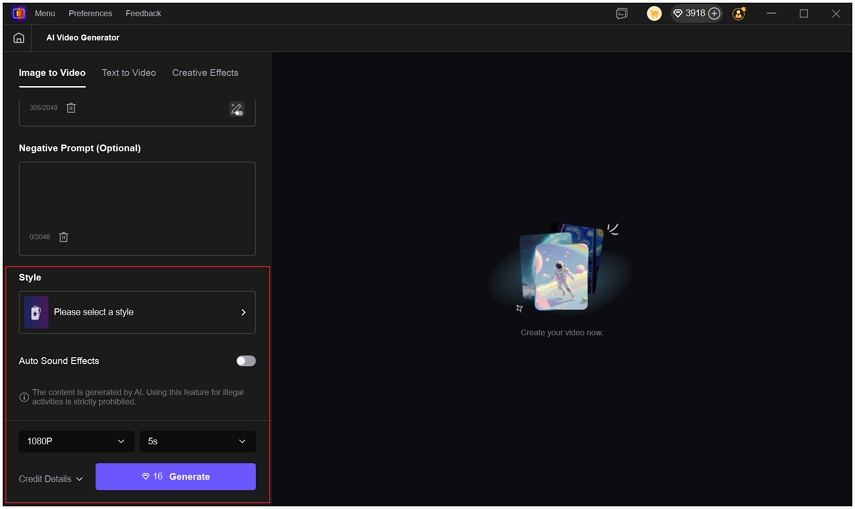

Step 1. Launch & Select:

Open HitPaw VikPea and click the AI Video Generator module. Select Text to Video, Image to Video, or Creative Effects.

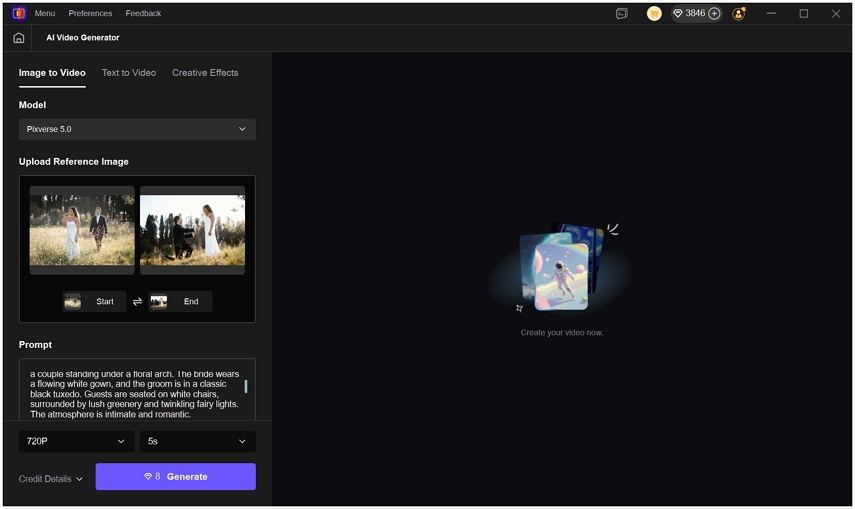

Step 2. Input Prompt:

Enter your text description or upload a reference image for Image-to-Video generation.

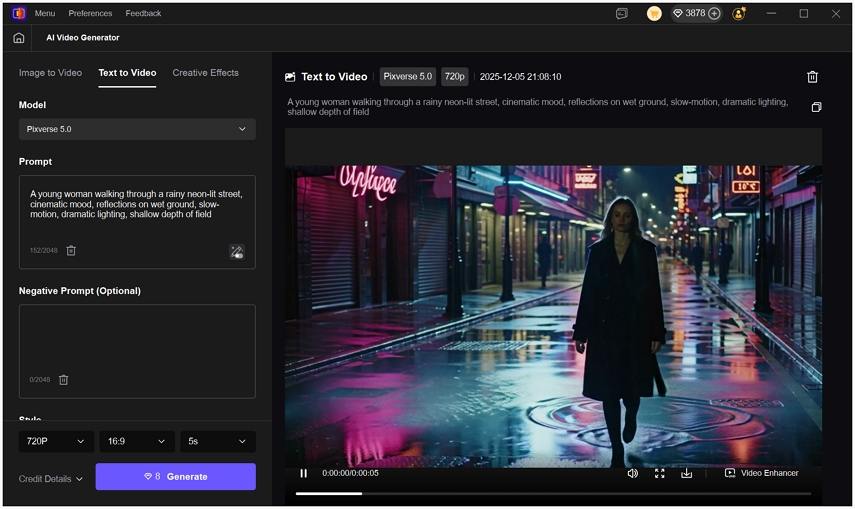

Step 3. Choose Your Model:

Select a high-speed model like Kling 2.5 Turbo for near-instant results.

Step 4. Output Settings:

Set up the video duration, aspect ratio, and resolution. You can also select a style such as Anime.

Step 5. Generate & Enhance:

Click "Generate." Once generation finishes, download the video to your device, or click the Video Enhancer button to send the clip directly to the Enhancer module to sharpen details or remove noise.

Conclusion

TurboDiffusion marks a defining moment in the evolution of AI video generation. By solving the long-standing speed bottleneck of diffusion models, it pushes AI video from experimental to practical.

As acceleration-first design becomes the industry standard, tools like HitPaw VikPea demonstrate how these breakthroughs translate into real-world creative workflows - combining fast AI video generation, advanced enhancement, and multiple optimized models in one platform.
