Best Open Source AI Video Generators You Should Try in 2026

Artificial intelligence has transformed video production, giving creators access to tools that can generate animations, cinematic clips, and visual effects with minimal effort. Among these tools, open source AI video generators are especially popular because they are free to use, customizable, and supported by large developer communities. This article explores the best open source AI video generators available today, their unique strengths, and how they can empower content creators. Finally, we show you how to take your AI-generated videos to the next level with a professional video enhancement tool that improves clarity and resolution.

Part 1: What Are Open Source AI Video Generators?

Open source AI video generators are software tools released under open licenses, allowing anyone to access, modify, and improve the underlying code. They use machine learning models trained on large datasets to transform prompts, whether text or images, into video outputs.

The benefits are clear:

- Cost-effectiveness - no expensive subscriptions.

- Flexibility - creators can fine-tune models on specific styles or datasets.

- Transparency - code and model weights are open for review, although few projects publish their full training data.

- Community Support - active forums, GitHub repos, and Hugging Face spaces.

However, they also present challenges. Many require powerful GPUs, setup can be technical, and most produce only short clips. This makes pairing them with video enhancement software crucial for professional use.
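To see why GPU memory is usually the first hurdle, here is a rough back-of-the-envelope sketch of how much memory a model's weights alone occupy. The parameter counts in the loop are hypothetical examples, not official figures for any model in this list, and real usage is higher because activations, text encoders, and VAE decoders also need memory.

```python
def weight_memory_gib(num_params: float, bytes_per_param: int = 2) -> float:
    """Memory needed just to hold the weights, in GiB (1 GiB = 2**30 bytes).

    bytes_per_param: 4 for fp32, 2 for fp16/bf16, 1 for 8-bit quantization.
    Activations, text encoders, and VAE decoders need extra memory on top.
    """
    return num_params * bytes_per_param / 2**30


# Hypothetical parameter counts, chosen only to show how memory scales.
for billions in (1.3, 5.0, 14.0):
    fp16 = weight_memory_gib(billions * 1e9, 2)
    fp32 = weight_memory_gib(billions * 1e9, 4)
    print(f"{billions:>5.1f}B params: ~{fp16:.1f} GiB (fp16), ~{fp32:.1f} GiB (fp32)")
```

Halving the bytes per parameter (fp32 to fp16) halves the weight memory, which is why half-precision and quantized checkpoints matter so much on consumer GPUs.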

Part 2: Top Open Source AI Video Generators

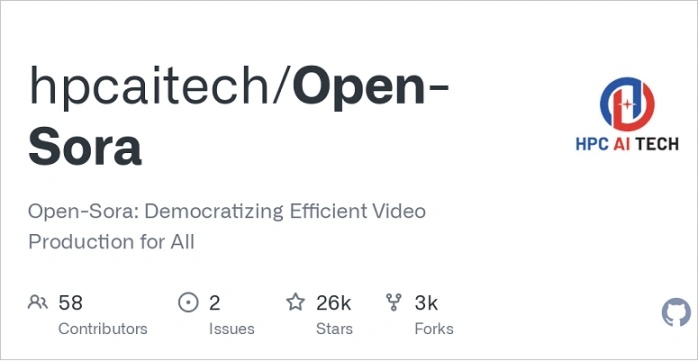

1. OpenAI Sora Community Implementations

Although OpenAI's official Sora model is not open source, many developers have created community-driven reimplementations that approximate its functionality. These tools focus on physics-aware video generation, producing realistic human and object movements. With Sora-inspired systems, you can input detailed prompts like "a golden retriever playing fetch on the beach at sunset" and receive short cinematic clips that reflect real-world physics.

- Physics-Aware Motion: Better handling of object interactions and natural movement.

- Multi-Framework Support: Implementations exist for PyTorch and JAX backends.

- Lightweight Deployment: Designed so smaller teams can run experiments on mid-range hardware.

- Expandable Pipelines: Plugin architecture for motion priors, style adapters, and custom samplers.

Best For: researchers and advanced creators who want cutting-edge text-to-video performance.

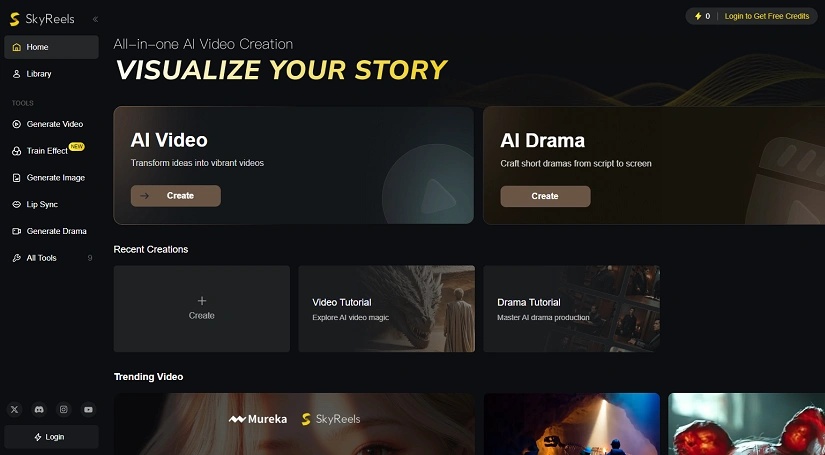

2. SkyReels

SkyReels V2 is a lightweight, open source model designed for fast and creative short-form content. It's widely available on Hugging Face, with a thriving developer community that frequently updates pre-trained checkpoints. SkyReels focuses on speed and simplicity, generating stylized video sequences in just seconds. Its playful aesthetic makes it popular among meme creators, indie filmmakers, and social media content designers.

- Transformer-style Prompt Encoding: Better comprehension of complex prompts.

- Improved Frame Consistency (V2): Reduces flicker and character drift across frames.

- Low Resource Footprint: Designed for desktop usage without enterprise GPUs.

- Style Controls and Prompt Extensions: Allow fine-grained control over camera motion, color palette, and mood.

Best For: TikTok or Instagram creators who want to experiment with fast-turnaround AI video content.
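Flicker and character drift are easier to compare across model versions or settings when you turn them into a number. A crude proxy, which is not the metric SkyReels or any published benchmark uses, is the mean absolute pixel difference between consecutive frames. The sketch assumes frames have already been decoded into flattened grayscale values between 0 and 1.

```python
from typing import Sequence

Frame = Sequence[float]  # flattened grayscale pixels in [0, 1]


def mean_abs_diff(a: Frame, b: Frame) -> float:
    """Average absolute per-pixel change between two frames."""
    if len(a) != len(b):
        raise ValueError("frames must have the same number of pixels")
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


def flicker_score(frames: Sequence[Frame]) -> float:
    """Average frame-to-frame change; lower means steadier output.

    Real camera or subject motion also raises this score, so only compare
    clips generated from the same prompt and scene.
    """
    if len(frames) < 2:
        return 0.0
    diffs = [mean_abs_diff(frames[i], frames[i + 1]) for i in range(len(frames) - 1)]
    return sum(diffs) / len(diffs)


# Toy 4-pixel clips: one steady, one alternating in brightness.
steady = [[0.5, 0.5, 0.5, 0.5]] * 4
flickery = [[0.5] * 4, [0.9] * 4, [0.5] * 4, [0.9] * 4]
print(flicker_score(steady), flicker_score(flickery))
```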

3. Stable Video Diffusion

Created by Stability AI, Stable Video Diffusion builds on the success of Stable Diffusion for images. Its released checkpoints turn a still image into a short moving sequence; for text-driven work, creators typically generate that still with a text-to-image model first. With features like smooth frame interpolation and style control, creators can generate animations that look hand-drawn or cinematic depending on the settings. Because its model weights are openly available, developers can fine-tune it on their own datasets to adapt styles like anime, film noir, or 3D renders.

- Image→Video Pipeline: Animate still images or create motion from prompt sequences.

- Large Community & Tooling: Many community forks, samplers, and style checkpoints available.

- Style and Control: Strongest in applying visual styles (painterly, anime, photoreal) consistently across frames.

- Temporal Conditioning & Flow Guidance: Uses motion guidance or optical flow to maintain frame coherence.

Best For: animators, experimental creators, and developers who want a versatile and hackable model.
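A practical first step in an image-to-video workflow is fitting your still image to the resolution the model expects, so nothing gets stretched. The sketch below computes the largest centered crop with the right aspect ratio. It assumes a 1024 x 576 target, the resolution the Stable Video Diffusion image-to-video checkpoints are commonly run at; check the model card of the checkpoint you actually use.

```python
def center_crop_box(width: int, height: int,
                    target_w: int = 1024, target_h: int = 576) -> tuple[int, int, int, int]:
    """Largest centered crop of a width x height image that matches the
    target aspect ratio, returned as (left, top, right, bottom) in pixels."""
    if width * target_h > height * target_w:
        # Source is wider than the target aspect: trim left and right.
        crop_w, crop_h = height * target_w // target_h, height
    else:
        # Source is taller than (or equal to) the target aspect: trim top and bottom.
        crop_w, crop_h = width, width * target_h // target_w
    left = (width - crop_w) // 2
    top = (height - crop_h) // 2
    return left, top, left + crop_w, top + crop_h


print(center_crop_box(1920, 1080))  # already 16:9, nothing is trimmed
print(center_crop_box(1000, 1000))  # square photo, top and bottom are trimmed
```

With Pillow, the box can be applied as `img.crop(box).resize((1024, 576))` before handing the image to the model.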

4. ModelScope Text-to-Video

Developed by Alibaba's DAMO Academy, ModelScope T2V is one of the earliest and most accessible text-to-video generators. It's hosted on Hugging Face, making it easy to try in-browser without local installation. While its output is limited to short clips of about 2 to 5 seconds, it provides reliable prompt adherence and is a good entry point for beginners. Many creators also use it as a base layer, then enhance and extend the clips with editing software.

- User-Friendly Pipeline: Ready-made scripts and hosted demos for instant trials.

- Stable Diffusion-style Architecture: Uses a diffusion architecture optimized for text prompt fidelity.

- Hugging Face & ModelScope Integration: Easy to grab weights, try in browser, or run notebooks.

- Fine-Tuning & Transfer: Supports common fine-tuning techniques like LoRA.

Best For: educators, hobbyists, and first-time users experimenting with AI video.
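LoRA keeps a model's original weight matrix W (size d x k) frozen and trains two small matrices, B (d x r) and A (r x k), whose product is added on top: W' = W + (alpha / r) * B A. The alpha / r scaling follows the common convention from the LoRA paper and popular libraries. The toy, pure-Python sketch below shows the merge and why LoRA is cheap to train; it is an illustration, not ModelScope's fine-tuning code.

```python
def matmul(a: list[list[float]], b: list[list[float]]) -> list[list[float]]:
    """Multiply two matrices stored as lists of rows."""
    return [[sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def lora_merge(w, b, a, alpha: float, rank: int):
    """Return W + (alpha / rank) * (B @ A), the weight used at inference time."""
    delta = matmul(b, a)
    scale = alpha / rank
    return [[w[i][j] + scale * delta[i][j] for j in range(len(w[0]))]
            for i in range(len(w))]


def trainable_params(d: int, k: int, rank: int) -> dict[str, int]:
    """Parameters trained by full fine-tuning vs. LoRA for one d x k layer."""
    return {"full": d * k, "lora": rank * (d + k)}


# A 1024 x 1024 layer with rank 8 trains about 1.6% of the full parameter count.
print(trainable_params(1024, 1024, 8))
```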

5. VideoCrafter

VideoCrafter is a multi-modal open source framework focused on producing short cinematic clips from text, still images, or by continuing existing video. It emphasizes preserving image detail while adding natural motion - a good fit when you want to animate illustrations or produce narrative snippets with coherent scene structure.

- Multi-Modal Generation: Text→video, image→video (animate a single image), and video continuation (extend an input clip).

- Diffusion-Based Core: Uses diffusion techniques to add motion while preserving composition and texture of the source.

- Cinematic Output: Tunable to produce story-driven clips with good spatial and temporal coherence.

- Customizable Parameters: Resolution, frame rate, number of diffusion steps, and guidance scale for prompt adherence.

- Plug-in Friendly: Commonly integrated with FFmpeg for encoding, plus optional post-processing hooks for color grading.
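In diffusion-based generators, "guidance scale" usually refers to classifier-free guidance: at each denoising step the model predicts noise with and without the prompt, and the final prediction is pushed from the unconditional result toward the prompted one. The sketch shows that standard formula on made-up numbers, plus the simple frame-count and frame-rate arithmetic behind clip length; we have not checked VideoCrafter's exact implementation, so treat it as a general illustration.

```python
def apply_cfg(eps_uncond: list[float], eps_cond: list[float],
              guidance_scale: float) -> list[float]:
    """Classifier-free guidance for one denoising step:
    eps = eps_uncond + s * (eps_cond - eps_uncond)."""
    return [u + guidance_scale * (c - u) for u, c in zip(eps_uncond, eps_cond)]


def clip_seconds(num_frames: int, fps: float) -> float:
    """Playback length of a clip with num_frames frames at fps frames per second."""
    return num_frames / fps


# Toy noise predictions for three "pixels" (made-up numbers).
uncond = [0.0, 0.2, -0.1]
cond = [0.1, 0.2, 0.3]
print(apply_cfg(uncond, cond, 1.0))  # s = 1 gives the conditional prediction (up to rounding)
print(apply_cfg(uncond, cond, 7.5))  # s > 1 pushes further toward the prompt
print(clip_seconds(16, 8))           # 16 frames at 8 fps
```

Higher guidance usually means tighter prompt adherence at the cost of more artifacts, which is why it is exposed as a tunable parameter.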

Best For: creators who want to animate illustrations or produce short narrative snippets with coherent scene structure.
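Many generators, VideoCrafter setups included, can leave you with a folder of numbered frames that still need to be encoded into a playable file. The sketch below builds a standard FFmpeg command for that step using well-known FFmpeg options; the frame naming pattern `frames/frame_%04d.png` and the helper name are hypothetical, so adjust them to whatever your pipeline writes.

```python
import shlex


def ffmpeg_encode_cmd(frame_pattern: str, fps: int, output: str, crf: int = 18) -> list[str]:
    """Build an FFmpeg command that encodes numbered PNG frames to an H.264 MP4."""
    return [
        "ffmpeg",
        "-y",                     # overwrite the output file without asking
        "-framerate", str(fps),   # frame rate of the input image sequence
        "-i", frame_pattern,      # numbered frames, e.g. frames/frame_%04d.png
        "-c:v", "libx264",        # H.264 encoder
        "-crf", str(crf),         # quality: lower is better (and larger)
        "-pix_fmt", "yuv420p",    # widest player compatibility
        output,
    ]


cmd = ffmpeg_encode_cmd("frames/frame_%04d.png", 8, "clip.mp4")
print(shlex.join(cmd))
# To actually encode (requires ffmpeg on PATH): subprocess.run(cmd, check=True)
```

Building the command as a list rather than a single string avoids shell-quoting problems when file names contain spaces.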

6. CogVideoX

CogVideoX belongs to the transformer-based text-to-video family and is built to scale, with an emphasis on longer contextual prompts, narrative coherence across multiple scenes, and better handling of complex actions and interactions.

- Transformer Backbone: Uses a diffusion transformer to model longer sequences and multi-step actions.

- Text-to-Video Strength: Better at following verbose descriptions with multiple actors and actions.

- Fine-Tuning Ready: Designed to accept domain-specific fine-tuning (LoRA style or full checkpoint retraining).

- Temporal Conditioning Tools: Scene segmentation and action tokens to maintain continuity.

Best For: demoing storyboards where plot continuity matters, producing concept scenes for short films, and research into language-to-visual sequence alignment.

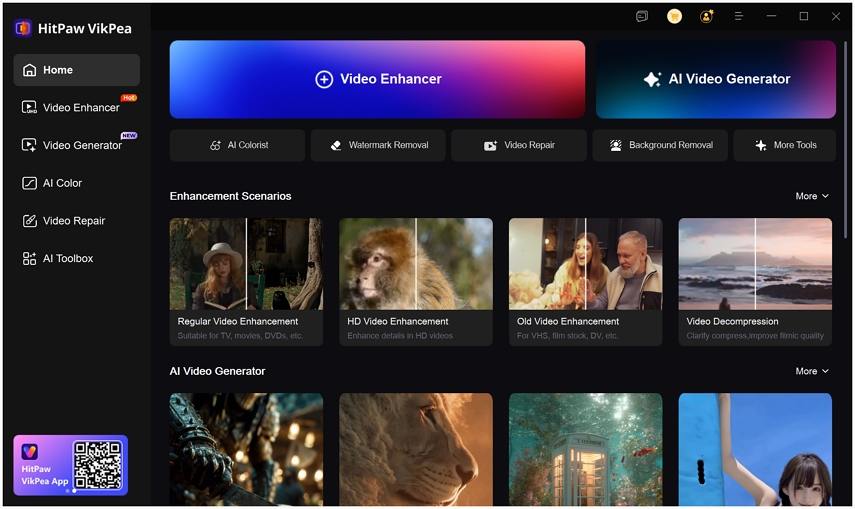

Part 3: A Practical Alternative to Open-Source AI Video Generators

Open-source AI video generators are popular for their flexibility and community-driven development, but they often require complex setup, powerful hardware, and technical expertise. For creators who want similar AI video generation capabilities without dealing with installations, dependencies, or model tuning, HitPaw VikPea offers a more accessible alternative.

HitPaw VikPea is a ready-to-use AI video generator that lets users create videos from text, images, or creative templates, while providing access to multiple advanced AI models. It bridges the gap between experimental open-source tools and polished commercial software, making AI video creation faster and more user-friendly.

Core AI Video Generator Features

- Text to Video – Turn written prompts into AI-generated videos in just a few clicks.

- Image to Video – Animate still images into dynamic video content.

- Template-Based Video Creation – Generate creative videos quickly using pre-designed templates.

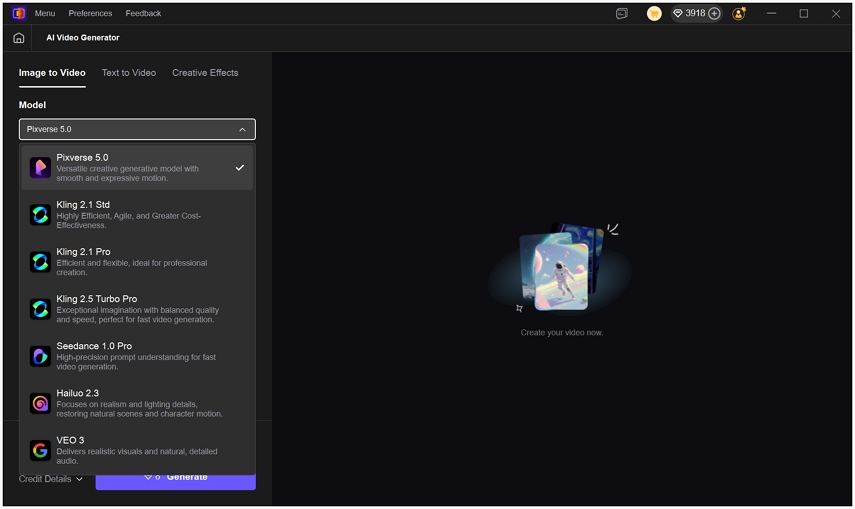

- Multiple AI Models – Supports cutting-edge AI models such as Kling 2.1, Kling 2.5 Turbo, Hailuo 2.3, Pixverse 5.0, VEO 3, and Seedance 1.0 Pro.

- Social Media–Ready Output – Export videos in multiple aspect ratios for popular platforms.

- AI Video Enhancer - The built-in video enhancer module can upscale videos to as high as 8K resolution.

- User-Friendly - Beginner-friendly interface with professional-level results.

How to Use HitPaw VikPea to Generate AI Videos

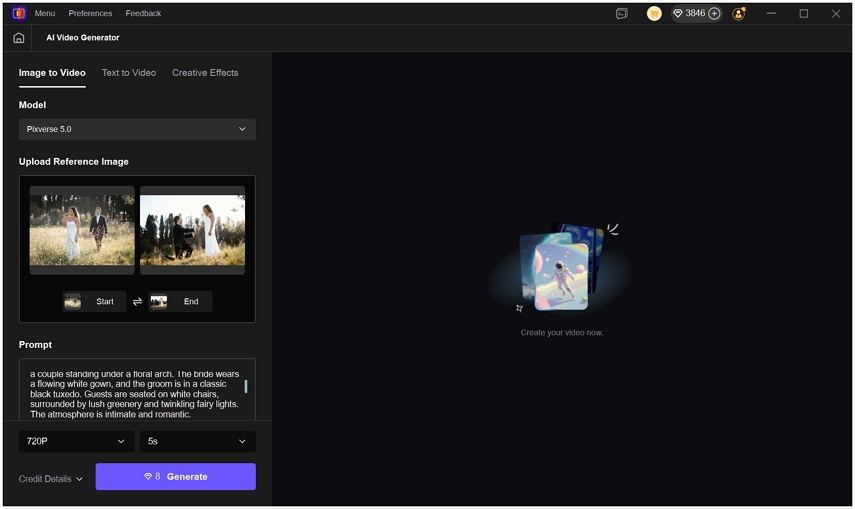

1. Launch HitPaw VikPea and choose the Video Generator module from the main interface or the left menu. Then select Image to Video, Text to Video, or Creative Effects.

2. Pick an AI model based on the video style and output quality you want.

-

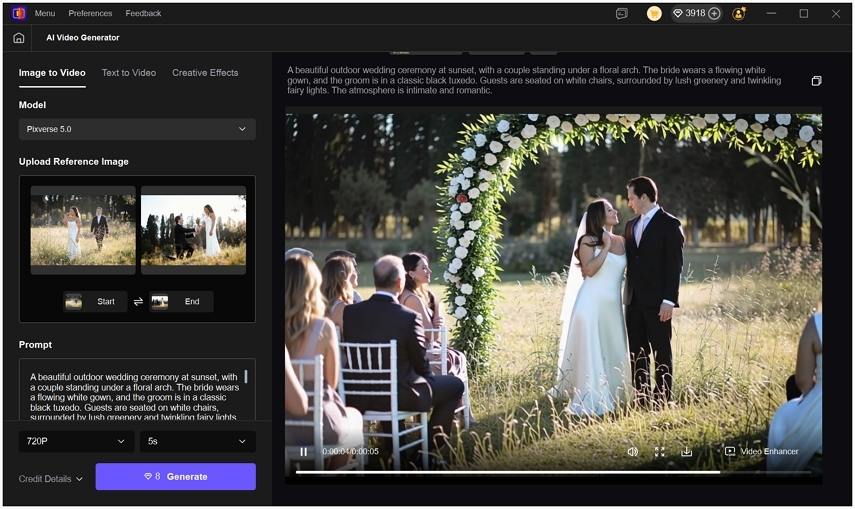

3. Upload references images and enter the prompt description.

4. Click Generate to start creating the video. When generation is complete, preview the AI-generated video and make adjustments if needed.

5. Finally, click the download icon to save the video to your computer, or import it into the Video Enhancer module to improve its quality.

How to Use HitPaw VikPea to Enhance AI Videos

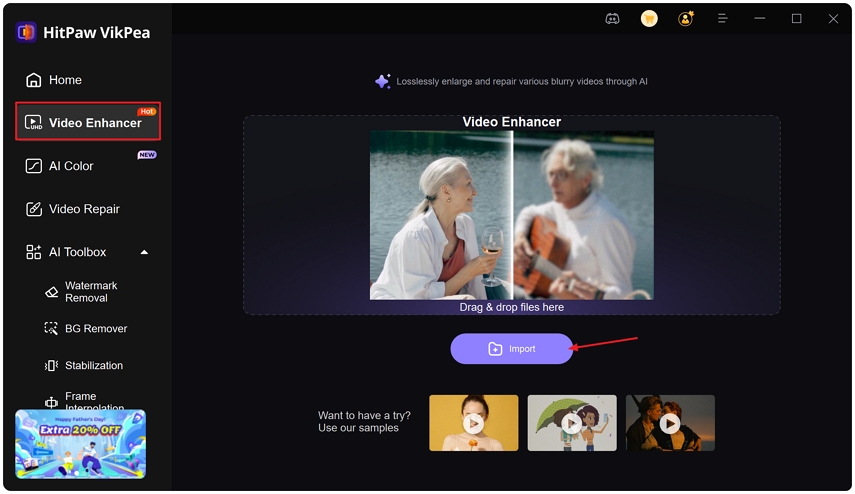

Step 1: Launch HitPaw VikPea and choose the Video Enhancer feature. Import the video you'd like to upscale.

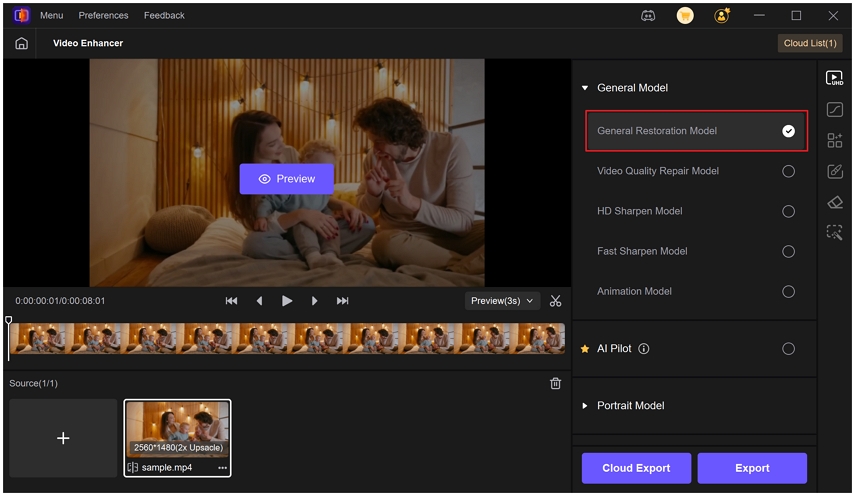

Step 2: Select an enhancement model that suits your video type. For overall quality upscaling, choose the General Restoration Model.

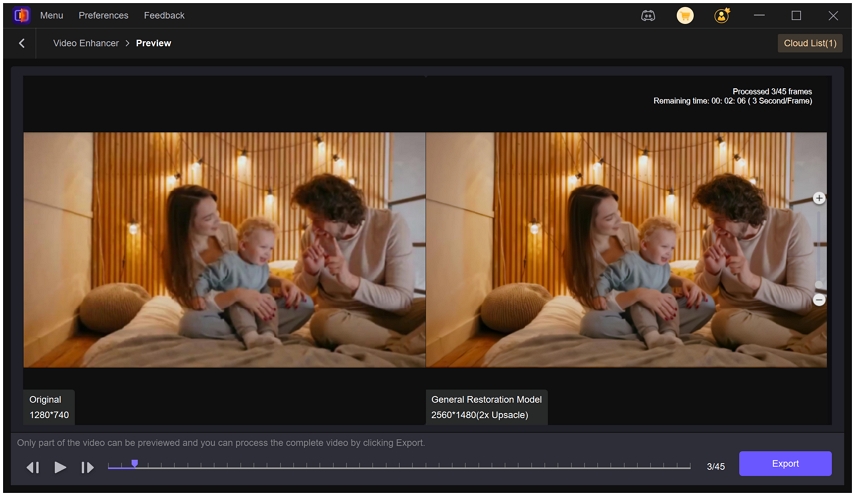

Step 3: Preview the results before exporting. Then click the Export button to save the video in 4K or 8K for professional quality.
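Before choosing 4K or 8K export, it helps to know how much the enhancer has to enlarge each frame. This sketch assumes the standard UHD sizes (3840 x 2160 for 4K, 7680 x 4320 for 8K) and fit-inside scaling that preserves the aspect ratio; it only does the arithmetic and says nothing about how VikPea's enhancement models work internally.

```python
TARGETS = {"4K": (3840, 2160), "8K": (7680, 4320)}  # UHD frame sizes in pixels


def upscale_factor(width: int, height: int, target: str) -> float:
    """Per-dimension scale needed to fit a width x height clip inside the target."""
    tw, th = TARGETS[target]
    return min(tw / width, th / height)


def upscaled_size(width: int, height: int, target: str) -> tuple[int, int]:
    """Output frame size after aspect-preserving upscaling to the target."""
    f = upscale_factor(width, height, target)
    return round(width * f), round(height * f)


for target in ("4K", "8K"):
    print(target, upscale_factor(1920, 1080, target), upscaled_size(1280, 720, target))
```

Going from 1080p to 8K is a 4x enlargement per side, which means 16 times as many pixels per frame, so expect much longer processing and larger files than a 4K export.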

FAQs About Open Source AI Video Generators

Are open source AI video generators free?

Yes, most open source models are free to use, but many need a powerful GPU for good results.

Which open source AI video generator is easiest for beginners?

ModelScope Text-to-Video is often the easiest entry point thanks to its ready-to-use pipelines and hosted demos.

Are open source AI video generators safe to use?

Yes, but always download them from trusted sources such as the official Hugging Face or GitHub repositories to avoid tampered or malicious versions.
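Where a project publishes a SHA-256 checksum for its weight files, you can confirm that your download matches it before loading anything. The sketch below uses Python's standard `hashlib`; the file name and contents in the demo are placeholders, not a real model.

```python
import hashlib
import tempfile
from pathlib import Path


def sha256_of(path, chunk_size: int = 1 << 20) -> str:
    """SHA-256 of a file, read in chunks so large weight files fit in memory."""
    h = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            h.update(chunk)
    return h.hexdigest()


def verify_checksum(path, expected_hex: str) -> bool:
    """True if the file's SHA-256 matches the published hex digest."""
    return sha256_of(path) == expected_hex.strip().lower()


# Demo with a throwaway file standing in for a downloaded checkpoint.
with tempfile.TemporaryDirectory() as tmp:
    demo = Path(tmp) / "model.safetensors"
    demo.write_bytes(b"not really a model")
    expected = hashlib.sha256(b"not really a model").hexdigest()
    demo_ok = verify_checksum(demo, expected)
    demo_bad = verify_checksum(demo, "0" * 64)

print(demo_ok, demo_bad)
```

A mismatch means the file is corrupted or not the one the project published, so delete it rather than load it.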

Conclusion

Open-source AI video generators are a great choice for developers and advanced users who want maximum control, customization, and access to cutting-edge models. However, they often come with a steep learning curve, complex setup processes, and higher hardware requirements, which can slow down content creation.

For creators who prioritize speed, ease of use, and reliable results, HitPaw VikPea stands out as a practical alternative. It delivers AI video generation, including text-to-video, image-to-video, and template-based creation, without the technical barriers of open-source tools. Combined with multiple AI models, social-media-ready exports, and built-in enhancement features, VikPea offers a balanced solution for turning ideas into high-quality videos efficiently.
